By early 2026, running a large language model (LLM) costs less than a cup of coffee per million tokens. But that doesn’t mean all providers are equal. If you’re using AI for customer service, document analysis, or internal tools, choosing the wrong model could be costing you thousands every month. The truth? You don’t need the most expensive model. You need the one that matches your task.

What You’re Really Paying For

LLM pricing isn’t just about input and output tokens anymore. It’s about how well the model handles your work, and how much waste you’re creating along the way. Most providers charge per million tokens for input (what you send in) and output (what you get back). But here’s the catch: if your model keeps misunderstanding your requests, you’ll send the same prompt five times. That’s not just slow; it’s expensive.

For example, a simple customer support chatbot might use 500 tokens per conversation. At $0.25 per million input tokens, that’s $0.000125 per chat. Sounds cheap? Now multiply that by 10,000 chats a month. You’re at $1.25. But if you pick a model that gets it wrong 30% of the time? Now you’re paying for 13,000 chats instead of 10,000. Suddenly, that $1.25 becomes $1.63. And if you’re using a premium model at $15 per million tokens? Those same 13,000 chats cost $97.50.

OpenAI: The Premium Standard

OpenAI’s gpt-5.2 series is still the benchmark for high-end tasks. Its flagship, gpt-5.2-pro, costs $21.00 per million input tokens and $168.00 per million output tokens. That’s steep. But if you’re processing legal contracts, financial reports, or complex code generation, it often pays off. Why? Accuracy. OpenAI’s models consistently score highest on benchmarks like MMLU and HumanEval. A fintech startup in Austin switched from Claude Opus to GPT-4o for regulatory document review and saw a 41% drop in human edits. That saved them $8,000 a month in labor costs, even though their API bill went up $1,200.

For lighter tasks, OpenAI offers gpt-4o mini at $0.15 per million input and $0.60 per million output. It’s fast, reliable for basic Q&A, and handles images and JSON output well. Many developers use it as the first layer in a cascade system: handle simple queries here, escalate only what’s complex.

Anthropic: The Smart Cache

Anthropic’s Claude 3.5 Sonnet charges $2.50 per million input tokens and $3.125 per million output. At first glance, that’s higher than Google’s Gemini 1.5 Flash. But Anthropic’s secret sauce? Cache pricing. If you send the same prompt twice (like “What’s our Q1 revenue?”), Claude reuses the cached prompt and charges you 25% less on the second pass. For enterprise workflows with repetitive queries (customer FAQs, internal policy checks, report templates), this can slash costs by 40-60%. A logistics company in Chicago cut its monthly AI bill from $14,000 to $5,200 by switching to Claude and restructuring its prompts to maximize cache hits.

The downside? It’s harder to predict costs. Unlike OpenAI’s flat rates, Anthropic’s cache system adds hidden variables. One developer on Reddit said, “I thought I was saving money. Then I checked the logs: half my requests weren’t caching because I changed one word in the prompt.”
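
A quick way to see why cache hit rate matters is to model the blended bill. The sketch below assumes the $2.50 input price and 25% discount quoted above; the function name, the 2,000-token prompt, and the 50,000-request volume are made-up illustration values, and real cache billing has more moving parts than this.

```python
def blended_input_cost(tokens_per_request, requests, price_per_million,
                       cache_hit_rate, cache_discount):
    """Estimate monthly input cost when a share of requests hits the cache.

    Simplifying assumption: a cache hit discounts the whole request by
    cache_discount, and a miss is billed at the full input price.
    """
    full_price = tokens_per_request * price_per_million / 1_000_000
    cached_price = full_price * (1 - cache_discount)
    hits = requests * cache_hit_rate
    misses = requests - hits
    return hits * cached_price + misses * full_price


# Hypothetical workload: 50,000 requests a month, 2,000 input tokens each.
planned = blended_input_cost(2_000, 50_000, 2.50, cache_hit_rate=0.60, cache_discount=0.25)
actual = blended_input_cost(2_000, 50_000, 2.50, cache_hit_rate=0.30, cache_discount=0.25)

print(f"Planned (60% hits): ${planned:.2f}")
print(f"Actual  (30% hits): ${actual:.2f}")
```

Running it with your own logged hit rate, rather than the rate you planned for, shows how far small prompt changes can move the bill.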

Google: The Context King

Google’s Gemini 1.5 Flash is the cheapest high-capacity option: $0.35 per million input, $1.05 per million output. But its real advantage? A 1.0 million token context window. That’s 20 times larger than most competitors. What does that mean? You can feed it an entire 500-page PDF, a year of Slack logs, or a full product manual in one go. No need to chunk, summarize, or stitch results. A legal tech firm in Denver used to pay $4,500 a month to process contracts with GPT-4o. They switched to Gemini 1.5 Flash and cut costs to $1,100, because they no longer needed three API calls per document.

The trade-off? Reasoning depth. Gemini 1.5 Flash isn’t as sharp on nuanced reasoning as Claude Sonnet or GPT-4o. It’s great for summarization and retrieval, less so for complex logic.

Meta’s Llama 3: The Budget Giant

If you’re not locked into cloud APIs, Llama 3 8B is the best-kept secret. Through third-party hosts like SiliconFlow, it costs just $0.06 per million tokens for both input and output. Yes, you read that right. Six cents. But here’s the catch: it only handles 8K context. That’s fine for chatbots or short summaries, but useless for long documents. The 70B version ($0.70 per million tokens) is better for enterprise use, but costs nearly 12x more than the 8B model.

Many startups use Llama 3 as a fallback. If a user’s query is simple (“What are your hours?”), route it to Llama 3. If it’s complex (“Explain the tax implications of this merger”), escalate to Claude Sonnet. One SaaS company in Austin reduced its AI spend by 63% using this hybrid model.

Who Wins for Your Use Case?

Here’s how to match the model to your job:

- Simple chatbots, FAQs, form filling: Use GPT-4o mini or Claude Haiku. Both cost under $0.30 per million input tokens. Haiku fails 37% of coding tasks, but 92% of “reset password” requests? Perfect.

- Document summarization, internal knowledge bases: Go with Gemini 1.5 Flash. Its massive context window cuts API calls in half. You’ll save more than you spend on the extra per-token cost.

- Legal, financial, or technical analysis: Claude 3.5 Sonnet is the sweet spot. It’s 40% cheaper than GPT-4o and matches 92% of its accuracy. Add cache reuse, and you’re at 60% savings.

- Code generation, complex reasoning: Stick with gpt-5.2-pro. It’s expensive, but if your engineers spend 20 hours a week fixing AI-generated code, you’re losing more than the API cost.

Hidden Costs No One Talks About

Most people think pricing is simple: tokens in, tokens out. Reality is messier.

- Context padding: If you send 100 tokens of prompt but your model needs 512 to work, you’re paying for 412 wasted tokens. One startup wasted $3,200/month until they trimmed their prompts.

- Token counting differences: The same sentence might be 150 tokens on OpenAI and 168 on Anthropic. That 12% difference adds up fast.

- Non-English text: Chinese, Arabic, and Japanese text use 25-40% more tokens than English. If you’re serving global users, your costs will rise even if usage stays flat.

- Retry rates: A model that’s 10% less accurate might force you to retry 3x more often. MIT’s “cost per useful output token” metric shows Claude 3.5 Sonnet beats GPT-4o on this for document processing, even at the same price.
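
To make the retry math concrete, here is a minimal sketch that reproduces the chatbot figures from earlier (500 tokens per chat, 10,000 chats a month). The function name `monthly_cost` is illustrative, and the model assumes every failed chat is retried exactly once at the same token count, which is a simplification rather than how any provider bills.

```python
def monthly_cost(chats, tokens_per_chat, price_per_million, retry_rate=0.0):
    """Monthly token cost, inflating chat volume by the share that must be retried."""
    effective_chats = chats * (1 + retry_rate)  # assumption: one retry per failed chat
    total_tokens = effective_chats * tokens_per_chat
    return total_tokens * price_per_million / 1_000_000


budget_clean = monthly_cost(10_000, 500, 0.25)
budget_retry = monthly_cost(10_000, 500, 0.25, retry_rate=0.30)
premium_retry = monthly_cost(10_000, 500, 15.00, retry_rate=0.30)

print(f"Budget model, no retries:   ${budget_clean:.3f}")
print(f"Budget model, 30% retries:  ${budget_retry:.3f}")
print(f"Premium model, 30% retries: ${premium_retry:.3f}")
```

Swapping in your own measured retry rate per model gives a rough “cost per useful answer” that is easier to compare than list prices.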

What’s Next? The Price War Is Just Starting

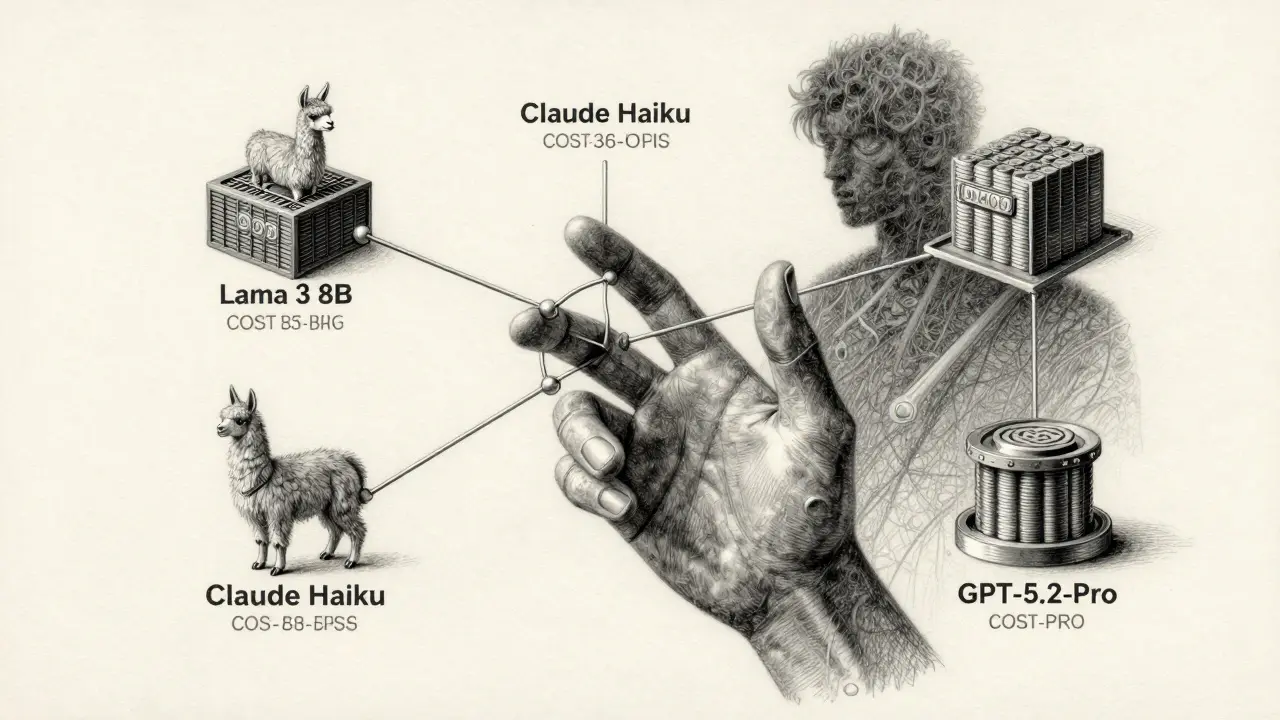

In 2023, GPT-4 cost $60 per million tokens. Today, it’s under $2. That’s a drop of about 97% in under three years. Forrester predicts another 50% drop by late 2026 as Meta, Mistral, and others push open models into enterprise.

Right now, the smartest move isn’t picking the cheapest model. It’s building a tiered system:

- Start with Llama 3 8B for simple queries.

- Use GPT-4o mini or Claude Haiku for moderate tasks.

- Reserve Claude Sonnet or GPT-4o for high-stakes reasoning.

- Let Gemini 1.5 Flash handle long documents.
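
The tiers above can be sketched as a small routing function. This is only an illustration: the token cutoffs are hypothetical, the returned strings are descriptive labels rather than real API model IDs, and a production router would more likely use a classifier or confidence score than a length check.

```python
# Hypothetical cutoffs; tune them against your own traffic.
SIMPLE_MAX_TOKENS = 50        # short queries like "What are your hours?"
LONG_DOC_MIN_TOKENS = 8_000   # beyond the 8K context of Llama 3 8B


def pick_tier(prompt_tokens: int, high_stakes: bool = False) -> str:
    """Return a tier label for a request (labels are not exact API model names)."""
    if prompt_tokens > LONG_DOC_MIN_TOKENS:
        return "gemini-1.5-flash"   # long documents go to the large context window
    if high_stakes:
        return "claude-sonnet"      # high-stakes reasoning gets the stronger model
    if prompt_tokens <= SIMPLE_MAX_TOKENS:
        return "llama-3-8b"         # cheapest tier for simple queries
    return "gpt-4o-mini"            # everything else is a moderate task


for tokens, stakes in [(5, False), (300, False), (300, True), (200_000, False)]:
    print(tokens, stakes, pick_tier(tokens, stakes))
```

Checking document length first is a deliberate choice in this sketch: a request that does not fit the context window cannot be served by the smaller tiers regardless of how simple it is.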

Which LLM provider is cheapest overall in 2026?

For raw cost, Meta’s Llama 3 8B at $0.06 per million tokens is the cheapest option available. But it only handles 8K context, so it’s useless for long documents or complex tasks. For a balance of cost and capability, Claude 3 Haiku ($0.25 input) and GPT-4o mini ($0.15 input) are the most affordable for everyday use. Google’s Gemini 1.5 Flash at $0.35 input offers the best value if you’re processing long files.

Is OpenAI still the best for accuracy?

Yes. For complex reasoning, coding, and multi-step analysis, OpenAI’s gpt-5.2 models still lead in benchmarks like MMLU and HumanEval. But for 80% of business tasks (customer service, summarization, basic data extraction), Claude 3.5 Sonnet matches 92% of GPT-4o’s accuracy at 40% lower cost. You don’t need the most accurate model unless your job demands it.

Do cache systems really save money?

Absolutely, if you’re doing repetitive tasks. Anthropic’s cache system gives 25-50% discounts on repeated queries. A company handling 50,000 customer FAQs a month with 60% repetition saved $9,000/month by switching to Claude Sonnet. But if your prompts change often, cache doesn’t help. Test your workflow before committing.

Should I use multiple providers?

Most enterprises already do. Using a cascade (like Llama 3 for simple queries, Claude Sonnet for medium tasks, and GPT-4o for high-stakes analysis) cuts costs by 60-80%. It’s not about picking one winner. It’s about matching the right tool to the job. Companies using multi-provider setups are 3x more likely to stay under budget.

How do I avoid wasting money on context windows?

Always trim your prompts. Don’t send full documents unless you need them. Use summarization tools to shorten input. Avoid padding prompts with filler like “Please think step by step.” A 2026 study found 68% of developers wasted 30-50% of their budget on unnecessary context. Start small. Add context only when accuracy drops.
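
One way to act on “start small” is to cap context with an explicit token budget. The sketch below is a rough illustration: `estimate_tokens` uses a crude four-characters-per-token heuristic for English (real tokenizers differ by provider), and `build_prompt` and its parameters are hypothetical names, not part of any SDK.

```python
def estimate_tokens(text: str) -> int:
    """Very rough estimate: about 4 characters per token for English text."""
    return max(1, len(text) // 4)


def build_prompt(question: str, context_chunks: list[str], budget_tokens: int):
    """Append context chunks in priority order until the token budget is reached."""
    parts = [question]
    used = estimate_tokens(question)
    for chunk in context_chunks:
        cost = estimate_tokens(chunk)
        if used + cost > budget_tokens:
            break  # stop at the first chunk that would exceed the budget
        parts.append(chunk)
        used += cost
    return "\n\n".join(parts), used


# Three 400-character chunks (about 100 estimated tokens each), most relevant first.
chunks = ["A" * 400, "B" * 400, "C" * 400]
prompt, used = build_prompt("What is our refund policy?", chunks, budget_tokens=250)
print(f"Estimated tokens sent: {used}")
```

Raising `budget_tokens` only when answer quality drops keeps the extra context tied to a measurable benefit.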

Will prices keep dropping?

Yes. OpenAI, Anthropic, and Google are all under pressure from open-source models like Llama 3. Forrester predicts another 50% drop by Q4 2026. By end of 2026, GPT-4 quality could cost as little as $0.10-$0.15 per million tokens. If you’re not building flexible systems now, you’ll get left behind.