Large language models don’t learn like humans. They don’t read books with curiosity or ask questions when something doesn’t make sense. Instead, they process trillions of words pulled from the internet and guess what comes next, over and over again. No teachers. No hand-labeled examples. Just raw text, massive compute, and a simple rule: predict the next word. This is self-supervised learning, and it’s the foundation that made models like GPT-4, Claude 3, and Llama 3 so powerful.

What Self-Supervised Learning Actually Means

Think of it like filling in the blanks in a giant, messy textbook. You’re given a sentence like "The cat sat on the ___" and asked to guess the missing word. Nobody tells the model ahead of time that the answer is "mat". It makes a guess, compares it with the word that actually appears in the text, and adjusts. After millions of similar sentences ("The dog sat on the porch," "The bird sat on the branch"), it starts to recognize that "mat" is far more likely than "giraffe" or "Tuesday".

This is the core of self-supervised learning: the data itself provides the lesson. No human needs to label every sentence. No one has to say, "This is correct grammar," or "This is a fact." The model figures it out by noticing what usually appears together. It learns that "Paris" often appears near "capital of," that "quantum computing" tends to appear near "qubits" and "superposition," and that "I am feeling" is usually followed by an emotion like "tired" or "excited."

This method is radically different from older AI approaches. Back in the 2010s, models needed humans to manually tag data: "This email is spam," "This sentence is positive sentiment." That was slow, expensive, and limited. Self-supervised learning throws all that out. It uses whatever text is available: Reddit threads, Wikipedia pages, code repositories, news articles, forum posts, books scraped from the web. The more, the better.

The Transformer: The Engine Behind the Learning

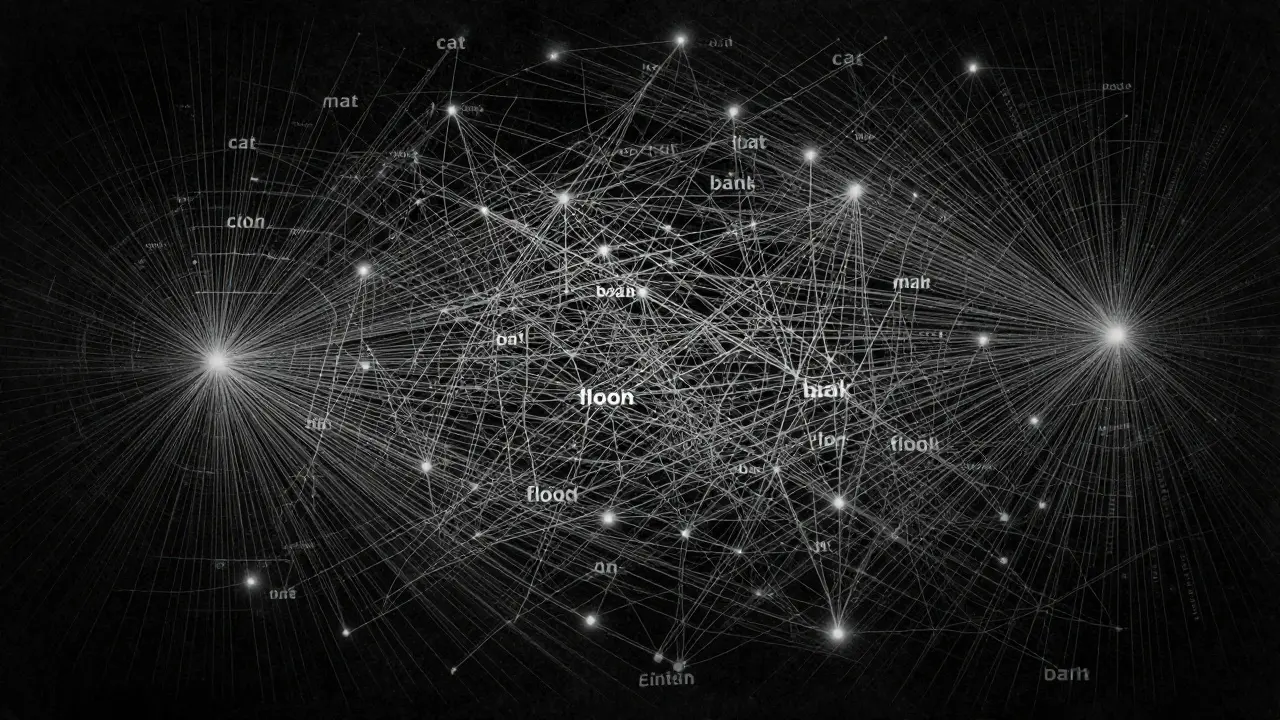

Self-supervised learning existed before, but it couldn’t scale. That changed in 2017, when Google researchers introduced the Transformer architecture. Before Transformers, the dominant models (recurrent networks) processed text one word at a time, like reading a sentence left to right while remembering what came before. That made them slow and limited in how much context they could hold.

The Transformer flipped that. It looks at the whole sentence at once. Rather than stepping through words in order, it computes attention weights that measure how strongly each word relates to every other word. So in the sentence "The bank was closed because of the flood," the model can link "bank" directly to "flood," even though several words separate them. It learns context through relationships between words, not just their sequence.

This parallel processing is what made internet-scale training possible. Instead of waiting weeks to train on a billion words, you can now train on trillions in months using thousands of GPUs. Models like GPT-3 (175 billion parameters) and Llama 3.1 (405 billion parameters) rely entirely on this architecture. Without Transformers, self-supervised learning would still be stuck in the lab.

How Much Data Are We Talking About?

Let’s put scale into perspective. GPT-3 trained on about 300 billion tokens. A token can be a word, part of a word, or even punctuation. Llama 3? It trained on 15 trillion tokens. That’s 50 times more. To give you an idea: the entire English Wikipedia contains about 4 billion words. Treating a token as roughly one word, Llama 3’s training data is equivalent to reading the entire English Wikipedia about 3,750 times.

This data isn’t clean. It’s messy. It includes typos, memes, conspiracy theories, broken HTML, outdated medical advice, and hate speech. The preprocessing step is brutal. Teams remove duplicates, filter out low-quality pages, block known spam domains, and normalize formatting. But filters are imperfect, and plenty of bad material still reaches the model. And that’s where problems start.

What the Model Actually Learns

You might think LLMs memorize facts. They don’t, not exactly. They learn patterns of association. If the phrase "Einstein invented" appears next to "relativity" millions of times, the model learns to link them. But if you ask it "What did Einstein invent?" it doesn’t pull from a database. It generates a response based on probability: "relativity" is the most likely word to follow.

This is why LLMs can sound smart but still get things wrong. They’re not reasoning. They’re predicting. They can write a convincing essay on climate change because they’ve seen thousands of them. But if you ask them about a recent event that didn’t appear in their training data (say, a policy change in June 2025), they may make something up. That’s called hallucination. Studies show that even the best models give false answers about 42% of the time on tricky tests like TruthfulQA. That’s not a bug. It’s a consequence of how they learn. They’re not designed to be truthful. They’re designed to be plausible.
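To make "the most likely word" concrete, here is a minimal sketch of how raw scores become a probability distribution and then a choice. The candidate words and their scores are made-up illustrations, not values from any real model, and real systems score tens of thousands of tokens rather than four.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {word: math.exp(s - peak) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}

# Hypothetical scores for candidates after "Einstein invented" (assumed values).
scores = {"relativity": 4.0, "the": 2.5, "physics": 2.0, "pizza": -1.0}
probs = softmax(scores)
best = max(probs, key=probs.get)
print(best, round(probs[best], 2))

# With nearly flat scores (a prompt the model knows little about), picking the
# top candidate still produces an answer. Nothing marks it as a guess.
unsure = softmax({"March": 0.2, "June": 0.1, "never": 0.0})
guess = max(unsure, key=unsure.get)
print(guess, round(unsure[guess], 2))
```

The second call is the interesting one: the procedure returns a word whether or not the evidence behind it is strong, which is the mechanism behind a plausible but wrong answer.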

The Hidden Costs: Compute, Energy, and Bias

Training these models isn’t just expensive. It also carries an environmental cost. GPT-3’s training released an estimated 552 metric tons of CO2. That’s roughly like 60 round-trip flights from New York to London. Llama 3? It likely used over 15,000 petaflop/s-days of compute. That’s the equivalent of running 10,000 high-end gaming PCs nonstop for over a year.

And then there’s bias. The internet is full of stereotypes, misinformation, and prejudice. When you train a model on that, it absorbs it. A 2023 study found that LLMs were far more likely to associate "nurse" with "female" and "engineer" with "male," even when the training data didn’t explicitly say that. The models learned it from repetition, not intent.

Some companies try to fix this with "constitutional AI": written principles that steer the model toward being harmless, honest, and helpful. But it’s like trying to scrub oil out of the ocean with a sponge. You can reduce it, but you can’t eliminate it without filtering the data before training, and that raises its own ethical questions.

Why Self-Supervised Learning Beats the Alternatives

Why not just use supervised learning? Because labeling text at internet scale is impractical. Imagine paying someone $1 to label 10,000 sentences, an unrealistically cheap rate. Treating each of Llama 3’s 15 trillion tokens as one item to label, the bill would still come to $1.5 billion. At a more plausible $1 per 10 labels, it reaches $1.5 trillion. That’s more than the GDP of most countries.

Unsupervised learning? It finds patterns without targets, like clustering similar news articles. But it doesn’t teach the model to predict what comes next. That’s the key. Self-supervised learning creates a clear, automatic target: the next word. That makes it far more effective for language.

Today, every major LLM uses this method. Google’s Gemini. Anthropic’s Claude. Meta’s Llama. Microsoft’s Phi-3. Even open-source models like Mistral AI’s 7B model. It’s the industry standard because it works at scale.

What Comes After Pretraining?

Self-supervised learning gives models a foundation. But a pretrained model isn’t a useful assistant yet. A model that can predict the next word doesn’t know how to follow instructions like "Write a poem about the desert" or "Explain quantum physics like I’m 10." That’s where fine-tuning comes in.

After pretraining, models go through supervised fine-tuning (SFT). Here, humans provide examples of good responses: "Q: What’s the capital of Australia? A: Canberra." The model learns to mimic those responses. Then comes reinforcement learning from human feedback (RLHF). Humans rank different outputs, and the model learns which answers people judge to be more helpful, truthful, or aligned with human values. This is how models go from "text completion engines" to "chat assistants."

But here’s the catch: most companies don’t train from scratch. They use open-source base models like Llama 3 and fine-tune them on their own data. A medical startup might fine-tune Llama 3 on 10,000 patient notes. A legal firm might train it on court rulings. That’s where real value is created.
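The ranking step in RLHF is often implemented by training a separate reward model on pairs of answers, one preferred by a human and one rejected. The sketch below shows one common pairwise loss for that purpose (a Bradley-Terry style objective). It is an illustration under assumptions: the article does not say which loss any particular vendor uses, and the scores are hypothetical numbers rather than outputs of a real reward model.

```python
import math

def preference_loss(score_preferred, score_rejected):
    """Pairwise loss -log(sigmoid(preferred - rejected)).

    Small when the reward model scores the human-preferred answer higher,
    large when it ranks the pair the wrong way round.
    """
    margin = score_preferred - score_rejected
    return math.log(1.0 + math.exp(-margin))  # same as -log(sigmoid(margin))

# Hypothetical reward scores for two answers to the same prompt.
agrees = preference_loss(score_preferred=2.0, score_rejected=0.5)
disagrees = preference_loss(score_preferred=0.5, score_rejected=2.0)
print(f"model agrees with the human ranking:    {agrees:.3f}")
print(f"model disagrees with the human ranking: {disagrees:.3f}")
```

Minimizing this loss over many ranked pairs pushes the reward model toward human preferences. The chat model is then tuned to produce answers that the reward model scores highly.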

Real-World Use and Limitations

Companies are already using these models in production. A 2024 O’Reilly survey found that 68% of businesses using LLMs fine-tuned them for internal tasks like summarizing contracts, drafting emails, or answering IT helpdesk questions. One user on Reddit reported a 22% accuracy boost on medical queries after fine-tuning Llama 3 on hospital records.

But the failures are just as common. A GitHub user complained that the base model gave wrong medical advice 30% of the time. Another reported that the model hallucinated product specs in a sales email. On G2, 67% of negative reviews cited "inconsistent factual accuracy."

The truth? LLMs are powerful tools, but they’re not reliable sources. They’re like a brilliant intern who’s read every book in the library but has never stepped outside.

The Future: What’s Next?

The next wave isn’t just bigger models. It’s smarter training. Researchers are exploring ways to reduce data waste, like using synthetic data or filtering out redundant text. Meta’s Llama 3 already improved multilingual performance by training on more non-English data. Google’s Gemini 1.5 Pro can process a million tokens at once, enough to read an entire novel in one go.

But the big question remains: can we keep scaling without hitting diminishing returns? GPT-3 scored 52% on TruthfulQA. GPT-4 scored 58%. That’s progress, but it’s slow. Meanwhile, compute costs keep rising. Some experts believe we’re nearing a wall. The future may lie in combining self-supervised learning with other techniques, like grounding models in real-time data or linking them to databases and tools. But for now, predicting the next word remains the engine driving everything.

Self-supervised learning at internet scale didn’t just change AI. It changed how we think about learning itself. Machines don’t need to be taught. They just need to be fed, and given enough time to notice the patterns.

How do large language models learn without human labels?

They use self-supervised learning, where the model predicts a hidden word (usually the next one) from the surrounding context. For example, given "The sky is ___," it learns to predict "blue" because that word appears frequently in that position across billions of training examples. No human labels are needed. The structure of the text itself provides the supervision.
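A toy version of this idea fits in a few lines. The sketch below turns raw sentences into (context, next word) training pairs with no human labeling, then predicts by counting which word most often followed a given context. Counting is a deliberately simple stand-in for a neural network, and the four-sentence corpus and the function names are invented for illustration.

```python
from collections import Counter, defaultdict

def next_word_pairs(text):
    """Yield (context, target) pairs: each word is the label for the words before it."""
    words = text.lower().split()
    for i in range(1, len(words)):
        yield tuple(words[:i]), words[i]

def train_counts(corpus, context_size=2):
    """Count which word follows each short context (a toy stand-in for training)."""
    table = defaultdict(Counter)
    for sentence in corpus:
        for context, target in next_word_pairs(sentence):
            table[context[-context_size:]][target] += 1
    return table

def predict(table, prompt, context_size=2):
    """Return the most frequent continuation seen for the prompt's last words, if any."""
    key = tuple(prompt.lower().split()[-context_size:])
    seen = table.get(key)
    return seen.most_common(1)[0][0] if seen else None

# Tiny made-up corpus; real training sets contain trillions of tokens.
corpus = [
    "the sky is blue",
    "the sky is blue today",
    "the sky is grey",
    "the sea is blue",
]
table = train_counts(corpus)
print(predict(table, "The sky is"))
```

Every label here came from the text itself: the "answer" for each context is simply the word the author wrote next.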

What’s the difference between self-supervised and supervised learning?

Supervised learning requires humans to label every training example, like tagging emails as spam or not. Self-supervised learning creates its own labels automatically by hiding part of the text, such as the next word, and asking the model to predict it. This makes it scalable and cost-effective, reducing annotation costs by up to 95% compared to supervised methods.
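To see why hand-labeling does not scale to a pretraining corpus, a back-of-the-envelope calculation helps. Both labeling rates below are hypothetical, and the sketch treats each of the 15 trillion training tokens mentioned earlier as one item to label, which is a simplification.

```python
def labeling_bill(items, labels_per_dollar):
    """Dollar cost of labeling `items` examples at a flat rate."""
    return items / labels_per_dollar

ITEMS = 15e12  # 15 trillion tokens, each counted as one item to label (assumption)

cheap = labeling_bill(ITEMS, labels_per_dollar=10_000)  # hypothetical: $1 per 10,000 labels
pricier = labeling_bill(ITEMS, labels_per_dollar=10)    # hypothetical: $1 per 10 labels
print(f"${cheap:,.0f} at the cheap rate, ${pricier:,.0f} at the pricier rate")
```

Even the cheap rate lands in the billions of dollars, while self-supervised targets cost nothing to produce beyond the compute needed to read the text.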

Why do LLMs sometimes give wrong or made-up answers?

LLMs predict the most probable next word based on patterns in training data, not facts from a database. If a question is rare or the training data is ambiguous, the model generates a plausible-sounding answer even if it’s incorrect. This is called hallucination. It’s not a bug. It’s a consequence of how they’re trained.

Can I train my own large language model?

Technically yes, but it’s not practical for most people. Training a model like Llama 3 requires over 15,000 petaflop/s-days of compute, thousands of high-end GPUs, and months of engineering work. Most organizations use pre-trained open-source models (like Llama 3 or Mistral) and fine-tune them on their own data, which is far more feasible.
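For a rough sense of the compute involved, a widely used rule of thumb estimates training cost as about 6 floating-point operations per parameter per token. The sketch below applies it to the GPT-3 figures quoted earlier (175 billion parameters, 300 billion tokens) and to a 15-trillion-token run. The 70-billion-parameter size is an assumption chosen for illustration, and the rule itself is an approximation, so the results are order-of-magnitude estimates rather than official numbers.

```python
PFLOPS_DAY = 1e15 * 86_400  # floating-point operations in one petaflop/s-day

def training_pf_days(parameters, tokens):
    """Estimate training compute with the rough rule: FLOPs ~= 6 * parameters * tokens."""
    return 6 * parameters * tokens / PFLOPS_DAY

gpt3 = training_pf_days(175e9, 300e9)      # sizes quoted earlier in this article
model_70b = training_pf_days(70e9, 15e12)  # 70B parameters is an assumed model size

print(f"GPT-3: ~{gpt3:,.0f} petaflop/s-days")
print(f"70B model on 15T tokens: ~{model_70b:,.0f} petaflop/s-days")
```

Under these assumptions, the larger run comes out well above the 15,000 petaflop/s-day floor mentioned above, which is why most teams fine-tune an existing model instead.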

Is self-supervised learning the future of AI?

For now, yes. All leading LLMs use it, and industry analysts predict 80% of enterprises will rely on foundation models trained this way by 2026. While future models may combine it with multimodal data (images, audio) or real-time tools, the core idea of predicting what comes next from massive unlabeled text will remain central for the foreseeable future.