When you ask a standard large language model (LLM) a simple question like "What’s the capital of Canada?", it spits out an answer in milliseconds. But when you ask it something complex, such as "Compare the long-term economic effects of carbon tax vs. cap-and-trade policies across OECD nations, factoring in inflation, political feasibility, and public compliance rates", something very different happens. The model doesn’t just generate a response. It thinks. And that thinking comes at a price.

These new models, called Large Reasoning Models (LRMs), were developed between 2023 and 2024 by teams at Anthropic, Google DeepMind, and Meta AI to solve problems that traditional LLMs couldn’t handle reliably. Unlike older models that guess answers based on patterns, LRMs break down hard problems into steps: generate a hypothesis, refine it, evaluate alternatives, backtrack when wrong, and finally select the best path. This structured reasoning sounds powerful, and it is. But every step adds computational weight. And that weight translates directly into cost.

How Much Does Thinking Cost?

Standard LLMs use about 1-2 FLOPs per parameter per token. That’s already heavy. LRMs? They can jump to 3-10 times that. Why? Because each reasoning step isn’t just one token. It’s multiple tokens. A single Backtrack or Evaluate action might add 5-15 extra tokens to the output. For a complex legal analysis, a 70-billion-parameter LRM might consume 2,500 tokens just to reason through the problem. A standard LLM would handle the same question in 800 tokens. At a rate of $0.0001 per token ($0.10 per 1,000 tokens), that’s $0.25 versus $0.08. Roughly a 212% cost increase for one query.
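
The arithmetic behind that comparison can be checked in a few lines of Python. This is a minimal sketch: the function name `query_cost` is illustrative, and the rate of $0.0001 per token is an assumption, chosen because it is the rate the $0.25 and $0.08 figures imply rather than a published price list.

```python
def query_cost(tokens: int, price_per_token: float) -> float:
    """Cost in dollars of a query that consumes `tokens` tokens."""
    return tokens * price_per_token


# Assumption: the per-token rate implied by the $0.25 / $0.08 figures.
PRICE_PER_TOKEN = 0.0001

lrm_cost = query_cost(2_500, PRICE_PER_TOKEN)  # reasoning model
llm_cost = query_cost(800, PRICE_PER_TOKEN)    # standard model
increase_pct = (lrm_cost - llm_cost) / llm_cost * 100

print(f"LRM: ${lrm_cost:.2f}, standard LLM: ${llm_cost:.2f}")
print(f"Cost increase: {increase_pct:.1f}%")
```

Swapping in your own token counts and your provider's actual rate gives the per-query premium for your workload.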

It gets worse. The cost doesn’t scale linearly. It grows faster than that. A 5-step reasoning chain costs about 4.2x more than a direct answer. A 10-step chain? 12.7x more. Doubling the steps roughly triples the cost. That’s not just more tokens. It’s more memory, more GPU cycles, more time spent maintaining internal state. The University of Pennsylvania found that LRMs need 40-60% more GPU memory than standard models just to hold their reasoning traces. And energy? SandGarden’s August 2025 data shows complex reasoning queries use 0.85 watt-hours per query. Simple ones? 0.22. That’s nearly 4x the energy.
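
To get a feel for how steep that growth is, the two quoted multipliers can be fitted to a simple curve. The sketch below assumes a power law, cost ≈ k · steps^p. That is only an assumption: two data points cannot tell a power law from a true exponential, and the helper name `estimated_multiplier` is illustrative.

```python
import math

# Cost multipliers quoted above: (reasoning steps, cost relative to a direct answer)
(s1, c1), (s2, c2) = (5, 4.2), (10, 12.7)

# Assumed model: cost = k * steps**p, so p = log(c2 / c1) / log(s2 / s1)
exponent = math.log(c2 / c1) / math.log(s2 / s1)
k = c1 / s1 ** exponent


def estimated_multiplier(steps: int) -> float:
    """Interpolated cost multiplier under the assumed power-law model."""
    return k * steps ** exponent


print(f"Implied exponent: {exponent:.2f}")
print(f"Estimated multiplier at 8 steps: {estimated_multiplier(8):.1f}x")
```

Under this assumption the exponent comes out at about 1.6, which is clearly superlinear. Treat any extrapolation beyond 10 steps as a rough guess.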

When Does It Pay Off?

LRMs aren’t universally better. They’re better for specific tasks. On simple facts, such as "Who won the 2024 Nobel Prize in Physics?", LRMs are wasteful. They still run their full reasoning pipeline, even when it’s unnecessary. Emergent Mind’s Q3 2025 data shows 63% of routine business queries processed through LRMs incurred a 4.7x cost premium over standard models. That’s like using a jet engine to power a bicycle.

But flip the script. For high-stakes, multi-step reasoning? LRMs win. Anthropic’s internal metrics from May 2025 found that for complex analytical tasks, like modeling the ripple effects of a new tax law, LRMs achieved 28-42% higher accuracy and lower cost per unit of accuracy. Why? Because standard LLMs often get it wrong, then retry, and every retry is billed. LRMs are designed to get it right the first time more often. The break-even point? Around 17-23 reasoning steps per task, according to SandGarden’s September 2025 whitepaper. If your use case needs that many steps, LRMs save money. If it doesn’t? You’re bleeding cash.
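
The "cost per unit of accuracy" argument can be made concrete with a simple retry model. The sketch below assumes each attempt succeeds independently with a fixed probability and that you retry until you get a correct answer, so the expected cost is the per-attempt cost divided by that probability. The accuracy values are hypothetical placeholders, not measured figures, and the function names are illustrative.

```python
def expected_cost_per_correct(cost_per_attempt: float, p_correct: float) -> float:
    """Expected spend to obtain one correct answer, retrying until success."""
    if not 0 < p_correct <= 1:
        raise ValueError("p_correct must be in (0, 1]")
    return cost_per_attempt / p_correct


def lrm_is_cheaper(llm_cost: float, llm_p: float, lrm_cost: float, lrm_p: float) -> bool:
    """True when the LRM has the lower expected cost per correct answer."""
    return expected_cost_per_correct(lrm_cost, lrm_p) < expected_cost_per_correct(llm_cost, llm_p)


# Hypothetical accuracies; per-query costs reuse the $0.08 / $0.25 example.
print(lrm_is_cheaper(0.08, 0.25, 0.25, 0.90))  # hard task: standard model is rarely right
print(lrm_is_cheaper(0.08, 0.60, 0.25, 0.90))  # easier task: standard model is usually right
```

Under this model the LRM only wins when its accuracy advantage is larger than its price premium, which is why it pays off on hard tasks and loses on easy ones.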

Real-World Cost Surprises

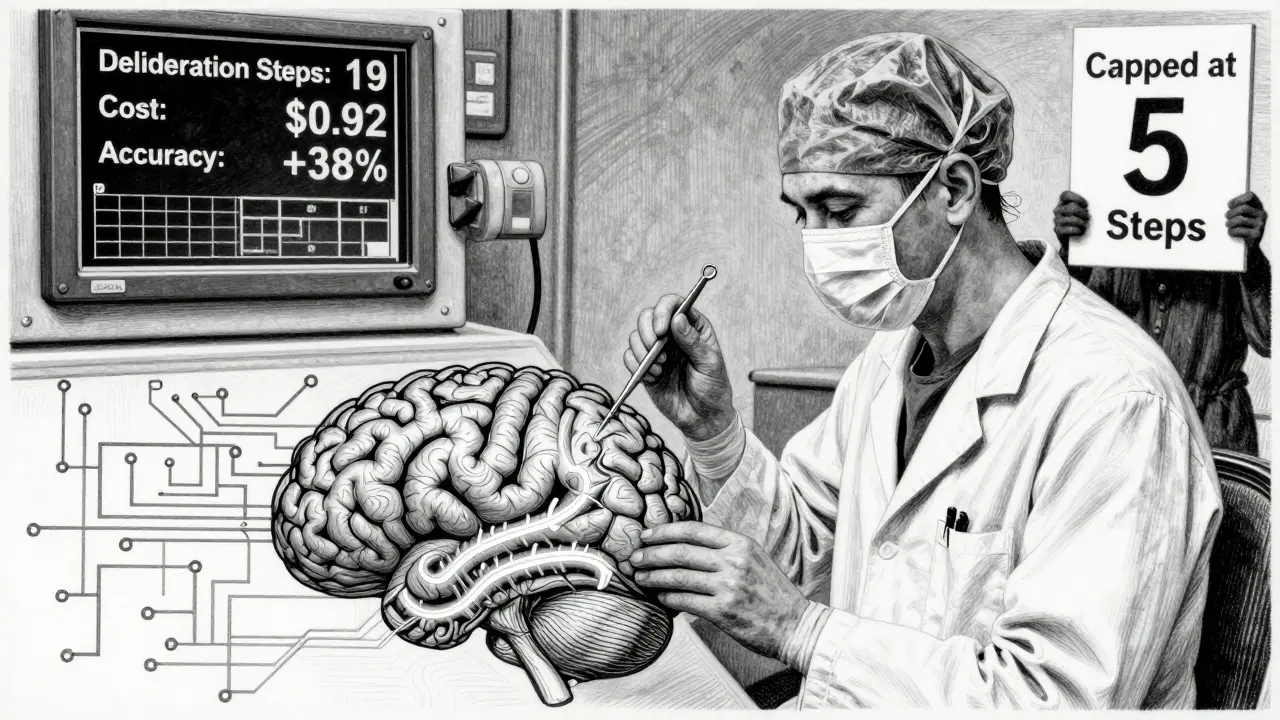

Companies didn’t expect how fast costs could spiral. A senior AI engineer at a Fortune 500 financial firm reported spending $287,000 per month on LRM operations before implementing controls. After setting limits on reasoning depth, they dropped to $92,000 while keeping 92% of their accuracy. Another team on GitHub cut costs by 68% using "deliberation budgeting," where each query gets a fixed number of reasoning steps based on its type.

But the worst cases? Uncontrolled reasoning loops. One Reddit user reported a single query consuming $17.30 in compute costs because the model kept generating new sub-steps, never reaching a conclusion. That’s more than some people pay for a full month of cloud services. A CTO on Trustpilot shared that one earnings analysis query cost $214, more than their entire monthly budget for that service line.
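
A guard against runaway loops like these can be sketched as a wrapper that enforces both a step cap and a cost ceiling. Everything here is hypothetical scaffolding rather than any vendor's API: `step_fn` stands in for whatever call produces one reasoning step and is assumed to return the tokens it used and whether the model has reached a conclusion.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class DeliberationBudget:
    max_steps: int          # hard cap on reasoning steps
    max_cost_usd: float     # hard cap on spend for one query
    price_per_token: float  # assumed flat per-token rate


class BudgetExceeded(Exception):
    """Raised when a query hits its step cap or cost ceiling."""


def run_with_budget(
    step_fn: Callable[[int], Tuple[int, bool]],
    budget: DeliberationBudget,
) -> Tuple[int, float]:
    """Run reasoning steps until done; return (steps used, dollars spent)."""
    spent = 0.0
    for step in range(1, budget.max_steps + 1):
        tokens, done = step_fn(step)  # hypothetical: one reasoning step
        spent += tokens * budget.price_per_token
        if spent > budget.max_cost_usd:
            raise BudgetExceeded(f"cost ceiling hit at step {step}: ${spent:.2f}")
        if done:
            return step, spent
    raise BudgetExceeded(f"no conclusion after {budget.max_steps} steps")
```

A caller would catch `BudgetExceeded` and fall back to a cheaper path, for example returning the best partial answer or escalating to a human, instead of letting the query keep spending.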

Gartner’s Hype Cycle for AI Infrastructure labeled LRMs as being in the "Peak of Inflated Expectations" in August 2025. Their data? 73% of enterprises that deployed LRMs without cost controls saw their AI budgets spike 40-220% within six months. This isn’t a bug. It’s a design flaw if left unchecked.

Optimizing the Cost of Thought

There are ways to fix this. The best approach is hybrid deployment: use standard LLMs for simple queries, and reserve LRMs for tasks that truly need deep reasoning. According to IEEE’s November 2025 survey of 317 organizations, 76% of enterprises already do this. Google’s December 2025 best practices recommend:

- 3-5 steps for factual analysis

- 5-8 steps for strategic planning

- 8-12 steps for complex policy evaluation

Beyond those ranges, returns drop sharply. Professor David Kim of Stanford found that beyond five steps, each additional step is more than 80% inefficient. You’re not gaining much accuracy. You’re just burning more electricity.
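
A hybrid deployment with the step ranges listed above can be sketched as a small routing table. The task-type labels and model names below are placeholders, and the sketch assumes some upstream classifier has already labeled each query. It uses the upper bound of each recommended range as the cap.

```python
from typing import NamedTuple, Optional


class Route(NamedTuple):
    model: str                # placeholder names, not real model identifiers
    max_steps: Optional[int]  # None when no multi-step reasoning is requested


# Upper bounds of the recommended step ranges, keyed by hypothetical task labels.
STEP_CAPS = {
    "factual_analysis": 5,
    "strategic_planning": 8,
    "policy_evaluation": 12,
}


def route(task_type: str) -> Route:
    """Send known reasoning-heavy task types to the LRM with a step cap;
    everything else goes to the standard model."""
    cap = STEP_CAPS.get(task_type)
    if cap is None:
        return Route("standard-llm", None)
    return Route("lrm", cap)


print(route("simple_lookup"))
print(route("policy_evaluation"))
```

Defaulting unknown task types to the standard model keeps the cheap path as the fallback, so a misclassified query costs accuracy rather than budget. Whether that trade-off is acceptable depends on the use case.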

Tools are emerging to help. Anthropic’s Reasoning Cost Dashboard (released October 2025) lets teams monitor per-query deliberation costs in real time. Microsoft’s Azure Reasoning Optimizer (November 2025) cuts costs by 52% by dynamically choosing which reasoning operators to use. Meta’s Llama-Reason 3.0 (December 2025) introduced "reasoning compression," maintaining 95% of accuracy at 40% of the previous cost. And by Q1 2026, the "Adaptive Reasoning Budget" framework will automatically adjust resource allocation during a query based on real-time cost-benefit analysis.

Who Should Use LRMs?

LRMs aren’t for everyone. They’re for organizations that:

- Handle high-stakes, multi-step decision-making (e.g., financial modeling, legal risk assessment, clinical diagnostics)

- Can afford the upfront infrastructure (a single-node LRM server with 8 NVIDIA RTX 5090-32G GPUs costs $128,000)

- Have engineers who can build cost-monitoring pipelines

- Are willing to invest 8-12 weeks learning how to control reasoning depth

Financial services (41% adoption), healthcare (33%), and government policy (29%) are the top adopters. Why? Their problems are too complex for guesswork. But even there, success depends on strict limits. One healthcare analytics company saw a 41% accuracy boost in diagnostic reasoning. The 3.2x higher costs stayed manageable because they only used LRMs for cases flagged as "high complexity."

The EU’s September 2025 AI Cost Transparency Directive now requires providers to disclose detailed reasoning cost structures. That’s a good sign. It means the industry is finally treating deliberation cost like a first-class metric, not an afterthought.

The Future: Cheaper Thinking

The good news? Costs are coming down. The AI Infrastructure Consortium forecasts a 65-75% reduction in deliberation costs over the next 18 months. New hardware, smarter algorithms, and better control systems are making reasoning more efficient. But the path forward isn’t universal reasoning. It’s selective reasoning. The models that win won’t be the ones that think the hardest. They’ll be the ones that think just enough.

Think of it like a surgeon. You don’t want them to operate with their eyes closed. But you also don’t want them to run a full-body scan on every patient who comes in with a sprained ankle. The best doctors know when to dig deep and when to keep it simple.

Are internal deliberation costs the same across all LRM implementations?

No. Costs vary widely based on architecture. Models using token-efficient reasoning, like FoReaL-Decoding, cut deliberation costs by 37% compared to naive chain-of-thought approaches. Open-source frameworks like ReasonGPT often lack optimization, while commercial systems from Anthropic and OpenAI include built-in cost controls. The way operators are chained, how state is stored, and whether the model can prune unnecessary steps all affect cost.

Can I use LRMs for customer service chatbots?

Only for complex cases. For 90% of customer queries, such as "Where’s my order?" or "How do I reset my password?", a standard LLM is faster and cheaper. Reserve LRMs for edge cases where the customer needs multi-step analysis, like resolving a billing dispute involving overlapping policies. Using LRMs universally for chatbots will inflate your costs by 4-5x with little gain in satisfaction.
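
The gap between routing and "LRM for everything" is easy to quantify. The sketch below takes the 90% share and the 4-5x premium from the answer above and assumes that the premium applies uniformly to every query sent to the LRM. The function name `blended_cost` is illustrative.

```python
def blended_cost(simple_share: float, lrm_multiplier: float) -> float:
    """Average cost per query, relative to a standard LLM (= 1.0),
    when only the complex queries are sent to the LRM."""
    complex_share = 1.0 - simple_share
    return simple_share * 1.0 + complex_share * lrm_multiplier


for multiplier in (4.0, 5.0):
    routed = blended_cost(0.90, multiplier)
    print(f"LRM premium {multiplier:.0f}x: routed average = {routed:.2f}x, "
          f"LRM for everything = {multiplier:.2f}x")
```

With 90% of traffic on the standard model, the blended average stays close to the baseline even when the LRM itself is several times more expensive.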

What’s the biggest mistake companies make with LRMs?

Deploying them without limits. Dr. Marcus Johnson of DeepMind warned that "uncontrolled reasoning expansion" is the #1 cost risk. Without step limits, timeout rules, or complexity classifiers, models can loop endlessly or generate dozens of unnecessary steps. One company reported costs jumping 2,000% on a single query because the model kept refining a simple answer. Always cap reasoning depth and monitor traces.

How do I know if my task needs an LRM?

Ask: "Does this require comparing alternatives, tracing cause-effect chains, or evaluating uncertainty?" If yes, and it takes more than two steps to solve, an LRM may be worth it. If it’s a single lookup or pattern match, stick with a standard LLM. Use the 17-23 step break-even threshold from SandGarden as a guide: if your average task needs fewer than that, you’re likely overspending.

Is there a way to reduce LRM costs without sacrificing accuracy?

Yes. Three proven methods: (1) Use hybrid architectures, with standard LLMs for simple queries and LRMs only for complex ones; (2) Implement deliberation budgeting with dynamic step limits based on query classification; (3) Adopt newer models and tools like Llama-Reason 3.0 or Azure Reasoning Optimizer that compress or prune reasoning while preserving most of the quality. Companies using these methods report 40-68% cost reductions with little or no drop in accuracy.