When a machine learning model starts underperforming in production, the problem isn’t always the code. It could be that the training data changed six months ago, and no one noticed. Or maybe the model was never properly documented, and now no one knows which version is live. Or worse - the model was supposed to be retired last quarter, but it’s still making loan decisions for thousands of customers. These aren’t edge cases. They’re everyday failures in organizations that treat models like static software.

Model Lifecycle Management (MLM) isn’t optional anymore. It’s the backbone of trustworthy AI. And at its core are three non-negotiable practices: versioning, deprecation, and sunset policies. Skip any one of them, and you’re gambling with compliance, reputation, and customer trust.

Versioning: It’s Not Just About Code

Most teams think versioning means tagging a model file with a number like v1.2.3. That’s like saying you’ve managed a car’s maintenance just because you changed the oil once. Real versioning in ML tracks everything that affects how a model behaves.

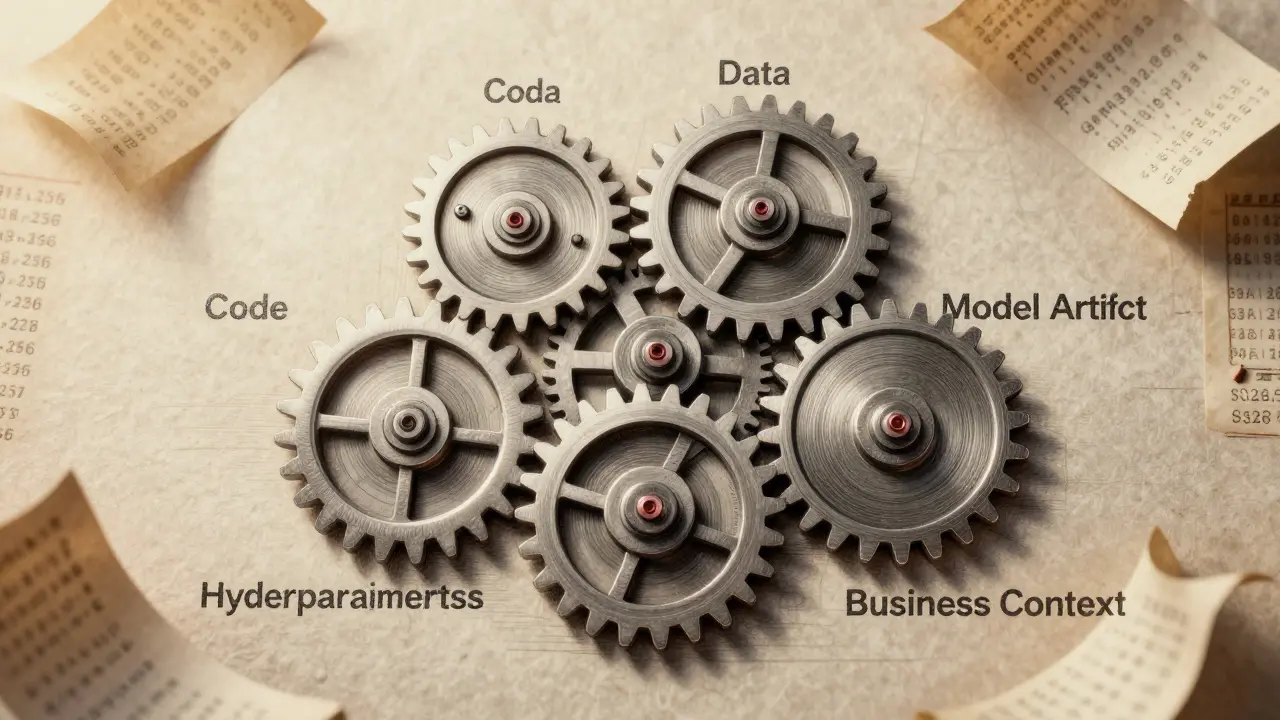

Every time you retrain a model, at least five things can change: the code, the training data, the hyperparameters, the environment (like Python version or GPU type), and the performance metrics. If you don’t capture all of them, you can’t reproduce results - and you can’t debug failures.

Enterprise platforms like ModelOp and Domino Data Lab track six dimensions of versioning. Open-source tools like MLflow? They cover maybe half. That’s why teams using MLflow alone report spending 68% of their debugging time chasing data drift - not model bugs.

Here’s what real versioning includes:

- Code and environment: Git commit hash or pipeline script version, plus the runtime it ran in (Python version, GPU type)

- Data version: SHA-256 checksum of the training dataset, down to the exact rows used

- Model artifact: The saved weights or parameters, stored with cryptographic integrity

- Hyperparameters: Learning rate, batch size, regularization - everything passed to the trainer

- Evaluation metrics: Accuracy, precision, AUC, with confidence intervals

- Business context: Who owns it? What problem does it solve? Which regulatory rule applies?
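A minimal sketch of a version record that captures the six dimensions above, plus the runtime environment mentioned earlier. The `ModelVersion` class, its field names, and the `fingerprint_rows` helper are hypothetical, not any platform’s schema. Hashing each row in a fixed order is one assumed way to pin "the exact rows used".

```python
import hashlib
import json
import platform
import sys
from dataclasses import dataclass, field, asdict


def fingerprint_rows(rows):
    """Hypothetical helper: SHA-256 over the exact training rows, in order."""
    digest = hashlib.sha256()
    for row in rows:
        # Serialize each row deterministically so identical rows always hash identically.
        digest.update(json.dumps(row, sort_keys=True).encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


@dataclass(frozen=True)
class ModelVersion:
    """Hypothetical version record; field names are illustrative only."""
    version: str
    code_commit: str        # Git commit hash of the training pipeline
    data_sha256: str        # checksum of the exact training rows
    artifact_sha256: str    # checksum of the saved weights
    hyperparameters: dict
    metrics: dict           # metric -> (point estimate, CI low, CI high)
    owner: str
    purpose: str
    regulation: str
    environment: dict = field(default_factory=lambda: {
        "python": sys.version.split()[0],
        "platform": platform.platform(),
    })


rows = [{"income": 52000, "label": 0}, {"income": 87000, "label": 1}]
weights = b"\x00\x01fake-weights"  # stand-in for a serialized model file

record = ModelVersion(
    version="1.3.0",
    code_commit="9f2c1ab",
    data_sha256=fingerprint_rows(rows),
    artifact_sha256=hashlib.sha256(weights).hexdigest(),
    hyperparameters={"learning_rate": 0.01, "batch_size": 256},
    metrics={"auc": (0.91, 0.89, 0.93)},
    owner="credit-risk-team",
    purpose="loan default scoring",
    regulation="internal model risk policy",
)
print(json.dumps(asdict(record), indent=2))
```

Because row order feeds the hash, reordering or dropping a single row produces a different `data_sha256`, which is what makes "which data trained this?" answerable later.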

Capital One cut model rollback time from 47 minutes to 82 seconds by automating version promotion. They didn’t just tag models - they tied each version to a specific business outcome and a compliance requirement. That’s versioning with purpose.
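The source doesn’t describe how Capital One’s automation works. One common way to make rollback fast is to have serving resolve a movable alias (here `production`) rather than a hard-coded version, so promotion and rollback are each a single pointer update. The `Registry` class below is a hypothetical in-memory sketch of that idea, not Capital One’s system or any vendor’s API.

```python
class Registry:
    """Hypothetical in-memory registry: serving asks for an alias, never a raw version."""

    def __init__(self):
        self.versions = set()
        self.aliases = {}   # alias -> version currently promoted
        self.history = {}   # alias -> stack of previously promoted versions

    def register(self, version):
        self.versions.add(version)

    def promote(self, alias, version):
        if version not in self.versions:
            raise KeyError(f"unknown version {version}")
        previous = self.aliases.get(alias)
        if previous is not None:
            self.history.setdefault(alias, []).append(previous)
        self.aliases[alias] = version

    def rollback(self, alias):
        # Rollback is one pointer swap back to the previously promoted version.
        stack = self.history.get(alias)
        if not stack:
            raise RuntimeError(f"nothing to roll back to for {alias}")
        self.aliases[alias] = stack.pop()
        return self.aliases[alias]

    def resolve(self, alias):
        return self.aliases[alias]


reg = Registry()
for v in ("1.2.0", "1.3.0"):
    reg.register(v)
reg.promote("production", "1.2.0")
reg.promote("production", "1.3.0")
print(reg.resolve("production"))  # 1.3.0
reg.rollback("production")
print(reg.resolve("production"))  # 1.2.0
```

MLflow’s Model Registry offers a comparable mechanism through model version aliases.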

Deprecation: When a Model Stops Being Supported

Not every model needs to live forever. But if you don’t plan for its death, it becomes a zombie - running silently, making bad decisions, and dragging down your system.

Deprecation means: "This model is no longer the recommended version. Use the next one instead." It’s not a deletion. It’s a transition plan.

Here’s how it works in practice:

- You release a new model version with better performance or lower bias.

- You mark the old version as "deprecated" in your registry.

- You notify all teams using it - data scientists, engineers, product owners.

- You give a clear timeline: "This version will stop serving traffic on June 30, 2025."

- You monitor usage. If someone’s still calling it after the deadline, you alert them.
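The five steps above can be sketched as a small status model. `Status`, `ModelEntry`, `deprecate`, and `check_call` are hypothetical names, and "notify" is reduced to returning one message per consuming team; a real setup would route those to email or chat.

```python
from dataclasses import dataclass, field
from datetime import date
from enum import Enum
from typing import Optional


class Status(Enum):
    ACTIVE = "active"
    DEPRECATED = "deprecated"


@dataclass
class ModelEntry:
    """Hypothetical registry entry; not any specific tool's schema."""
    name: str
    version: str
    consumers: list = field(default_factory=list)  # teams to notify
    status: Status = Status.ACTIVE
    replacement: Optional[str] = None
    stop_serving_on: Optional[date] = None


def deprecate(entry, replacement, stop_serving_on):
    """Mark a version deprecated and return one notice per consuming team."""
    entry.status = Status.DEPRECATED
    entry.replacement = replacement
    entry.stop_serving_on = stop_serving_on
    return [
        f"To {team}: {entry.name} {entry.version} is deprecated; use {replacement}. "
        f"It stops serving traffic on {stop_serving_on.isoformat()}."
        for team in entry.consumers
    ]


def check_call(entry, caller, today):
    """Return an alert if a caller hits a deprecated version on or after its cutoff date."""
    if (entry.status is Status.DEPRECATED
            and entry.stop_serving_on is not None
            and today >= entry.stop_serving_on):
        return f"ALERT: {caller} called {entry.name} {entry.version} after cutoff {entry.stop_serving_on.isoformat()}"
    return None


fraud = ModelEntry("fraud-detector", "2.1.0", consumers=["data-science", "payments-eng", "product"])
for notice in deprecate(fraud, "2.2.0", date(2025, 6, 30)):
    print(notice)
print(check_call(fraud, "checkout-service", date(2025, 7, 2)))
```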

Many open-source model registries let you keep every version forever. Sounds safe? It’s not. One company had 142 versions of a single fraud detection model. No one knew which one was active. That’s version sprawl - and it’s a compliance nightmare.

Regulated industries don’t have that luxury. Under FINRA Rule 4511, broker-dealers must preserve books and records for at least six years where no other retention period applies. But they also must stop using outdated models. The solution? Keep the old versions for audit, but lock them down so they can’t be re-deployed. ModelOp and Seldon do this automatically. Most open-source tools? They don’t.
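One way to "keep for audit, but lock down" is to separate read access from deployability: retired versions stay readable, but the deploy path refuses them. The `Archive` class and `ArchivedVersionError` below are hypothetical; the source doesn’t describe how ModelOp or Seldon implement this.

```python
from types import MappingProxyType


class ArchivedVersionError(Exception):
    """Raised when someone tries to deploy a version kept only for audit."""


DEPLOYABLE = {"active"}  # assumed policy: only active versions may serve traffic


class Archive:
    """Hypothetical store separating audit reads from deployment."""

    def __init__(self):
        self._records = {}

    def retain(self, version, metadata, status):
        # MappingProxyType hands auditors a read-only view of the stored metadata.
        self._records[version] = (status, MappingProxyType(dict(metadata)))

    def read(self, version):
        return self._records[version][1]

    def deploy(self, version):
        status, _ = self._records[version]
        if status not in DEPLOYABLE:
            raise ArchivedVersionError(f"{version} is {status}; retained for audit only")
        return f"deploying {version}"


archive = Archive()
archive.retain("1.0.0", {"auc": 0.84, "owner": "risk"}, status="retired")
archive.retain("2.0.0", {"auc": 0.90, "owner": "risk"}, status="active")
print(archive.read("1.0.0")["auc"])  # 0.84
print(archive.deploy("2.0.0"))       # deploying 2.0.0
```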

Deprecation windows (the time between the deprecation notice and the date the model stops serving traffic) vary by risk:

- High-risk (healthcare diagnostics, credit scoring): 30-90 days

- Medium-risk (recommendation engines, chatbots): 90-180 days

- Low-risk (internal analytics): 180-365 days

The Partnership on AI recommends context-based timelines. McKinsey says 90 days for everything. The truth? It depends on your use case. But whatever you pick - document it. Enforce it. And never let a deprecated model run in production past its date.
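One way to "document it" and "enforce it" is to keep the tiers in code next to the registry rather than in a wiki. The ranges below are copied from the list above; `plan_shutdown` and `must_stop` are hypothetical helpers, and rejecting any window outside a tier’s range is an assumed policy choice.

```python
from datetime import date, timedelta

# Allowed deprecation windows in days, per risk tier (ranges from the list above).
DEPRECATION_WINDOWS = {
    "high": (30, 90),     # healthcare diagnostics, credit scoring
    "medium": (90, 180),  # recommendation engines, chatbots
    "low": (180, 365),    # internal analytics
}


def plan_shutdown(risk_tier, deprecated_on, window_days):
    """Hypothetical helper: return the shutdown date, refusing out-of-policy windows."""
    low, high = DEPRECATION_WINDOWS[risk_tier]
    if not low <= window_days <= high:
        raise ValueError(f"{window_days} days is outside the {risk_tier}-risk range {low}-{high}")
    return deprecated_on + timedelta(days=window_days)


def must_stop(today, shutdown_date):
    """True once a deprecated model has reached its shutdown date."""
    return today >= shutdown_date


shutdown = plan_shutdown("high", date(2025, 4, 1), 90)
print(shutdown)  # 2025-06-30
```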

Sunset Policies: The Final Cut

Deprecation says: "Don’t use this anymore." Sunset says: "This model is gone. It’s been deleted. It’s not coming back."

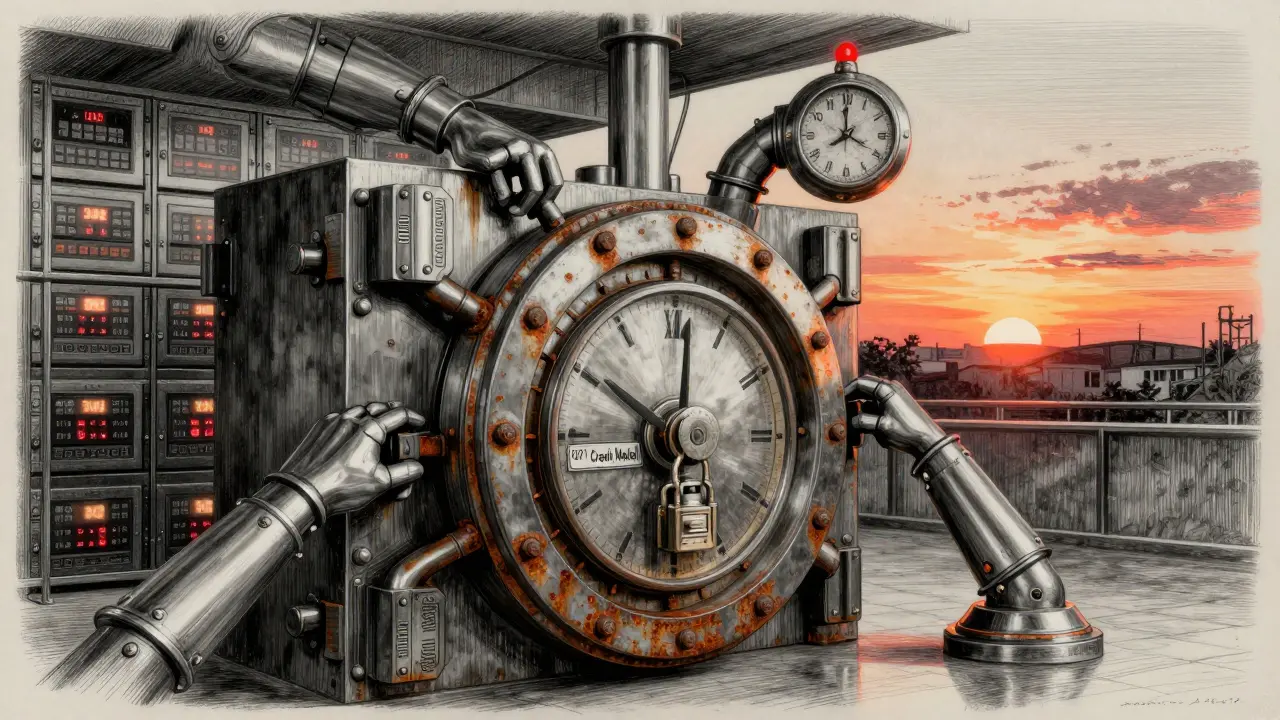

This is where most companies fail. They deprecate, but never sunset. The model sits in storage, unused, but still accessible. Someone finds it. They tweak it. They redeploy it. And suddenly, a model from 2021 is deciding who gets a mortgage.

Sunset policies are legally binding. In healthcare, FDA SaMD guidelines require that models be removable within 15 minutes of performance degradation. In finance, the EU AI Act demands that models be retired if they violate fairness thresholds. If you can’t delete or disable a model on demand, you’re not compliant.

Enterprise platforms now automate this. AWS’s Model Registry Sunset Workflows (May 2024) let you set a hard cutoff date. At midnight, traffic shifts to the replacement model. The old one goes into read-only mode. After 30 days, it’s archived. After 7 years, it’s purged - all without manual intervention.
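Stripped of vendor specifics, the schedule described there is a pure function of the date. The sketch below is hypothetical and does not use any AWS API: `lifecycle_stage`, `add_years`, and the stage names are assumptions, and it assumes both the 30-day archive step and the seven-year purge are counted from the cutoff date.

```python
from datetime import date, timedelta


def add_years(d, years):
    """Calendar-year addition; Feb 29 rolls to Mar 1 in non-leap target years."""
    try:
        return d.replace(year=d.year + years)
    except ValueError:
        return d.replace(year=d.year + years, month=3, day=1)


def lifecycle_stage(cutoff, today, archive_after_days=30, purge_after_years=7):
    """Hypothetical stage of a sunset model on a given day, measured from its cutoff."""
    if today < cutoff:
        return "serving"
    if today < cutoff + timedelta(days=archive_after_days):
        return "read-only"  # traffic has already shifted to the replacement
    if today < add_years(cutoff, purge_after_years):
        return "archived"
    return "purged"


cutoff = date(2025, 6, 30)
for day in (date(2025, 6, 29), date(2025, 7, 15), date(2026, 1, 1), date(2032, 7, 1)):
    print(day, lifecycle_stage(cutoff, day))
```

Keeping the stage a function of dates, rather than a flag someone has to flip, is what removes the manual step.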

Open-source tools? Only 22% have any automated sunset capability. That’s why 41% of users on Capterra say their sunset workflows require "excessive manual intervention." Manual processes fail. Humans forget. Systems break.

UnitedHealth’s 2022 incident - where a biased model affected 2.3 million patients for 114 days - happened because no one knew which version was live, and no one had a process to kill it. That’s what happens when sunset policies are an afterthought.

Today, 89% of enterprise MLM platforms enforce automated sunsets. Only 22% of open-source tools do. The gap isn’t just technical - it’s legal.

Why This Matters More Than You Think

Model Lifecycle Management isn’t about tech. It’s about accountability.

Think about it: if a car’s brakes fail, you can trace it to the mechanic, the part, the inspection date. With AI, you can’t - unless you’ve built the same traceability.

Companies with strong MLM practices see:

- 37% fewer production incidents (MIT Technology Review)

- 42% fewer compliance violations (Forrester)

- 3.2x higher ROI on AI investments (Gartner)

And they’re not just avoiding disasters. They’re moving faster. When you know exactly which version is running, and why, you can experiment with confidence. You can roll back instantly. You can prove your model is fair, accurate, and compliant.

Dr. Jennifer Prendki put it best: "Without rigorous versioning that captures not just the model but its entire context, you’re building on sand."

What You Should Do Today

You don’t need a fancy platform to start. But you do need a plan.

Here’s a simple checklist to begin:

- Choose one model to pilot your MLM process. Start small - a recommendation engine or internal tool.

- Track every version with at least: code, data, metrics, and owner.

- Set a deprecation date for the current version. Put it in your calendar.

- Define a sunset date - 180 days after deprecation. Write it down.

- Automate the alert - use a simple script or Slack bot to notify teams when a model is about to expire.
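A minimal version of the alert step, assuming model dates live in a simple list of dicts (a hypothetical format). It flags models whose deprecation or sunset date falls within the next 14 days and, if a `SLACK_WEBHOOK_URL` environment variable is set, posts the summary to a Slack incoming webhook, which accepts a JSON body with a `text` field. The variable name, the data layout, and the 14-day horizon are assumptions.

```python
import json
import os
import urllib.request
from datetime import date, timedelta

SUNSET_AFTER = timedelta(days=180)  # sunset = deprecation + 180 days, per the checklist

# Hypothetical inventory; in practice this would come from your registry.
MODELS = [
    {"name": "recs-ranker", "version": "3.0.1", "owner": "growth", "deprecate_on": date(2025, 7, 1)},
    {"name": "churn-report", "version": "1.4.0", "owner": "analytics", "deprecate_on": date(2025, 1, 10)},
]


def upcoming_events(models, today, horizon_days=14):
    """Yield (model, event, date) for deprecations or sunsets inside the horizon."""
    horizon = today + timedelta(days=horizon_days)
    for m in models:
        events = {"deprecation": m["deprecate_on"], "sunset": m["deprecate_on"] + SUNSET_AFTER}
        for event, when in events.items():
            if today <= when <= horizon:
                yield m, event, when


def format_alert(model, event, when):
    return f"{model['name']} {model['version']} ({model['owner']}): {event} on {when.isoformat()}"


def post_to_slack(webhook_url, text):
    """POST a plain-text message to a Slack incoming webhook."""
    body = json.dumps({"text": text}).encode("utf-8")
    req = urllib.request.Request(webhook_url, data=body, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.status


if __name__ == "__main__":
    lines = [format_alert(*e) for e in upcoming_events(MODELS, date.today())]
    if lines:
        message = "Models nearing expiry:\n" + "\n".join(lines)
        print(message)
        url = os.environ.get("SLACK_WEBHOOK_URL")  # hypothetical variable name
        if url:
            post_to_slack(url, message)
```

Run it daily from cron or a CI schedule; the script itself holds no state, so a missed run only delays an alert.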

Don’t wait for a regulatory fine or a public scandal. Start today. The cost of building this system is less than the cost of fixing it after something goes wrong.

Tools That Help

You don’t have to build this from scratch. Here’s what’s working in 2025:

| Tool | Versioning Depth | Deprecation Support | Sunset Automation | Best For |
|---|---|---|---|---|
| MLflow (open-source) | Low (code + metrics) | Manual only | No | Small teams, research |
| AWS SageMaker | Medium (model package level) | Manual tagging | Yes (since May 2024) | AWS users, moderate compliance needs |
| ModelOp | High (6 dimensions) | Automated schedules | Yes, with legal hold | Finance, healthcare, regulated industries |
| Domino Data Lab | High (6 dimensions) | Automated schedules | Yes, with audit retention | Enterprise AI teams |

For most teams in regulated industries, open-source tools aren’t enough. The risk isn’t worth the savings.

What’s Coming Next

Federal contractors are expected to face minimum versioning standards under forthcoming NIST guidance. The EU AI Act is already enforcing this. By 2027, 92% of analysts predict mandatory versioning in all regulated sectors.

Forecasts for deprecation timelines disagree. Gartner expects 90-day model lifespans by 2026. Forrester expects longer ones, around 270 days, because companies are learning that frequent changes cause more disruption than outdated models.

The real winner? Organizations that treat models like regulated products - not experiments.

Versioning isn’t a feature. It’s a foundation. Deprecation isn’t a warning. It’s a policy. Sunset isn’t a cleanup task. It’s a legal obligation.

If you’re not doing this, you’re not managing AI. You’re just hoping it works.

What’s the difference between deprecation and sunset in model lifecycle management?

Deprecation means a model is no longer recommended for use and should be replaced - but it’s still active and accessible. Sunset means the model is permanently retired, taken offline, and often deleted or archived. Deprecation is a warning; sunset is the final removal.

Why can’t I just keep all model versions forever?

Keeping every version leads to "version sprawl," where teams can’t tell which model is live, increasing the risk of using outdated or biased models. It also creates compliance risks - regulators require you to retire models that no longer meet standards. Storage costs and audit complexity also balloon. Most enterprises retain only the top 3 performing versions plus the baseline as deployable options; older versions that must be kept for audit belong in read-only archives.
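The "top 3 plus baseline" rule is easy to make mechanical. A sketch, assuming each version has one comparable score (higher is better) and a designated baseline; `split_retention` is hypothetical, and the versions it doesn’t keep are meant for read-only archive rather than deletion.

```python
def split_retention(scores, baseline, keep_top=3):
    """Hypothetical rule: keep the top-N versions by score plus the baseline; archive the rest.

    Both returned lists are ordered from best to worst score.
    """
    ranked = sorted(scores, key=scores.get, reverse=True)
    keep_set = set(ranked[:keep_top]) | {baseline}
    keep = [v for v in ranked if v in keep_set]
    archive = [v for v in ranked if v not in keep_set]
    return keep, archive


scores = {"1.0": 0.81, "1.1": 0.86, "1.2": 0.84, "2.0": 0.89, "2.1": 0.88, "2.2": 0.83}
keep, archive = split_retention(scores, baseline="1.0")
print(keep)     # ['2.0', '2.1', '1.1', '1.0']
print(archive)  # ['1.2', '2.2']
```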

Do I need a paid platform to manage model versions properly?

For small teams or research projects, open-source tools like MLflow can work. But if you’re in finance, healthcare, or government - or if your model affects real people - you need an enterprise platform. Most open-source tools lack the automated deprecation, sunset enforcement, audit trails, and role-based access control that regulators require.

How do I know when to deprecate a model?

Deprecate when a new version shows statistically significant improvement in accuracy, fairness, or efficiency - or when the old model starts drifting (performance drops over time). Also deprecate if regulatory requirements change, or if the model’s data source is no longer valid. Never wait for a failure to trigger the decision.
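For "statistically significant improvement" in accuracy, one standard check when both versions are scored on the same holdout set is McNemar’s test, which looks only at the rows where the two models disagree. The sketch below uses the continuity-corrected chi-square form; the counts are made up for illustration, and both the 0.05 threshold and the `should_deprecate` rule are assumed conventions, not rules from this article.

```python
import math


def mcnemar_p_value(old_correct, new_correct):
    """Continuity-corrected McNemar test on paired per-row correctness flags.

    Returns (p_value, b, c): b = rows only the old model got right,
    c = rows only the new model got right.
    """
    b = sum(1 for o, n in zip(old_correct, new_correct) if o and not n)
    c = sum(1 for o, n in zip(old_correct, new_correct) if n and not o)
    if b + c == 0:
        return 1.0, b, c  # the models never disagree
    chi2 = max(0, abs(b - c) - 1) ** 2 / (b + c)
    # Upper tail of a chi-square with 1 degree of freedom: P(X > chi2) = erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2)), b, c


def should_deprecate(old_correct, new_correct, alpha=0.05):
    """Hypothetical rule: the new model wins more disagreements, significantly."""
    p, b, c = mcnemar_p_value(old_correct, new_correct)
    return c > b and p < alpha


# Illustrative holdout of 1,000 rows: the new model fixes 60 errors and introduces 25.
old = [True] * 1000
new = [True] * 1000
for i in range(60):
    old[i] = False  # old wrong, new right
for i in range(60, 85):
    new[i] = False  # old right, new wrong
print(mcnemar_p_value(old, new))
print(should_deprecate(old, new))
```

If the versions are evaluated on different test sets, a paired test like this no longer applies; compare them on one frozen holdout set instead.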

What happens if I don’t sunset a model?

You risk non-compliance with laws like the EU AI Act or FDA SaMD guidelines. You may face fines, legal action, or reputational damage. More commonly, you’ll accidentally reuse an outdated model - leading to biased decisions, customer harm, or operational failures. UnitedHealth’s 2022 incident, where a biased model affected 2.3 million patients, happened because no one sunset it.