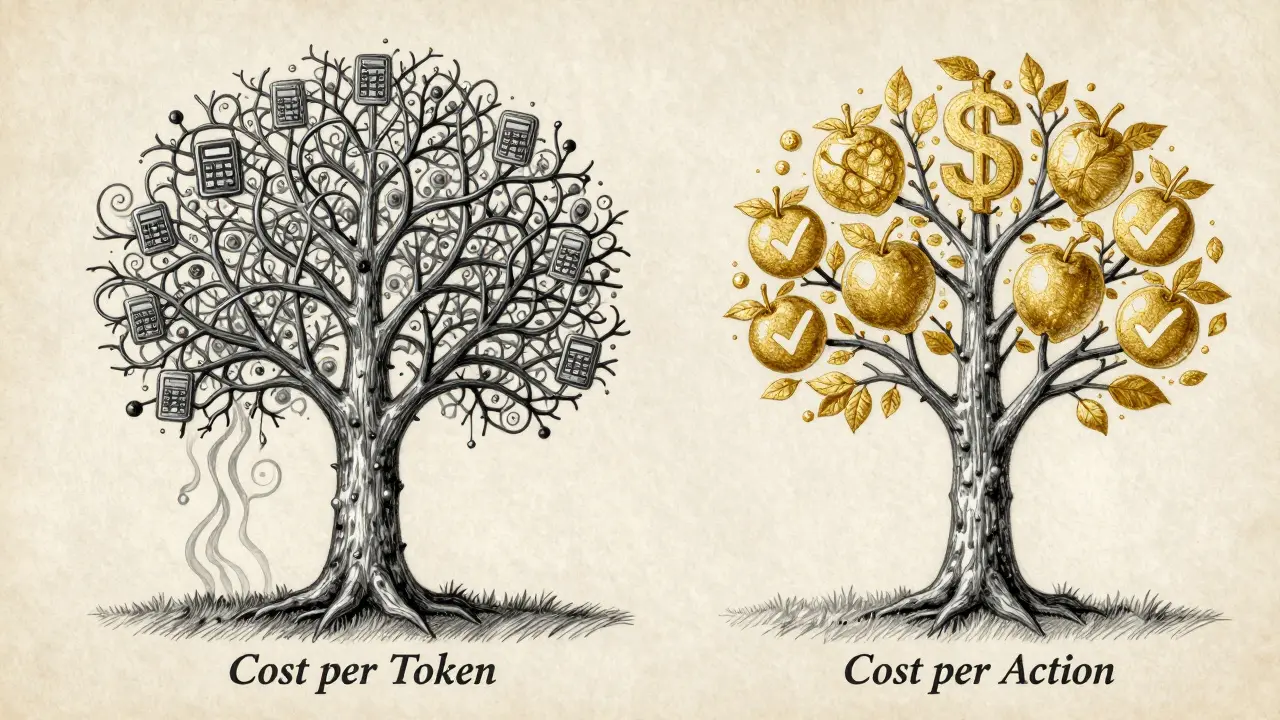

When you use an LLM like GPT-4 or Claude, you’re not paying for a conversation. You’re paying for tokens: tiny chunks of text the model processes. But what if you could pay for the result instead? Like paying $5 to get a contract reviewed, rather than $0.03 for every 1,000 tokens you send? That’s the difference between cost per token and cost per action. One is technical. The other is business-friendly. And right now, most companies are stuck with the first one, whether they like it or not.

How Cost per Token Actually Works

Cost per token is the default pricing model for every major LLM API: OpenAI, Anthropic, Google, and others. You’re charged for every token in your prompt and every token the model generates. A token isn’t a word; it’s often a piece of one. In English, 1,000 tokens is roughly 750 words, but the ratio isn’t consistent. A common word like "don’t" may be a single token, while "unbelievable" might be split into three, depending on the tokenizer. Numbers and punctuation count too.

Here’s the catch: input and output tokens cost different amounts. Anthropic’s Claude Sonnet 4.5 charges $3 per million input tokens but $15 per million output tokens, a five-times difference. Why? Generating text means predicting one token after another, which is slow, compute-heavy work. Reading your prompt is faster because it can be processed in parallel. So the pricing is asymmetric, and it isn’t intuitive.

And then there are "reasoning tokens." These are internal steps the model takes to think through a problem, like working out a legal argument or solving a math equation. Anthropic and others bill them as output tokens, at the higher output rate, even though you may never see the full reasoning in the response. A user on Reddit reported that their bill jumped 5x after processing legal documents because the model was generating large numbers of reasoning tokens per response. They didn’t know these tokens existed until the invoice arrived.
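To see why invisible reasoning tokens can move a bill so much, here is a minimal sketch that prices one response with and without them. It assumes the Sonnet 4.5 rates quoted above and that reasoning tokens are billed at the output rate; the 4,000-token reasoning figure is a hypothetical example, not a measured value.

```python
# Assumed rates in USD per million tokens (the Claude Sonnet 4.5 figures quoted above).
INPUT_RATE = 3.00
OUTPUT_RATE = 15.00


def response_cost(input_tokens: int, visible_output_tokens: int, reasoning_tokens: int = 0) -> float:
    """Return the USD cost of one response.

    Assumption: reasoning tokens are billed exactly like output tokens,
    even though they never appear in the visible answer.
    """
    billed_output = visible_output_tokens + reasoning_tokens
    return (input_tokens * INPUT_RATE + billed_output * OUTPUT_RATE) / 1_000_000


plain = response_cost(10_000, 500)
# Hypothetical: the same visible answer, plus 4,000 hidden reasoning tokens.
with_reasoning = response_cost(10_000, 500, reasoning_tokens=4_000)

print(f"without reasoning: ${plain:.4f}")
print(f"with reasoning:    ${with_reasoning:.4f} ({with_reasoning / plain:.1f}x)")
```

The visible answer is the same size in both cases; only the hidden token count changes.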

For a legal tech startup processing 1,000 contracts a month, each averaging 10,000 input tokens and 500 output tokens, the monthly cost at the Sonnet 4.5 rates above is about $37.50 ($30 for input, $7.50 for output). Sounds low? Now imagine 10,000 contracts ($375). Or 100,000 ($3,750). Or 1 million ($37,500). Costs grow in direct proportion to volume. And if your output suddenly goes from 500 tokens to 5,000 because the model over-explains? Your bill nearly triples overnight, from $37.50 to $105.
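The arithmetic above is easy to reproduce. This sketch assumes the same Sonnet 4.5 rates and the per-contract token counts from the example; swap in your own numbers to project a budget.

```python
# Assumed rates in USD per million tokens (Claude Sonnet 4.5 figures quoted above).
INPUT_RATE = 3.00
OUTPUT_RATE = 15.00


def monthly_cost(contracts: int, input_per_contract: int, output_per_contract: int) -> float:
    """Total monthly USD cost for a batch of contracts under per-token pricing."""
    input_cost = contracts * input_per_contract * INPUT_RATE / 1_000_000
    output_cost = contracts * output_per_contract * OUTPUT_RATE / 1_000_000
    return input_cost + output_cost


# Cost grows linearly with volume.
for volume in (1_000, 10_000, 100_000, 1_000_000):
    print(f"{volume:>9,} contracts: ${monthly_cost(volume, 10_000, 500):,.2f}")

# The over-explaining scenario: output grows from 500 to 5,000 tokens per contract.
baseline = monthly_cost(1_000, 10_000, 500)
verbose = monthly_cost(1_000, 10_000, 5_000)
print(f"500 -> 5,000 output tokens: ${baseline:.2f} -> ${verbose:.2f} ({verbose / baseline:.1f}x)")
```

Output is only 20% of the baseline bill, so a tenfold jump in output tokens raises the total about 2.8x rather than 10x.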

Why Cost per Token Feels Like a Trap

The biggest complaint from business teams? Unpredictability. You can’t budget for it. You don’t know how long a summary will be. You don’t know whether the model will get stuck in a loop, or add 200 extra tokens of fluff just to sound thorough.

Traceloop surveyed 247 enterprise AI teams in April 2025, and 68% said their biggest cost challenge was tracking token usage across features, users, and departments. They needed to know: "Is this $1,200 bill coming from customer support chats, or from internal reports?" But raw token counts don’t map to business outcomes. They map to API calls in code.
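One way to make token data answer the "support chats or internal reports?" question is to tag every API call with the feature that made it and roll costs up by tag. The sketch below assumes a hypothetical log format (the field names are illustrative, not Traceloop's or any other tool's schema) and the Sonnet 4.5 rates quoted earlier.

```python
from collections import defaultdict

# Assumed rates in USD per million tokens.
INPUT_RATE = 3.00
OUTPUT_RATE = 15.00

# Hypothetical usage log: one record per API call, tagged with the calling feature.
usage_log = [
    {"feature": "support_chat", "input_tokens": 1_200, "output_tokens": 300},
    {"feature": "support_chat", "input_tokens": 900, "output_tokens": 250},
    {"feature": "internal_reports", "input_tokens": 20_000, "output_tokens": 2_000},
]


def cost_by_feature(log: list[dict]) -> dict[str, float]:
    """Sum the USD cost of every call, grouped by its feature tag."""
    totals: dict[str, float] = defaultdict(float)
    for call in log:
        cost = (call["input_tokens"] * INPUT_RATE + call["output_tokens"] * OUTPUT_RATE) / 1_000_000
        totals[call["feature"]] += cost
    return dict(totals)


# Print features from most to least expensive.
for feature, cost in sorted(cost_by_feature(usage_log).items(), key=lambda kv: kv[1], reverse=True):
    print(f"{feature:<18} ${cost:.4f}")
```

The same grouping works for users or departments, as long as the tag is attached when the call is made.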

Developers can optimize. They can rewrite prompts to be shorter. They can cache responses so repeated questions are served without a new API call. One SaaS company cut their monthly LLM bill from $1,200 to $380 just by trimming prompts and reusing responses. But that’s not something a marketing manager or paralegal can do. They just want a contract summary. They shouldn’t need a computer science degree to get one.
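Response reuse can be as simple as a lookup table keyed on the prompt. This is a minimal in-memory sketch, assuming exact repeats (after lowercasing and collapsing whitespace) are safe to answer from storage; `fake_model` is a hypothetical stand-in for a real API call. This is application-level caching, which skips the call entirely; provider-side prompt caching instead discounts repeated prompt prefixes rather than making them free.

```python
import hashlib
from typing import Callable


class ResponseCache:
    """Minimal in-memory cache: identical (normalized) prompts reuse a stored answer."""

    def __init__(self, call_model: Callable[[str], str]):
        self._call_model = call_model  # any function mapping prompt -> response text
        self._store: dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt: str) -> str:
        # Lowercase and collapse whitespace so trivial variations share one entry.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def get(self, prompt: str) -> str:
        key = self._key(prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        response = self._call_model(prompt)  # only cache misses reach the (paid) model
        self._store[key] = response
        return response


calls: list[str] = []


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a paid API call; records each invocation."""
    calls.append(prompt)
    return f"answer to: {prompt}"


cache = ResponseCache(fake_model)
cache.get("What is your refund policy?")
cache.get("what is your  refund policy?")  # same after normalization: served from cache
cache.get("How do I reset my password?")
print(cache.hits, cache.misses, len(calls))
```

Lowercasing is a design choice: it raises the hit rate but would wrongly merge prompts where case matters, such as code or identifiers.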

And the documentation? It’s messy. OpenAI’s cost guide rates 4.2/5 on Capterra. Anthropic’s? 3.8. That gap isn’t about model quality; it’s about clarity. One explains how tokens work. The other doesn’t tell you why your bill spiked.

What Cost per Action Could Look Like

Cost per action flips the script. Instead of paying for tokens, you pay for a task. Contract review: $5. Summarize a 50-page report: $3. Extract all names and dates from an email: $1. No counting. No surprises.

This isn’t science fiction. Jasper.ai rolled out "Content Generation Packs" in April 2025: $20 for 10 blog posts. Harvey AI launched "Legal Task Units" in May: $12 per contract reviewed. These aren’t experiments; they’re products. And they’re selling.

The upside? Budgeting becomes simple. A legal team can say, "We allocated $5,000 for contract reviews this quarter," and know exactly how many they can process. No one needs to monitor API logs. No one needs to learn what a "token" is. The finance team doesn’t need to justify a $1,200 spike; they just see $12 per task.
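Under flat per-task prices, capacity planning is one division. This sketch uses the example prices from this section (plus Harvey's quoted $12 per contract) and keeps money in integer cents to avoid floating-point rounding; the task names are hypothetical labels.

```python
# Example per-task prices from this article, in integer cents.
PRICES_CENTS = {
    "contract_review": 500,    # $5
    "report_summary": 300,     # $3
    "email_extraction": 100,   # $1
    "legal_task_unit": 1_200,  # $12, Harvey's quoted per-contract price
}


def tasks_within_budget(budget_cents: int, task: str) -> int:
    """Number of whole tasks of one type that fit in a budget."""
    return budget_cents // PRICES_CENTS[task]


quarterly_budget = 5_000 * 100  # $5,000 expressed in cents
for task in PRICES_CENTS:
    print(f"{task:<17} {tasks_within_budget(quarterly_budget, task):>5}")
```

At $5 per review, the $5,000 quarter covers 1,000 contracts; at $12, it covers 416.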

But there’s a flip side. If your contract is short and simple, you still pay $12. If the model gets it right in 200 tokens, you still pay $12. If it takes 2,000 tokens? Still $12. For the provider, that’s risky: it absorbs the cost of every long or difficult task. For the customer, it’s a win, unless the task is complex and the model fails. Then you pay again.
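Both sides of this trade-off can be put into numbers. The sketch below assumes the $12 price from this section, the Sonnet 4.5 token rates quoted earlier, and a hypothetical 80% first-try success rate. If every failed attempt is billed again, the number of attempts until success is geometric with mean 1/p, so the customer's expected spend per successful task is price / p. The provider's margin is the flat price minus whatever tokens that particular task happened to use.

```python
def expected_customer_cost(price: float, success_rate: float) -> float:
    """Expected spend per successful task when each failed attempt is billed again.

    Attempts until the first success are geometrically distributed with
    mean 1 / success_rate, so the expected spend is price / success_rate.
    """
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return price / success_rate


def provider_margin(price: float, input_tokens: int, output_tokens: int,
                    input_rate: float = 3.00, output_rate: float = 15.00) -> float:
    """Flat price minus the token cost of one attempt (rates in USD per million tokens)."""
    token_cost = (input_tokens * input_rate + output_tokens * output_rate) / 1_000_000
    return price - token_cost


print(f"customer, 80% success: ${expected_customer_cost(12.00, 0.80):.2f} per successful review")
print(f"provider, short contract: ${provider_margin(12.00, 10_000, 500):.4f}")
print(f"provider, long contract:  ${provider_margin(12.00, 200_000, 2_000):.4f}")
```

Under these assumed numbers, a single attempt's token cost is a small slice of $12; the spread widens with retries, very long documents, and multi-step workflows.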

That’s why per-action pricing only works for well-defined tasks. You can’t say "write me a novel" and expect a fixed price. But "extract all dates from this invoice"? That’s perfect. "Classify this support ticket as billing, technical, or account"? Perfect.

Which Model Fits Your Use Case?

Here’s how to pick:

- Use cost per token if: You’re building something exploratory. You need flexible outputs. You’re testing ideas. Your prompts vary wildly. You have engineers who can optimize prompts and monitor usage. Startups and developers often fall here.

- Use cost per action if: You’re automating repetitive, well-defined tasks. You have non-technical teams using the system. You need predictable monthly costs. You’re in legal, HR, healthcare, or compliance. Enterprises with high-volume, low-variability workflows prefer this.

Google’s Vertex AI Model Optimizer is trying to bridge the gap. You don’t pick a model. You pick an outcome: "Low cost," "High accuracy," or "Balanced." The system picks the best model and pricing behind the scenes. It’s not per-action yet, but it’s moving that way.
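To make the "pick an outcome, not a model" idea concrete, here is a toy router. It is not how Vertex AI Model Optimizer works internally; the model names, prices, quality scores, and the 0.8 quality floor used for "balanced" are all invented for illustration.

```python
# Hypothetical catalog: every name and number here is invented for illustration.
CATALOG = [
    {"name": "small-model", "usd_per_m_output": 1.0, "quality": 0.70},
    {"name": "mid-model", "usd_per_m_output": 5.0, "quality": 0.85},
    {"name": "large-model", "usd_per_m_output": 15.0, "quality": 0.95},
]

BALANCED_QUALITY_FLOOR = 0.80  # assumed threshold for the "balanced" setting


def pick_model(outcome: str) -> str:
    """Map an outcome preference to a model name from the catalog."""
    if outcome == "low_cost":
        choice = min(CATALOG, key=lambda m: m["usd_per_m_output"])
    elif outcome == "high_accuracy":
        choice = max(CATALOG, key=lambda m: m["quality"])
    elif outcome == "balanced":
        # Cheapest model that still clears the quality floor.
        eligible = [m for m in CATALOG if m["quality"] >= BALANCED_QUALITY_FLOOR]
        choice = min(eligible, key=lambda m: m["usd_per_m_output"])
    else:
        raise ValueError(f"unknown outcome: {outcome!r}")
    return choice["name"]


for outcome in ("low_cost", "high_accuracy", "balanced"):
    print(outcome, "->", pick_model(outcome))
```

"Cheapest model above a quality floor" is only one way to define "balanced"; a real router could weigh cost, latency, and quality quite differently.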

The Future: Hybrid Models Are Coming

Per-token pricing isn’t going away. Not yet. According to Grumatic’s March 2025 data, 92% of LLM providers still use it as their main model. But the tide is turning.

Why? Because costs are dropping. Andreessen Horowitz found per-million-token prices fell 68% from late 2023 to early 2025. That gives providers room to experiment: they can bundle usage and offer flat-rate tiers for common tasks.

Look at what’s happening: Anthropic now offers "effort controls" that let you limit how many reasoning tokens the model uses. That’s not per-action pricing, but it’s a step toward outcome-based pricing. Providers are learning that customers don’t care about tokens. They care about results.
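Caps like these matter for budgeting because they turn an open-ended bill into a bounded one: if output (including any reasoning tokens) is capped, the worst-case cost of a request is known in advance. This sketch assumes the Sonnet 4.5 rates quoted earlier and that reasoning tokens count toward the output cap. In Anthropic's Messages API the related settings include `max_tokens` and, for extended thinking, `budget_tokens`; check the provider's current documentation for exactly how they interact.

```python
def worst_case_cost(input_tokens: int, max_output_tokens: int, requests: int = 1,
                    input_rate: float = 3.00, output_rate: float = 15.00) -> float:
    """Upper bound in USD when every request hits its output cap.

    Assumes reasoning tokens are billed as output and count toward max_output_tokens.
    Rates are in USD per million tokens.
    """
    per_request = input_tokens * input_rate + max_output_tokens * output_rate
    return requests * per_request / 1_000_000


# Hypothetical cap of 4,000 output tokens on the 10,000-token contracts from earlier.
print(f"per request:       ${worst_case_cost(10_000, 4_000):.2f}")
print(f"1,000 requests/mo: ${worst_case_cost(10_000, 4_000, requests=1_000):,.2f}")
```

Actual spend will usually sit below this ceiling, but the ceiling is what a finance team can plan around.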

By 2028, Binadox predicts 35% of enterprise LLM usage will run on per-action or hybrid pricing. Legal, healthcare, and finance will lead the shift. Why? Because those industries need audit trails, fixed budgets, and compliance. Tokens don’t give you that. Task pricing does.

What You Should Do Right Now

If you’re using LLMs today:

- Track your token usage. Use tools like Traceloop or Langfuse. Know your cost per task, even if you’re on token pricing.

- Look for providers offering fixed-price task bundles. Jasper and Harvey aren’t the only ones; check what Anthropic and Google are testing.

- Ask your vendor: "Can I pay per contract review, not per token?" If they say no, ask when they’ll offer it.

- For simple, repeatable tasks, start building your own "action" layer. Write a script that says: "If input is this, output is that, cost is $X." It’s not perfect, but it’s better than guessing.
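A homegrown action layer can start small: give each task type a flat internal price, and record what the tokens actually cost each time it runs. That yields a real cost-per-task figure and shows whether a flat price would cover actual usage. This is a minimal sketch; the class, the task names, and the $0.10 ticket price are hypothetical, and the token rates are the Sonnet 4.5 figures quoted earlier.

```python
from dataclasses import dataclass, field

# Assumed rates in USD per million tokens.
INPUT_RATE = 3.00
OUTPUT_RATE = 15.00


@dataclass
class ActionLedger:
    """Hypothetical action layer: flat price per task, real token cost recorded alongside."""
    prices: dict[str, float]  # task name -> flat internal price (USD)
    records: list[tuple[str, float, float]] = field(default_factory=list)

    def record(self, task: str, input_tokens: int, output_tokens: int) -> None:
        """Log one run of a task: its flat price and what its tokens actually cost."""
        if task not in self.prices:
            raise KeyError(f"no flat price defined for task {task!r}")
        token_cost = (input_tokens * INPUT_RATE + output_tokens * OUTPUT_RATE) / 1_000_000
        self.records.append((task, self.prices[task], token_cost))

    def report(self) -> dict[str, dict[str, float]]:
        """Per task: run count, total charged, total token cost, average token cost."""
        summary: dict[str, dict[str, float]] = {}
        for task, price, cost in self.records:
            row = summary.setdefault(task, {"count": 0, "charged": 0.0, "token_cost": 0.0})
            row["count"] += 1
            row["charged"] += price
            row["token_cost"] += cost
        for row in summary.values():
            row["avg_token_cost"] = row["token_cost"] / row["count"]
        return summary


ledger = ActionLedger(prices={"contract_review": 5.00, "ticket_classification": 0.10})
ledger.record("contract_review", 10_000, 500)
ledger.record("contract_review", 12_000, 800)
ledger.record("ticket_classification", 800, 10)

for task, row in ledger.report().items():
    print(f"{task}: {row['count']} runs, charged ${row['charged']:.2f}, "
          f"avg token cost ${row['avg_token_cost']:.4f}")
```

Rejecting tasks without a defined price keeps the layer limited to the well-defined, repeatable work that flat pricing suits.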

The goal isn’t to avoid tokens. It’s to stop letting them control your budget. The best LLM strategy isn’t about finding the cheapest model. It’s about aligning your pricing model with your business goals. Tokens are the engine. Outcomes are the destination. Stop paying for the engine. Start paying for the ride.