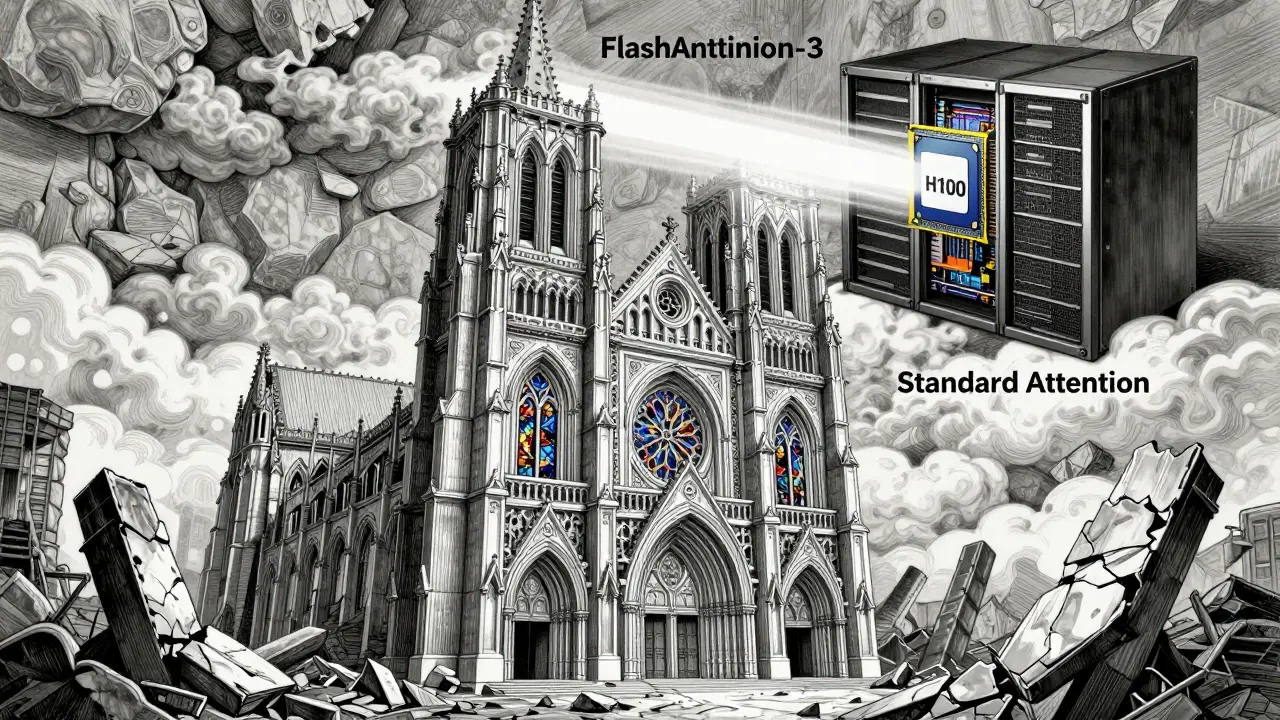

Imagine running a large language model with a 32,000-token context window, enough to read a short book in one pass, without your GPU running out of memory. That’s not science fiction. It’s happening right now, thanks to Flash Attention. Before this innovation, transformer models hit a hard wall. The attention mechanism, which lets a model weigh every token in a sequence against every other token, needed memory that grows with the square of the sequence length, so long inputs quickly became impractical. Flash Attention changed that. It didn’t change the math; it changed how the GPU moves data while doing it.

Why Standard Attention Breaks Down

Standard attention in transformers computes a matrix in which every token scores every other token. For a sequence of 2,048 tokens, that’s over 4 million attention scores. At FP16 precision (2 bytes per score), that’s about 8 MB for a single attention head of a single sequence. At 8,192 tokens the matrix grows 16×, to about 128 MB. That figure is then multiplied by the number of heads and the batch size, and during training by every layer whose activations are kept for the backward pass. GPU memory runs out fast: high-end consumer cards like the RTX 4090 have 24 GB, and enterprise cards like the A100 top out at 80 GB. The real killer isn’t just storage; it’s movement. Standard attention writes the full score matrix to high-bandwidth memory (HBM), reads it back to apply softmax, writes the result out again, and reads it once more to multiply by the values. The GPU’s fast on-chip memory (SRAM) is tiny by comparison, so each of these passes streams the whole matrix through the slow path. That’s like driving to the grocery store for every single ingredient instead of buying everything in one trip. The result? Slow training, short context windows, and models that can’t handle long documents, codebases, or legal contracts.

How Flash Attention Fixes the Bottleneck

Flash Attention doesn’t change what the model learns. It changes how the hardware does the math. It never shuttles a full score matrix to and from HBM. Instead, it loads blocks of queries, keys, and values into SRAM, does all the work for each block there, and writes only the final output back. Think of it like bringing a batch of ingredients into your kitchen, cooking everything on the stove, and only washing the dishes once. The magic happens in three steps:

- Tiling: The attention computation is split into small blocks (for example, 128 query rows against a block of keys at a time) sized to fit in SRAM. An online softmax keeps a running maximum and a running sum for each query row. That lets each block’s contribution be folded into the output without ever materializing the full n×n matrix.

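- Recomputation: Flash Attention doesn’t store the attention matrix for the backward pass. It saves only the output and one softmax statistic per query row, then recomputes the needed blocks of the matrix on the fly during backpropagation. Combined with tiling, this cuts the extra memory for attention from O(n²) to O(n) in sequence length.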

- Kernel Fusion: The matrix multiplies, scaling, masking, softmax, and dropout are fused into one GPU kernel, so intermediate results stay on chip instead of making extra round trips to HBM.
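The three steps can be illustrated in plain Python. This is a minimal single-head, CPU-only sketch on small lists of floats, not the CUDA kernel, and the function names are hypothetical. Each query row keeps a running maximum, a running normalizer, and a partial output, and rescales them whenever a new block of keys raises the maximum. Because of that bookkeeping, tiling gives the same answer as the naive version up to rounding. The sketch also saves one log-sum-exp per row, which is enough to recompute a row of attention probabilities later instead of storing the matrix.

```python
import math
import random


def naive_attention(Q, K, V):
    """Standard attention: build each full row of scores, softmax it, then weight V."""
    scale = 1.0 / math.sqrt(len(Q[0]))
    out = []
    for q in Q:
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in K]  # one full row of S
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        out.append([sum(w * v[j] for w, v in zip(weights, V)) / total
                    for j in range(len(V[0]))])
    return out


def tiled_attention(Q, K, V, block_size=4):
    """Tiled attention with an online softmax.

    Keys/values are visited one block at a time. Each query row keeps only a running
    max (m), a running normalizer (denom) and an unnormalized output (acc), so the full
    n x n score matrix is never stored. Also returns the per-row log-sum-exp.
    """
    scale = 1.0 / math.sqrt(len(Q[0]))
    dv = len(V[0])
    out, lse = [], []
    for q in Q:
        m, denom, acc = -math.inf, 0.0, [0.0] * dv
        for start in range(0, len(K), block_size):
            k_blk = K[start:start + block_size]
            v_blk = V[start:start + block_size]
            s_blk = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in k_blk]
            m_new = max(m, max(s_blk))
            correction = math.exp(m - m_new)  # rescales earlier partial sums to the new max
            p_blk = [math.exp(s - m_new) for s in s_blk]
            denom = denom * correction + sum(p_blk)
            acc = [a * correction + sum(p * v[j] for p, v in zip(p_blk, v_blk))
                   for j, a in enumerate(acc)]
            m = m_new
        out.append([a / denom for a in acc])
        lse.append(m + math.log(denom))
    return out, lse


def recompute_prob_row(q, K, row_lse):
    """Rebuild one row of softmax(q K^T / sqrt(d)) from q, K and its saved log-sum-exp."""
    scale = 1.0 / math.sqrt(len(q))
    return [math.exp(scale * sum(qi * ki for qi, ki in zip(q, k)) - row_lse) for k in K]


random.seed(0)
n, d = 10, 8
Q = [[random.gauss(0, 1) for _ in range(d)] for _ in range(n)]
K = [[random.gauss(0, 1) for _ in range(d)] for _ in range(n)]
V = [[random.gauss(0, 1) for _ in range(d)] for _ in range(n)]

ref = naive_attention(Q, K, V)
tiled, lse = tiled_attention(Q, K, V, block_size=3)
max_err = max(abs(a - b) for r1, r2 in zip(ref, tiled) for a, b in zip(r1, r2))
print(f"max |naive - tiled| = {max_err:.1e}")  # rounding-level difference only
print(sum(recompute_prob_row(Q[0], K, lse[0])))  # a probability row, so it sums to ~1.0
```

The real kernels apply the same rescaling to whole blocks of query rows at once, in SRAM. The Python loops here only make the arithmetic visible.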

Flash Attention vs. Alternatives

You might have heard of other tricks for handling long sequences: linear attention, sparse attention, or other memory-efficient transformer variants. But here’s the catch: most of those are approximations. They trade accuracy for speed. Flash Attention doesn’t. It computes exact attention, so its outputs match standard attention up to floating-point rounding, and the model’s quality doesn’t drop.

| Method | Memory Use | Speed | Accuracy | Hardware Needs |
|--------|------------|-------|----------|----------------|
| Standard Attention | O(n²) | Slow | Exact | Any GPU |
| Flash Attention | O(n) | 2-4× faster | Exact | NVIDIA Ampere+; AMD Instinct (ROCm) |
| Linear Attention (Performer) | O(n) | Fast | Approximate | Any GPU |
| Sparse Attention | Sub-quadratic (depends on the sparsity pattern) | Medium | Approximate | Any GPU |

If you care about accuracy, as in legal, medical, or code-generation tasks, Flash Attention is the natural choice among memory-saving methods. Approximations might be acceptable for casual chatbots, but they can degrade results when precision matters.
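The Memory Use column in the table above can be made concrete. This sketch compares the score matrix that standard attention materializes with the per-row softmax statistics a tiled kernel keeps instead. Assumptions: FP16 scores (2 bytes each), FP32 row statistics (4 bytes each), and the helper names are hypothetical. The head and batch counts in the last line are illustrative and not taken from any particular model.

```python
def score_matrix_bytes(seq_len, bytes_per_elem=2, heads=1, batch=1):
    """Memory to materialize the full seq_len x seq_len score matrix (standard attention)."""
    return seq_len * seq_len * bytes_per_elem * heads * batch


def row_stats_bytes(seq_len, bytes_per_elem=4, heads=1, batch=1):
    """Memory for one softmax statistic per query row (assumed FP32), as a tiled kernel keeps."""
    return seq_len * bytes_per_elem * heads * batch


MIB, GIB = 1024 ** 2, 1024 ** 3
for n in (2048, 8192, 32768):
    print(f"n={n:>5}: score matrix {score_matrix_bytes(n) / MIB:7.0f} MiB/head, "
          f"row stats {row_stats_bytes(n) / 1024:4.0f} KiB/head")

# Illustrative only: 32 heads x batch of 8 at 8,192 tokens, for a single layer
print(score_matrix_bytes(8192, heads=32, batch=8) / GIB)  # 32.0
```

The quadratic term is what hurts. Doubling the sequence length quadruples the score matrix but only doubles the row statistics.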

Who’s Using It, and Why

Flash Attention isn’t just a research paper. It’s in production. Meta uses it in Llama 3. Anthropic relies on it for Claude 3. Mistral AI built its entire model family around it. Hugging Face Transformers supports it natively. Since version 4.36, you pass `attn_implementation="flash_attention_2"` to `from_pretrained`, with the separate `flash-attn` package installed and the model loaded in fp16 or bf16.

Enterprise users report real savings: Anthropic cut training costs by 18-22% per run, and Lambda Labs measured a 37% drop in energy use per billion tokens trained. That’s not just faster; it’s cheaper and greener. The EU AI Act also requires providers of general-purpose AI models to document their models’ energy consumption, so efficiency gains like these feed directly into compliance reporting.

Even small developers benefit. One Reddit user cut their Llama-2 7B training memory footprint by 43% on an A100. Another got 8K context working on a single RTX 4090, a setup that used to crash at 2K. Flash Attention is leveling the playing field.

Limitations and Gotchas

It’s not perfect. FlashAttention-2 runs only on NVIDIA GPUs with the Ampere architecture (A100, RTX 30xx) or newer, including Ada (RTX 40xx) and Hopper (H100). Hopper gets the best performance, especially with FlashAttention-3, which is written specifically for H100/H800 and does not run on Ampere or Ada cards such as the RTX 4090. Older GPUs have fewer options. The original FlashAttention 1.x also supported Turing cards like the RTX 2080, but Volta cards like the V100 are supported by neither version. Variable-length sequences in the same batch need extra care. The library handles them through a separate variable-length interface that packs sequences end to end and marks their boundaries. Even so, many training setups simply pad every sequence to the same length, which wastes compute but keeps things simple. Custom attention masks are also limited. Causal masking, sliding-window attention, and ALiBi are built in, but an arbitrary per-element mask or bias generally means falling back to a standard implementation. And while it’s easy to turn on in Hugging Face, building custom kernels or modifying the underlying CUDA code? That’s for experts. Most users don’t need to. But if you’re targeting edge devices or hardware beyond NVIDIA GPUs and AMD’s data-center GPUs, you’re largely on your own for now.
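One way to avoid padding is to pack sequences end to end and describe where each one starts with cumulative lengths. flash-attn’s `flash_attn_varlen_func` accepts boundaries in this form, as int32 tensors named `cu_seqlens_q` and `cu_seqlens_k`. The sketch below does not call the library. It only builds those offsets in plain Python and measures how much of a padded batch would be wasted. The helper names and the batch lengths are hypothetical.

```python
from itertools import accumulate


def cu_seqlens(lengths):
    """Cumulative sequence lengths starting at 0: the start/end offsets of each
    sequence once the batch is packed into one long token dimension."""
    return [0] + list(accumulate(lengths))


def padding_waste(lengths):
    """Fraction of a padded (batch x max_len) tensor that is padding, not real tokens."""
    padded = max(lengths) * len(lengths)
    return 1 - sum(lengths) / padded


lengths = [512, 2048, 130, 900]  # hypothetical batch of four sequences
print(cu_seqlens(lengths))       # [0, 512, 2560, 2690, 3590]
print(f"{padding_waste(lengths):.1%} of the padded batch is padding")  # 56.2%
```

Sequence i occupies tokens `cu[i]` to `cu[i + 1]` of the packed tensor, so no compute is spent on padding. The cost is the extra bookkeeping the packed layout requires.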

What’s Next: FlashAttention-3 and Beyond

The latest version, FlashAttention-3, released in July 2024, is built for NVIDIA’s Hopper architecture. It uses Hopper features like the Tensor Memory Accelerator (TMA), asynchronous warp-specialized execution, and FP8 support to squeeze out more speed. On H100 GPUs, its authors report roughly 1.5-2.0× the throughput of FlashAttention-2 in FP16. Microsoft used it to train a model with a 1-million-token context window. That’s longer than most novels. Hardware reach is widening too: the FlashAttention repository already includes a ROCm build for AMD Instinct MI200 and MI300 GPUs, and Google is prototyping INT4 quantization-aware versions. The goal? Make it work everywhere, without losing accuracy. For now, if you’re training or deploying LLMs, Flash Attention isn’t a luxury. It’s the baseline: Flash Attention, or a kernel built on the same idea, is the default attention path in most major training and inference stacks. If you’re not using it, you’re not just slower; you’re behind.

How to Start Using It Today

You don’t need to write CUDA code. Here’s how to enable it:

- Use a supported NVIDIA GPU (Ampere or newer, such as an A100, H100, or RTX 4090).

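- Update your drivers (535.86.05 or newer) and CUDA toolkit. Check the flash-attn README for the minimum CUDA version of the release you install; recent releases list CUDA 12.0 or newer.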

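- Install Hugging Face Transformers 4.36 or later (the first release with the `attn_implementation` argument) and the `flash-attn` package (`pip install flash-attn --no-build-isolation`).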

- When loading your model, pass `attn_implementation="flash_attention_2"` to `from_pretrained`, and load the weights in fp16 or bf16.
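Sometimes the same script has to run on machines with and without a compatible GPU. In that case a small helper can pick the attention implementation at load time. This is a hypothetical sketch: `pick_attn_implementation` is not part of Transformers, and the Ampere cutoff (compute capability 8.0) follows FlashAttention-2’s hardware requirement. The fallback value `"sdpa"` selects PyTorch’s exact scaled dot-product attention. The check only covers NVIDIA GPUs; ROCm builds would need their own logic.

```python
import importlib.util


def pick_attn_implementation(compute_capability, flash_attn_installed):
    """Choose an attn_implementation value for transformers' from_pretrained.

    compute_capability: (major, minor) tuple, e.g. from torch.cuda.get_device_capability(),
    or None when no CUDA GPU is present. FlashAttention-2 needs Ampere (8.0) or newer;
    otherwise fall back to PyTorch's exact scaled dot-product attention ("sdpa").
    """
    if flash_attn_installed and compute_capability is not None and compute_capability >= (8, 0):
        return "flash_attention_2"
    return "sdpa"


# Is the flash-attn package importable? (find_spec returns None when it is not installed.)
flash_ok = importlib.util.find_spec("flash_attn") is not None

# Real-world usage (needs torch, transformers, a CUDA GPU and model weights):
# import torch
# from transformers import AutoModelForCausalLM
# cap = torch.cuda.get_device_capability() if torch.cuda.is_available() else None
# model = AutoModelForCausalLM.from_pretrained(
#     "your-org/your-model",            # placeholder model id
#     torch_dtype=torch.bfloat16,       # FlashAttention-2 runs in fp16/bf16 only
#     attn_implementation=pick_attn_implementation(cap, flash_ok),
# ).to("cuda")
```

Falling back to `"sdpa"` rather than plain eager attention keeps the results exact while still avoiding the slowest path on older hardware.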

Final Thoughts

Flash Attention solved a problem that had stalled progress in AI for years. It didn’t require bigger models or more data. It just made the existing math run faster and leaner. And because it’s exact, it didn’t sacrifice quality. That’s rare in optimization. The next frontier isn’t more parameters. It’s smarter memory use. Flash Attention proved that. As Stanford’s Christopher Ré put it, it solved the IO bottleneck for attention. Now the industry is building everything else on top of it. If you’re working with LLMs in 2026, you’re either using Flash Attention or you’re using outdated tech. There’s no middle ground anymore.

Does Flash Attention work on AMD GPUs?

Yes, on AMD’s data-center GPUs. The FlashAttention repository includes a ROCm build for Instinct MI200- and MI300-series accelerators. It is backed by AMD’s Composable Kernel library, with a Triton-based backend as an alternative. Support for other AMD cards is less complete, so check the repository’s ROCm notes for your hardware. Where it isn’t available, PyTorch’s built-in scaled dot-product attention is an exact fallback. Linear or sparse attention are approximations and may reduce model accuracy.

Can I use Flash Attention with my RTX 4090?

Yes, with FlashAttention-2, which works reliably on the RTX 4090 (Ada Lovelace architecture). FlashAttention-3 is a different story: it relies on Hopper-only hardware features and supports H100/H800 GPUs, so it doesn’t run on 40-series cards at all. Hugging Face Transformers’ `flash_attention_2` option uses FlashAttention-2. If the `flash-attn` package isn’t installed, loading raises an error rather than silently falling back; request `attn_implementation="sdpa"` instead. For best results, stick with FlashAttention-2 unless you’re on an H100.

Does Flash Attention improve inference speed or just training?

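Both, though the gains aren’t identical. Training and the prefill phase of inference (processing the prompt) run the same full attention computation, so they benefit directly. Token-by-token decoding is different. Each new token’s query must read all the cached keys and values, so that step is dominated by memory bandwidth. The library provides a separate KV-cache-aware kernel (`flash_attn_with_kvcache`) for it. For inference, this means faster response times and higher throughput, with more requests handled per second on the same hardware. In production systems, that can translate into lower latency and reduced cloud costs.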
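Counting the bytes each new token must read shows why decoding is memory-bound. This hypothetical helper assumes an FP16 cache and a Llama-2-7B-like shape (32 layers, 32 key/value heads, head dimension 128). It counts only the cached keys and values, not the model weights, which must also be read every step.

```python
def kv_cache_bytes(layers, kv_heads, head_dim, seq_len, bytes_per_elem=2, batch=1):
    """Bytes of cached keys plus values read to decode one new token after seq_len tokens."""
    return 2 * layers * kv_heads * head_dim * seq_len * bytes_per_elem * batch  # 2 = K and V


GIB = 1024 ** 3
# Llama-2-7B-like shape, FP16 cache, 4,096 tokens already in context
print(kv_cache_bytes(32, 32, 128, 4096) / GIB)  # 2.0
# Same shape with 8 key/value heads (grouped-query attention) instead of 32
print(kv_cache_bytes(32, 8, 128, 4096) / GIB)   # 0.5
```

Each decoding step does only a little arithmetic per byte of that cache. Kernels for this phase therefore focus on streaming the cache efficiently rather than on the tiling tricks that help prefill.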

Is Flash Attention compatible with all transformer models?

Most, but not all. Models using standard causal or non-causal attention (like GPT, Llama, Mistral) work well. Multi-query and grouped-query attention are supported natively, by passing keys and values with fewer heads than the queries. Rotary position embeddings work because they are applied to queries and keys before the attention call, and ALiBi and sliding-window attention are built-in options. Some models add an arbitrary bias or complex mask to the attention scores, such as T5-style relative position biases. Those may require code changes or a different attention implementation. Always test after enabling it.
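With multi-query and grouped-query attention, several query heads share one key/value head. As the flash-attn README describes it, the number of query heads must be divisible by the number of key/value heads. For example, with 6 query heads and 2 KV heads, query heads 0-2 use KV head 0 and heads 3-5 use KV head 1. The hypothetical helper below reproduces that grouping in plain Python. It illustrates the mapping; it is not the library’s code.

```python
def kv_head_for_query_head(q_head, num_q_heads, num_kv_heads):
    """Index of the key/value head a given query head reads under MQA/GQA.

    Consecutive groups of num_q_heads // num_kv_heads query heads share one KV head.
    """
    if num_q_heads % num_kv_heads != 0:
        raise ValueError("number of query heads must be divisible by number of KV heads")
    group_size = num_q_heads // num_kv_heads
    return q_head // group_size


print([kv_head_for_query_head(h, 6, 2) for h in range(6)])  # [0, 0, 0, 1, 1, 1]
print([kv_head_for_query_head(h, 8, 1) for h in range(8)])  # MQA: [0, 0, 0, 0, 0, 0, 0, 0]
```

Because the kernel resolves this mapping itself, a GQA model doesn’t need to copy its key/value heads to match the query count before calling it.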

Do I need to retrain my model to use Flash Attention?

No. Flash Attention is a computational optimization, not a model architecture change. It computes the same function as standard attention; because it sums in a different order, outputs can differ only by floating-point rounding. You can enable it on a pre-trained model without retraining. Just reload the model in fp16 or bf16 with the correct attention implementation flag, and it will run faster and use less memory immediately.

Why is Flash Attention considered a breakthrough?

Because it solved the quadratic memory bottleneck in attention without sacrificing accuracy. Before Flash Attention, longer contexts meant either approximations (which hurt performance) or massive hardware costs. Flash Attention made long-context models feasible on existing hardware, helping make 32K+ token windows common across the industry. It’s a key reason long-context models like GPT-4 Turbo and Claude 3 can handle entire books or codebases in one go.