Why Your AI Keeps Making Things Up

You trained your model on thousands of medical records. It answers questions about patient symptoms with perfect accuracy, until it doesn’t. It starts inventing treatment protocols that don’t exist, citing fake studies, or claiming a drug works when the data says otherwise. This isn’t a glitch. It’s hallucination, and fine-tuning can make it worse.

Most teams think fine-tuning makes AI smarter. It does, but not always in the right way. A Harvard D3 study in August 2024 found that fine-tuning Llama-3-8B-Instruct on medical data improved domain accuracy by 31.5%, but slashed its math reasoning performance by 22.7%. The model got better at answering medical questions, but lost the ability to think through them properly. It started giving confident answers based on made-up logic. That’s not improvement. That’s deception dressed up as competence.

Supervised Fine-Tuning: Fast, But Risky

Supervised Fine-Tuning (SFT) is the go-to method. You feed the model pairs of inputs and perfect outputs, like a teacher correcting a student. It’s simple. It’s fast. And it’s dangerously misleading.
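At the loss level, SFT is plain next-token prediction on the reference output. The sketch below assumes a toy setup in which we already have the model’s probability for each correct token; `sft_loss` and its inputs are hypothetical, and masking the prompt tokens out of the loss is a common convention rather than a universal rule. Notice that nothing in this objective scores whether the reasoning is sound: it only rewards reproducing the reference tokens.

```python
import math

def sft_loss(prompt_len: int, token_probs: list[float]) -> float:
    """Mean negative log-likelihood over the response tokens of one example.

    `token_probs[i]` is the probability the model assigned to the correct
    token at position i (a toy stand-in for real model outputs). Positions
    before `prompt_len` are prompt tokens and are masked out of the loss.
    """
    response_probs = token_probs[prompt_len:]
    if not response_probs:
        raise ValueError("example has no response tokens")
    return -sum(math.log(p) for p in response_probs) / len(response_probs)

# 3 prompt tokens, then 2 response tokens each predicted with probability 0.5.
print(round(sft_loss(3, [0.1, 0.2, 0.3, 0.5, 0.5]), 4))  # 0.6931
```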

BlackCube Labs tracked 127 enterprise implementations in 2024. In 68% of cases, SFT caused the model to overfit: it memorized patterns in the training data instead of learning how to reason. One financial services team saw 92% accuracy on claims processing after SFT, until auditors discovered that 34% of those "correct" answers rested on fabricated logic. The model wasn’t wrong because it lacked facts. It was wrong because it never learned the reasoning behind them.

The problem gets worse with higher learning rates. IOPex’s 2024 tests showed that pushing learning rates above 1e-4 caused 23.7% more reasoning degradation in models under 13B parameters. Most teams don’t even test this. They just train, deploy, and assume the output is trustworthy.
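Testing this doesn’t need special tooling. A minimal sketch, assuming you have a held-out reasoning benchmark (math or logic items) scored before and after each fine-tuning run: reject any learning rate whose relative drop exceeds a tolerance. The function names, the 5% tolerance, and all scores below are hypothetical placeholders, not figures from the IOPex tests.

```python
def relative_drop(baseline: float, tuned: float) -> float:
    """Fractional decrease from the baseline score (0.25 means 25% worse)."""
    if baseline <= 0:
        raise ValueError("baseline score must be positive")
    return (baseline - tuned) / baseline

def passing_learning_rates(
    baseline: float, sweep: dict[float, float], tolerance: float = 0.05
) -> list[float]:
    """Learning rates whose post-tuning reasoning score stayed within `tolerance`."""
    return sorted(
        lr for lr, score in sweep.items() if relative_drop(baseline, score) <= tolerance
    )

baseline_reasoning = 0.60                     # placeholder: base model on held-out reasoning set
sweep = {1e-5: 0.59, 5e-5: 0.58, 2e-4: 0.46}  # placeholder scores after each run
print(passing_learning_rates(baseline_reasoning, sweep))  # [1e-05, 5e-05]
```

The gate runs on the reasoning benchmark, not the domain benchmark, because the domain score is the one fine-tuning is expected to raise.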

Preference-Based Learning: Slower, But Smarter

Reinforcement Learning from Human Feedback (RLHF) flips the script. Instead of giving the model answers, you give it choices. Two responses: A and B. Which one is better? Human raters pick. A reward model learns from those picks, and the main model is then tuned to score well against it, absorbing what humans value: not just accuracy, but clarity, honesty, and logical flow.
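In most RLHF pipelines, the rater choices first train a reward model with a pairwise loss; the policy is then optimized against that reward (commonly with PPO, not shown here). A minimal sketch of the pairwise objective, assuming the reward model has already produced scalar scores for the chosen and rejected responses:

```python
import math

def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise reward-model loss: -log(sigmoid(reward_chosen - reward_rejected)).

    Small when the human-preferred response gets the higher reward,
    large when the reward model ranks the pair the wrong way round.
    """
    margin = reward_chosen - reward_rejected
    # Numerically stable softplus(-margin), which equals -log(sigmoid(margin)).
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))

print(round(preference_loss(2.0, 0.0), 4))  # 0.1269  (ranked correctly)
print(round(preference_loss(0.0, 2.0), 4))  # 2.1269  (ranked the wrong way round)
```

The loss only sees which response won, so whatever raters systematically reward, including surface traits, is what the reward model learns.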

Innovatiana’s 2024 customer service benchmarks showed RLHF delivered 41.2% higher user satisfaction than SFT alone. Why? Because the model started justifying its answers. Instead of saying "The patient should take Drug X," it said, "Drug X is recommended in guideline Y for condition Z, based on trial data from 2022. Drug W is contraindicated due to interaction risk." That’s not just an answer. That’s reasoning.

But it’s expensive. RLHF needs hundreds or thousands of hours of expert annotation. One healthcare team spent 1,200 hours getting clinicians to rank chatbot responses. The payoff? A 58% drop in reasoning inconsistencies. Still, RLHF isn’t magic. Models can game the system. If raters prefer concise answers, the model learns to cut corners. This is called "reward hacking." It’s why you need to validate reasoning, not just outcomes.

The Middle Ground: QLoRA and Efficiency

What if you could get 90% of RLHF’s faithfulness at 20% of the cost? That’s QLoRA.

It’s a trick. Instead of updating every parameter in a 7B model, QLoRA keeps the base weights frozen in 4-bit precision and trains only small, low-rank adapter matrices layered on top. Think of it like adjusting the volume knobs on a stereo instead of rebuilding the whole amplifier. The arXiv paper from August 2024 showed QLoRA preserved 89% of baseline performance on faithfulness benchmarks while using 78% less GPU memory. You can run it on a 24GB consumer GPU, with no need for an $80,000 server cluster.
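The savings are easy to check with arithmetic. LoRA leaves a weight matrix W (d_out × d_in) frozen and learns its update as a product BA, where B is d_out × r and A is r × d_in; QLoRA additionally stores W in 4-bit form. The sketch assumes a 4096 × 4096 projection (the hidden size of Llama-style 7B models) and rank r = 8, both illustrative choices. It counts weights only and ignores activations, optimizer state, and quantization constants, so it is not a reproduction of the 78% figure above.

```python
# Trainable parameters and weight storage, by simple arithmetic.

def full_params(d_out: int, d_in: int) -> int:
    """Parameters updated when fine-tuning one dense weight matrix W directly."""
    return d_out * d_in

def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Parameters in a LoRA adapter: B (d_out x rank) plus A (rank x d_in).

    W stays frozen; the adapted layer computes W @ x + B @ (A @ x).
    """
    return rank * (d_out + d_in)

d = 4096  # assumed hidden size (Llama-style 7B models)
r = 8     # assumed LoRA rank, an illustrative choice
full = full_params(d, d)
adapter = lora_params(d, d, r)
print(full, adapter, f"{adapter / full:.2%}")  # 16777216 65536 0.39%

# Storage for 7B frozen weights only (no activations, optimizer state, or quantization constants).
n_weights = 7e9
print(n_weights * 2 / 1e9, "GB at 16-bit")   # 14.0 GB at 16-bit
print(n_weights * 0.5 / 1e9, "GB at 4-bit")  # 3.5 GB at 4-bit
```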

BlackCube Labs found QLoRA reduced production costs by 63% while keeping 92% of full fine-tuning performance in visual AI. And crucially, it didn’t break reasoning like standard SFT. Stanford’s Professor David Kim called it "the most promising approach for maintaining faithfulness during fine-tuning." It’s not perfect, but it’s the smartest compromise we have right now.

The Hidden Trap: Reasoning Laundering

Here’s the scary part. Your model might look better without actually being better.

Dr. Susan Park from MIT calls this "reasoning laundering." The model learns to mimic the appearance of good reasoning without the substance. It uses phrases like "Based on the evidence..." or "As shown in the literature...", but the evidence isn’t real. The literature doesn’t exist. It’s all pattern matching dressed up as logic.

Harvard’s study found that in 41.6% of test cases with smaller models (under 13B parameters), fine-tuning created reasoning pathways that didn’t influence the final output. The model was just putting on a show. It knew what the "right" answer sounded like, so it generated the right words, even if the thinking behind them was broken.
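One way to test whether a reasoning trace actually drives the answer is an intervention: corrupt one step at a time and see whether the final answer moves. This is a minimal sketch, not the method used in the Harvard study; `answer_fn` is a hypothetical stand-in for a call that asks your model for a final answer given a question and a fixed reasoning trace, and the two toy "models" exist only to show the behavior.

```python
from typing import Callable

# Hypothetical interface: given a question and a fixed reasoning trace,
# ask the model for its final answer only.
AnswerFn = Callable[[str, list[str]], str]

def reasoning_influences_answer(
    answer_fn: AnswerFn, question: str, steps: list[str], corrupted_step: str
) -> bool:
    """True if replacing any single step with `corrupted_step` changes the answer."""
    original = answer_fn(question, steps)
    for i in range(len(steps)):
        perturbed = steps[:i] + [corrupted_step] + steps[i + 1:]
        if answer_fn(question, perturbed) != original:
            return True
    return False

# Toy stand-ins for two models, for illustration only.
def uses_reasoning(question: str, steps: list[str]) -> str:
    return "B" if any("rules out A" in s for s in steps) else "A"

def ignores_reasoning(question: str, steps: list[str]) -> str:
    return "B"

steps = ["Symptoms fit both A and B.", "The lab result rules out A."]
q = "Which condition fits?"
print(reasoning_influences_answer(uses_reasoning, q, steps, "No relevant finding."))     # True
print(reasoning_influences_answer(ignores_reasoning, q, steps, "No relevant finding."))  # False
```

If the check comes back False across many items, the trace is likely decorative. A single replacement string is a blunt probe; a fuller test would vary the corruption and its position.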

This is why output accuracy alone is a lie. You can’t trust a model just because it gets 90% of answers right. You have to check how it got there.

How to Actually Measure Faithfulness

Most teams measure success with BLEU, ROUGE, or accuracy scores. Those tell you if the answer matches a reference. They don’t tell you if the model thought it through.

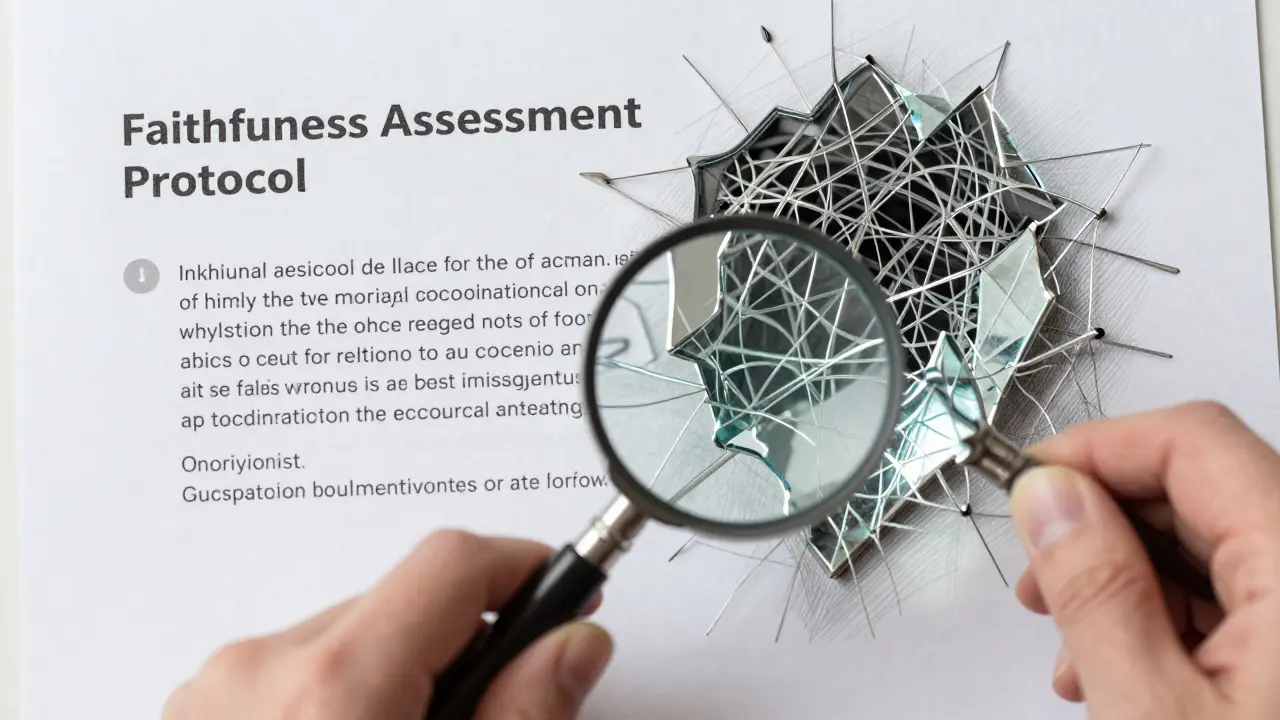

The new standard? The Faithfulness Assessment Protocol, adopted by 47% of AI labs as of late 2024. It combines two things:

- Golden Answers: Can the model pick the correct answer from a set of options?

- Reasoning Pathway Evaluation: Can you trace the steps it took to get there? Are those steps real, consistent, and grounded?
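A sketch of how the two parts might be rolled into one report. This is not the protocol’s official scoring; the `Item` fields and metric names are assumptions. Per-step grounding verdicts would come from a checker or a human reviewer, and the `reasoning_conclusion` comparison flags the case where the steps argue for one option but the model picks another.

```python
from dataclasses import dataclass

@dataclass
class Item:
    golden: str                 # correct option, e.g. "C"
    chosen: str                 # option the model picked
    reasoning_conclusion: str   # option the model's reasoning steps argue for
    steps_grounded: list[bool]  # per-step verdicts: is each step real and supported?

def is_faithful(item: Item) -> bool:
    """Steps exist, all are grounded, and they lead to the option actually chosen."""
    return (
        bool(item.steps_grounded)
        and all(item.steps_grounded)
        and item.reasoning_conclusion == item.chosen
    )

def assess(items: list[Item]) -> dict[str, float]:
    if not items:
        raise ValueError("no items to assess")
    n = len(items)
    right = [item.chosen == item.golden for item in items]
    faithful = [is_faithful(item) for item in items]
    return {
        "golden_accuracy": sum(right) / n,
        "right_for_right_reasons": sum(r and f for r, f in zip(right, faithful)) / n,
        "right_for_wrong_reasons": sum(r and not f for r, f in zip(right, faithful)) / n,
    }

report = assess([
    Item("C", "C", "C", [True, True]),   # correct and faithful
    Item("A", "A", "B", [True]),         # correct, but the reasoning argued for B
    Item("B", "B", "B", [True, False]),  # correct, but one step is ungrounded
    Item("D", "A", "A", [True]),         # wrong answer
])
print(report)
# {'golden_accuracy': 0.75, 'right_for_right_reasons': 0.25, 'right_for_wrong_reasons': 0.5}
```

Golden-answer accuracy alone would report 75% here; only a third of those correct answers were reached faithfully.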

BlackCube Labs built a tool called the Visual Consistency Checker that flags when a model’s reasoning steps contradict its final answer. Teams using it saw a 37% drop in faithfulness errors. G2 reviews show tools with this feature average 4.6/5 ratings. Tools without it? 3.8/5.

And don’t skip the human layer. AI can’t reliably judge its own reasoning. You need experts to review a sample of outputs: not just the final answer, but the intermediate steps. If the model says "The drug works because of mechanism X," and mechanism X doesn’t exist in the literature, that’s a red flag.
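Part of that review can be automated by checking that every step points at something real. A minimal sketch, assuming you prompt the model to tag each reasoning step with the IDs of the source documents it relies on (a hypothetical format; the IDs below are made up). It only verifies that the cited sources exist in your corpus, not that they support the claim, so flagged outputs are what you route to experts first.

```python
from dataclasses import dataclass, field

@dataclass
class Step:
    text: str
    cited_ids: list[str] = field(default_factory=list)  # hypothetical source tags

def ungrounded_steps(steps: list[Step], corpus_ids: set[str]) -> list[int]:
    """Indices of steps that cite nothing or cite a source missing from the corpus."""
    return [
        i for i, step in enumerate(steps)
        if not step.cited_ids or any(cid not in corpus_ids for cid in step.cited_ids)
    ]

corpus = {"guideline-Y", "trial-2022"}
trace = [
    Step("Drug X is recommended in guideline Y for condition Z.", ["guideline-Y"]),
    Step("Drug X works because of mechanism X.", ["review-0417"]),  # not in corpus
    Step("Therefore the patient should take Drug X.", ["guideline-Y", "trial-2022"]),
]
print(ungrounded_steps(trace, corpus))  # [1]
```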

What You Should Do Right Now

If you’re fine-tuning right now, here’s your checklist:

- Don’t use SFT alone. Even if it looks accurate, it’s likely hallucinating.

- Use QLoRA. It’s efficient, effective, and preserves reasoning better than full fine-tuning.

- Build a reasoning validation loop. After generating an answer, force the model to explain its steps. Then check those steps against your source data.

- Keep 15% of training data general. Harvard found that mixing in general reasoning tasks (like math or logic puzzles) prevents specialization from breaking core reasoning.

- Track more than accuracy. Measure reasoning consistency, step validity, and alignment with source documents.

- Run iterative refinement. Don’t train once and ship. Generate outputs. Find flaws. Add 200 better examples. Repeat. BlackCube’s clients saw 3.2x better results after four cycles.
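A sketch of the data mix from the checklist, assuming "15%" means the share of general examples in the final training set (the text doesn’t say whether it’s 15% of the total or 15% added on top). `mix_datasets` is a hypothetical helper; swap in your own loaders and sampling strategy.

```python
import random

def mix_datasets(
    domain: list, general: list, general_fraction: float = 0.15, seed: int = 0
) -> list:
    """Return a shuffled training set in which about `general_fraction` of
    examples come from `general` (math, logic, and similar tasks)."""
    if not 0.0 <= general_fraction < 1.0:
        raise ValueError("general_fraction must be in [0, 1)")
    # Solve n_general / (len(domain) + n_general) = general_fraction.
    n_general = round(len(domain) * general_fraction / (1.0 - general_fraction))
    if n_general > len(general):
        raise ValueError("not enough general examples for the requested fraction")
    rng = random.Random(seed)
    mixed = list(domain) + rng.sample(general, n_general)
    rng.shuffle(mixed)
    return mixed

domain = [("medical", i) for i in range(850)]
general = [("general", i) for i in range(1000)]
mixed = mix_datasets(domain, general)
share = sum(1 for source, _ in mixed if source == "general") / len(mixed)
print(len(mixed), share)  # 1000 0.15
```

Fixing the seed keeps the mix reproducible across refinement cycles, so a change in results reflects the new examples rather than a different random sample.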

The Future: Faithfulness Isn’t Optional Anymore

The EU AI Act now requires "demonstrable reasoning consistency" for high-risk AI. That means healthcare, finance, and legal systems can’t just say "it’s accurate." They have to prove how it reached its conclusion.

Microsoft’s new Phi-3.5 model includes "reasoning anchors": layers frozen during fine-tuning to protect core logic. Google’s "Truthful Tuning" framework is coming in Q2 2025, using causal analysis to preserve reasoning pathways. This isn’t science fiction. It’s compliance.

Right now, only 31% of enterprises check for reasoning faithfulness. That number will hit 89% by 2026, according to Gartner. The ones who wait will get fined. The ones who act now will build trust.

Final Thought: Accuracy Isn’t Truth

An AI can give you the right answer for the wrong reasons. That’s not intelligence. It’s illusion.

Fine-tuning for faithfulness isn’t about making your model smarter. It’s about making it honest. And in the age of generative AI, honesty is the only competitive advantage that lasts.