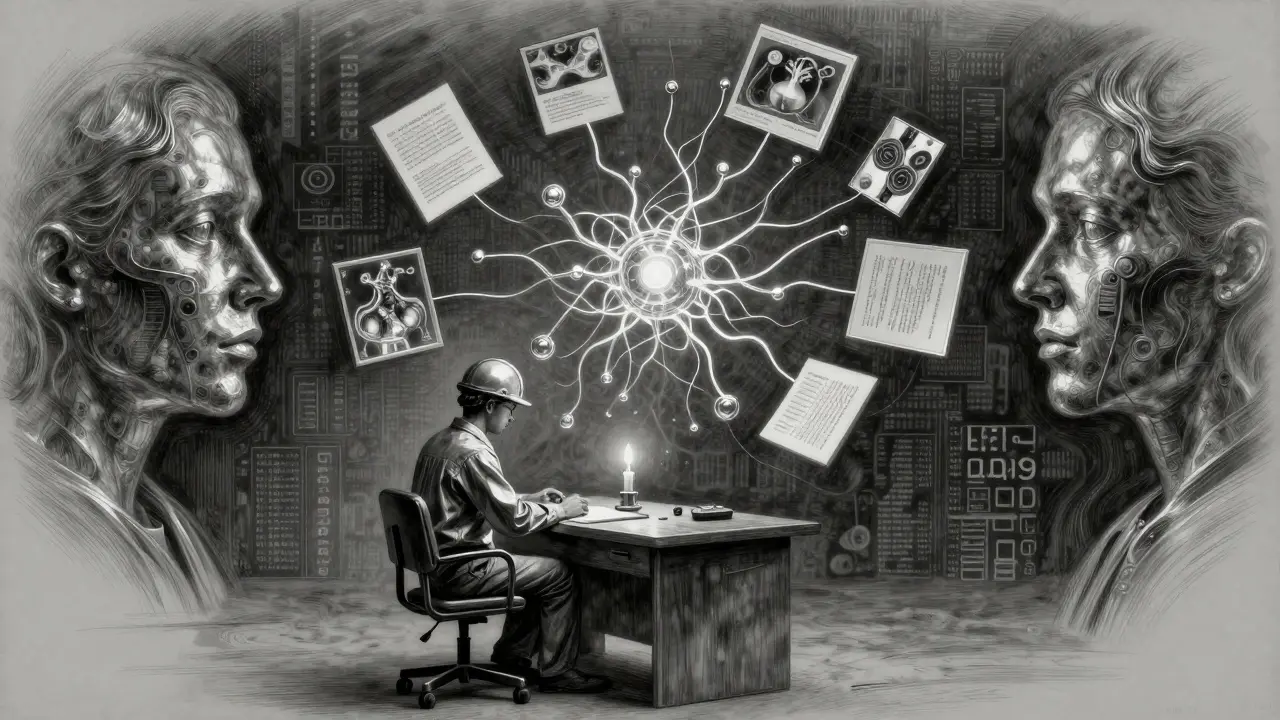

Most people think fine-tuning multimodal AI is just about feeding more data into a model. It’s not. The real challenge? Making sure the image and text actually understand each other. A model might see a photo of a skin lesion and read a diagnosis like "melanoma", but if it doesn’t know which part of the image matches which part of the text, it’s just guessing. That’s where dataset design and alignment losses come in. Get this right, and your model can spot tumors, detect factory defects, or generate accurate radiology reports. Get it wrong, and it’ll fail in production, even if it scores 95% on benchmarks.

Why Multimodal Fine-Tuning Isn’t Just Another Training Run

Pre-trained models like Google’s Gemma 3 or Meta’s Llama 3.2 can handle text, images, and sometimes audio. But they’re generalists. They’ve seen millions of image-text pairs from the internet. That’s great for chatting about cats or describing sunsets. Not so great when you need them to interpret X-rays, label industrial parts, or answer medical questions based on scans.

Fine-tuning fixes that. You take the pre-trained model and retrain it on a small, tightly curated set of examples: say, 10,000 dermatology images paired with expert-written diagnostic notes. But here’s the catch: you can’t just slap an image next to a paragraph and call it a day. The model needs to know which pixels relate to which words. That’s alignment.

Without proper alignment, the model might learn to associate the word "red" with any red object in the image, not the specific border of a suspicious mole. Or worse, it might ignore the image entirely and just memorize common phrases in the text. That’s why dataset design is more important than the model architecture in most real-world cases.

What a Good Multimodal Dataset Actually Looks Like

A good multimodal dataset isn’t a folder of JPEGs and CSV files. It’s a structured conversation between modalities. Google’s production pipeline for Gemma 3 uses a format called extended chat_template. Each training example looks like this:

- Image: A high-resolution dermoscopic photo of a skin lesion

- Text: "Patient is a 58-year-old male with a 3-month history of a changing mole on the left shoulder. Clinical impression: possible melanoma. Biopsy confirmed: invasive melanoma, Breslow thickness 2.1 mm."

- Metadata: Positional encoding for the image region, labeled as "lesion_region_001"
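To make the three-part example above concrete, here is a minimal sketch of how one record might be represented and sanity-checked before training. The field names, the normalized bounding box standing in for the region metadata, the prompt text, and the `messages` layout are all assumptions for illustration; this is not the actual Gemma 3 chat template.

```python
from dataclasses import dataclass


@dataclass
class MultimodalExample:
    """One image-text training record (all field names are illustrative)."""
    image_path: str
    text: str
    region_label: str   # e.g. "lesion_region_001"
    region_box: tuple   # assumed format: (x_min, y_min, x_max, y_max) in 0..1


def validate(example):
    """Return a list of problems; an empty list means the record looks usable."""
    problems = []
    if not example.image_path.lower().endswith((".png", ".jpg", ".jpeg")):
        problems.append("image_path is not a PNG or JPEG")
    if not example.text.strip():
        problems.append("text is empty")
    if not example.region_label:
        problems.append("region_label is missing")
    x0, y0, x1, y1 = example.region_box
    if not (0.0 <= x0 < x1 <= 1.0 and 0.0 <= y0 < y1 <= 1.0):
        problems.append("region_box must be normalized with min < max")
    return problems


def to_chat_record(example):
    """Arrange the record as a user turn (image + prompt) and an assistant turn."""
    return {
        "messages": [
            {
                "role": "user",
                "content": [
                    {
                        "type": "image",
                        "path": example.image_path,
                        "region": example.region_label,
                        "box": list(example.region_box),
                    },
                    # Hypothetical prompt; real prompts depend on the task.
                    {"type": "text", "text": "Describe the labeled region."},
                ],
            },
            {
                "role": "assistant",
                "content": [{"type": "text", "text": example.text}],
            },
        ]
    }
```

Running `validate` over every record before training is a cheap way to catch pairs that are missing region metadata, which is one of the failure modes discussed later in this article.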

Alignment Losses: The Secret Sauce That Keeps Modalities in Sync

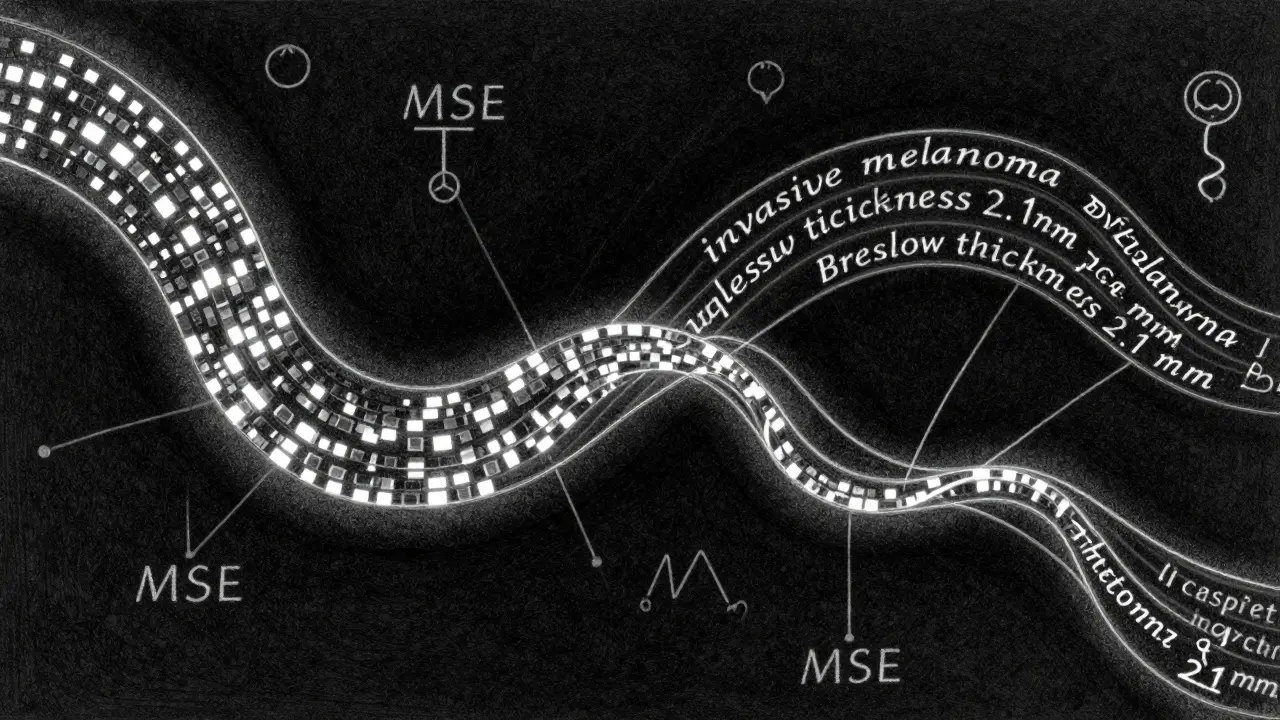

You can’t train a model to align modalities just by feeding it data. You need to tell it how to measure success. That’s where alignment losses come in. The most effective approach combines three loss functions:

- Contrastive loss: Pushes matching image-text pairs closer together in embedding space, and pushes mismatched pairs apart. A temperature of τ=0.07 is a common default for vision-language tasks; treat it as a starting point to tune.

- Cross-entropy loss: Ensures the model generates accurate text based on the image. Used for the language output head.

- Mean squared error (MSE): Aligns the visual embeddings from the image encoder with the text embeddings from the language model.

Each loss handles a different kind of alignment:

- Contrastive loss: Global relationship between image and text

- Cross-entropy: Accuracy of generated text

- MSE: Fine-grained matching of visual and semantic features
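The three losses above can be sketched in plain Python so the arithmetic is visible. This is an illustration under assumptions, not a production implementation: the contrastive term is written as a symmetric InfoNCE loss, the cross-entropy term takes the probabilities the model assigned to the correct tokens, and the weights `w_con`, `w_ce`, and `w_mse` are hypothetical knobs (the article does not specify how the terms are balanced). A real training loop would use a tensor library instead of lists.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]


def contrastive_loss(image_embs, text_embs, tau=0.07):
    """Symmetric InfoNCE; row i of each list is assumed to be a matching pair."""
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    n = len(imgs)
    # Cosine similarity of every image with every text, scaled by temperature.
    logits = [[dot(i, t) / tau for t in txts] for i in imgs]

    def row_loss(row, target):
        # Numerically stable -log softmax(row)[target].
        m = max(row)
        log_sum = m + math.log(sum(math.exp(x - m) for x in row))
        return log_sum - row[target]

    image_to_text = sum(row_loss(logits[k], k) for k in range(n)) / n
    text_to_image = sum(
        row_loss([logits[j][k] for j in range(n)], k) for k in range(n)
    ) / n
    return 0.5 * (image_to_text + text_to_image)


def cross_entropy(token_probs):
    """Mean negative log-probability assigned to the correct next tokens."""
    return -sum(math.log(p) for p in token_probs) / len(token_probs)


def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def combined_loss(image_embs, text_embs, token_probs,
                  w_con=1.0, w_ce=1.0, w_mse=1.0):
    """Weighted sum of the three terms; the weights are hypothetical."""
    mse_term = sum(mse(i, t) for i, t in zip(image_embs, text_embs)) / len(image_embs)
    return (w_con * contrastive_loss(image_embs, text_embs)
            + w_ce * cross_entropy(token_probs)
            + w_mse * mse_term)
```

With a small τ such as 0.07, similarity differences are amplified before the softmax, so a mismatched pair is penalized sharply. That is also why the temperature is worth tuning rather than copying.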

Parameter-Efficient Fine-Tuning: How You Do This Without a Supercomputer

Full fine-tuning a 7-billion-parameter model? That’s 8 A100 GPUs, $14,200 per run, and weeks of training. Not feasible for most teams. Enter parameter-efficient fine-tuning (PEFT). The two dominant methods are LoRA and QLoRA.

LoRA (Low-Rank Adaptation) adds tiny trainable matrices to existing layers. Instead of updating every weight in the model, you freeze the base weights and train new parameters amounting to just 0.5-0.8% of the total. Microsoft Research showed this retains 98.7% of full fine-tuning accuracy across 17 tasks. It’s like adding a small, focused adjustment to a massive engine instead of rebuilding it.

QLoRA takes it further. It combines LoRA with 4-bit quantization of the frozen base model. The original QLoRA work fine-tuned a 65-billion-parameter model on a single 48GB GPU; on a consumer-grade NVIDIA RTX 4090 with 24GB VRAM, models up to roughly 33 billion parameters fit. Google Cloud’s cost analysis shows QLoRA cuts training expenses by 87%, from $14,200 down to $1,850.

But there’s a trade-off. QLoRA is slower, with 22% longer training time, because the weights have to be dequantized during training. LoRA is faster and slightly more accurate for specialized tasks. Adapters (another PEFT method) are better if you’re doing multiple tasks in sequence: they reduce catastrophic forgetting by 37.2%.

As of Q3 2025, 73.5% of enterprise teams use PEFT. LoRA leads at 48.7% adoption, QLoRA at 26.5%, and Adapters at 24.8%. For most teams starting out, LoRA is the sweet spot.

The Hidden Problems Nobody Talks About

Even with perfect datasets and loss functions, things go wrong.

Modality dominance: Text often drowns out images. A model might learn to predict "cancer" just from the word "biopsy" in the text, ignoring the image entirely. Solution? Use different learning rates: 0.0002 for vision components, 0.0005 for text. This forces the model to pay more attention to the image.

Bias amplification: Synthetic datasets or poorly sampled real data can make models worse. University of Washington’s AI Ethics Lab found multimodal fine-tuning on biased data amplified skin tone bias by up to 22.8% in dermatology models. If your training data has mostly light-skin images, the model will fail on darker skin. Bias testing is now required in the EU under the AI Act.

Alignment drift: A model might perform perfectly on your test set but crash on real-world data. Google’s operational guide found 18-35% performance drops on out-of-distribution examples. That’s why you need ongoing monitoring and periodic re-fine-tuning.

Reddit user Alex Rodriguez spent 37 hours debugging alignment issues before realizing his image-text pairs weren’t using positional encoding. GitHub issue #1784 on Axolotl shows the same problem: models fail to attend to visual elements because the cross-attention mechanism isn’t properly supervised.
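The per-modality learning rates suggested above for modality dominance could be set up along these lines. This is a minimal sketch: the `vision_tower.` name prefix is an assumption about how a model names its vision parameters, so check your own model’s parameter names before relying on it. The function is framework-free, but it returns the list-of-dicts shape with `params` and `lr` keys that PyTorch optimizers accept as parameter groups.

```python
def build_param_groups(named_params, vision_prefix="vision_tower.",
                       vision_lr=2e-4, text_lr=5e-4):
    """Split (name, parameter) pairs into vision and text groups.

    The prefix is hypothetical; the two learning rates are the values
    quoted in the article (0.0002 for vision, 0.0005 for text).
    """
    vision, text = [], []
    for name, param in named_params:
        if name.startswith(vision_prefix):
            vision.append(param)
        else:
            text.append(param)

    groups = []
    if vision:
        groups.append({"params": vision, "lr": vision_lr})
    if text:
        groups.append({"params": text, "lr": text_lr})
    return groups
```

With PyTorch, you would typically pass `model.named_parameters()` (filtered to trainable parameters) and hand the result to an optimizer such as `torch.optim.AdamW(groups)`.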

What You Need to Start

You don’t need a PhD. But you do need:

- PyTorch or TensorFlow expertise (92% of successful teams have this)

- Multimodal data engineering skills (87%): knowing how to clean, encode, and validate image-text pairs

- Understanding of alignment losses (79%): not just copy-pasting code, but tuning τ and balancing MSE against cross-entropy

The Future: Less Manual Work, More Intelligence

By 2027, AI will auto-generate 82% of multimodal training data. Google’s new Gemma 3.1, released January 2026, reduces needed training data by 35% through smarter alignment. Meta’s upcoming Llama 3.3 will support structured output fine-tuning, meaning you can train models to generate JSON reports from images, not just paragraphs.

The big shift? From manual curation to automated alignment. Tools like Axolotl and Amazon Bedrock are making this accessible. But the core problem hasn’t changed: machines still don’t understand context the way humans do. Your job isn’t to train the model. It’s to teach it what matters.

What Happens If You Skip This?

You’ll build a model that looks great on paper. It’ll score high on accuracy. But in production, it’ll miss tumors, mislabel parts, or generate dangerous medical summaries. You’ll blame the model. The truth? You didn’t give it the right data. You didn’t align the signals. And that’s on you. The best multimodal AI doesn’t have the biggest parameters. It has the cleanest data, the most thoughtful alignment, and the discipline to test for failure, not just success.

What’s the difference between LoRA and QLoRA for multimodal fine-tuning?

LoRA adds small trainable matrices to a model’s layers, reducing trainable parameters to under 1% while keeping nearly all performance. QLoRA adds 4-bit quantization on top, letting you run billion-parameter models on a single consumer GPU. QLoRA cuts costs by 87% but takes 22% longer to train due to dequantization. Use LoRA for speed and precision; QLoRA for budget and scale.
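To see why LoRA keeps the trainable share so small, it helps to count parameters. In the standard LoRA formulation, a frozen weight matrix W of shape d_out × d_in gets an update (α/r)·B·A, where B is d_out × r and A is r × d_in, so each adapted matrix adds r·(d_out + d_in) trainable values. The sketch below does that arithmetic; the layer sizes, rank, and number of adapted matrices in the usage note are hypothetical and not taken from any specific model.

```python
def lora_param_count(d_out, d_in, rank):
    """Trainable values added by one rank-`rank` adapter: B (d_out x r) + A (r x d_in)."""
    return rank * (d_out + d_in)


def trainable_fraction(d_out, d_in, rank, n_adapted, total_params):
    """Share of the model that is trainable when `n_adapted` matrices get adapters.

    Assumes, for simplicity, that every adapted matrix has the same shape.
    """
    return n_adapted * lora_param_count(d_out, d_in, rank) / total_params
```

For example, `trainable_fraction(4096, 4096, 16, n_adapted=128, total_params=7_000_000_000)` models a 7B-parameter network with rank-16 adapters on 128 square projection matrices, and the result comes out well under 1%. Raising the rank or adapting more matrices increases the share proportionally.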

Why is dataset alignment more important than model size?

A large model with bad alignment learns to guess based on text patterns, not image content. Google’s analysis found 68.3% of fine-tuning failures came from misaligned image-text pairs, not model architecture. Even a 7B model can outperform a 70B one if the data is perfectly aligned. The model needs to know which pixels match which words. Without that, it’s just memorizing.

Can I use synthetic data for multimodal fine-tuning?

Yes, but with caution. AI-generated image-text pairs can speed up data collection by 47-63%, as shown by Business Compass LLC. But they can also amplify biases. University of Washington found synthetic datasets increased skin tone bias by up to 22.8% in medical imaging. Always audit synthetic data for fairness, distribution gaps, and hallucinated details. Use real data as your anchor.

What loss functions should I combine for multimodal tasks?

Use three: contrastive loss (τ=0.07) to align image and text embeddings, cross-entropy to ensure accurate text generation, and mean squared error to match visual and textual feature spaces. AWS found this combo improves F1-scores by 18.3% over single-loss approaches. Don’t just pick one: each loss handles a different kind of alignment.

Do I need a GPU cluster to fine-tune multimodal models?

No. With QLoRA, you can fine-tune models of up to roughly 33B parameters on a single 24GB RTX 4090, and a 65B-parameter model on a single 48GB GPU. Cloud platforms like Amazon Bedrock and Google Vertex AI also offer managed fine-tuning that hides the complexity. Full fine-tuning requires 8 A100s, but PEFT methods like LoRA and QLoRA make it feasible on a single GPU. The barrier isn’t hardware anymore. It’s data quality and alignment knowledge.

How do I avoid bias in my multimodal dataset?

Start with stratified sampling: ensure equal representation across skin tones, age groups, or equipment types. Use fairness metrics like demographic parity and equalized odds. Test with out-of-distribution examples. The EU AI Act requires bias testing for healthcare applications, and for good reason: models trained on unbalanced data can miss diagnoses in underrepresented groups. Always audit before deployment.
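Both ideas above, stratified sampling and demographic parity, are simple enough to sketch directly. The version below is a minimal illustration: records are assumed to be dicts carrying a group attribute (the `tone` key in the usage note is hypothetical), and the parity check reports the largest gap in positive-prediction rate between any two groups. Equalized odds would additionally need ground-truth labels and is not shown.

```python
import random
from collections import defaultdict


def stratified_sample(records, key, per_group, seed=0):
    """Draw the same number of records from every group defined by `key`."""
    rng = random.Random(seed)
    buckets = defaultdict(list)
    for record in records:
        buckets[record[key]].append(record)

    sample = []
    for group in sorted(buckets):
        items = buckets[group]
        if len(items) < per_group:
            # Fail loudly rather than silently under-representing a group.
            raise ValueError(f"group {group!r} has only {len(items)} records")
        sample.extend(rng.sample(items, per_group))
    return sample


def demographic_parity_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups."""
    positives = defaultdict(int)
    totals = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)
```

A call such as `stratified_sample(records, "tone", per_group=500)` raises an error when a group is too small, which is usually the signal to collect more data for that group rather than to oversample it. A parity gap near 0 means the model flags positives at similar rates across groups; what counts as acceptable depends on the application.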

What’s the biggest mistake people make when fine-tuning multimodal AI?

Assuming the model will figure out the alignment on its own. It won’t. You have to design the data structure (positional encoding, paired examples), choose the right loss functions, and monitor for modality dominance. The model doesn’t know what’s important. You do. Most failures aren’t technical; they’re conceptual. You didn’t teach the model what to pay attention to.
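One possible way to monitor for modality dominance is an image-ablation check: replace each image with a blank one and count how often the prediction stays the same. This is a suggested diagnostic, not a method described in the sources above. `predict` is any callable you supply that maps an image and a text to a label, and the two toy predictors below are hypothetical stand-ins for a real model.

```python
def image_ablation_rate(predict, examples, blank_image):
    """Fraction of examples whose prediction is unchanged when the image is blanked.

    A rate close to 1.0 suggests the model is answering from the text alone.
    """
    if not examples:
        raise ValueError("examples must not be empty")
    unchanged = 0
    for image, text in examples:
        if predict(image, text) == predict(blank_image, text):
            unchanged += 1
    return unchanged / len(examples)


# Toy predictors standing in for a real model (both are hypothetical).
def text_only_model(image, text):
    # Ignores the image entirely and keys on a word in the text.
    return "cancer" if "biopsy" in text else "benign"


def image_aware_model(image, text):
    # Bases its answer on the image content.
    return "cancer" if image == "dark_irregular" else "benign"
```

Some unchanged predictions are expected even for a healthy model, since a blank image and a benign image can legitimately yield the same label. The signal to watch is a rate that stays near 1.0 across a varied evaluation set.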