When developers started using AI to write code just by describing what they wanted - not writing it line by line - everything seemed faster. A feature that took days could now be spun up in hours. But after a few months, teams began hitting walls. Systems broke unexpectedly. Security scans lit up like Christmas trees. Refactoring became a nightmare. The problem wasn’t the AI. It was the lack of architecture.

Why Vibe Coding Needs Rules, Not Just Prompts

Vibe coding isn’t magic. It’s a new way of working where you tell an AI what you want - not just the function, but the vibe: "Make this feel smooth, fast, and professional," or "This should feel playful and responsive, like a mobile game." The AI then generates the code. Sounds great, right? But without structure, you’re not building software. You’re collecting fragments of code that might work today but collapse tomorrow.

A team at Codecentric analyzed an AI-generated SaaS platform built with loose vibe coding. They found 37 critical security holes and 21 architectural anti-patterns. One module had 14 nested function calls. Another had 42% unused code. The folder structure didn’t follow any known convention. The AI didn’t know better. It was just trying to make something that ran.

The truth? AI doesn’t care about maintainability. It doesn’t know what loose coupling means. It won’t think about scalability unless you tell it - and tell it again. And again.

The Five Architectural Rules No Vibe-Coded System Can Ignore

If you’re using vibe coding - whether through GitHub Copilot, CodeWhisperer, or a custom AI agent - these five rules aren’t optional. They’re the minimum survival kit.

- Composition over inheritance: Don’t build layers of classes that inherit from each other. Instead, build small, reusable behaviors - like "can authenticate," "can log," "can retry" - and plug them in where needed. Synaptic Labs found that teams using this approach had 87% of their object behaviors built from just 12 standardized capability interfaces. No deep hierarchies. No fragile base classes.

- Dependency injection with strict interfaces: Never let your AI-generated code directly instantiate other classes. Always pass dependencies in through the constructor. Use interfaces, not concrete types. One team saw coupling drop by 63% after enforcing this. That means when one part changes, ten others don’t break.

- Tell, don’t ask: Don’t let code reach into another object to check its state. Tell it what to do. "Save this record," not "Is the record dirty? If yes, then save it." This simple shift cut conditional logic by 41% in refactored systems. Fewer if-statements mean fewer bugs.

- Law of Demeter: No chaining. No

user.profile.settings.theme.color. That’s a disaster waiting to happen. Instead, create service interfaces that hide complexity. A single call likethemeService.getCurrent()replaces a 4.7-step chain. In compliant systems, that chain dropped to 1.2 steps on average. - AI-friendly design patterns: Be predictable. Use the same REST structure everywhere:

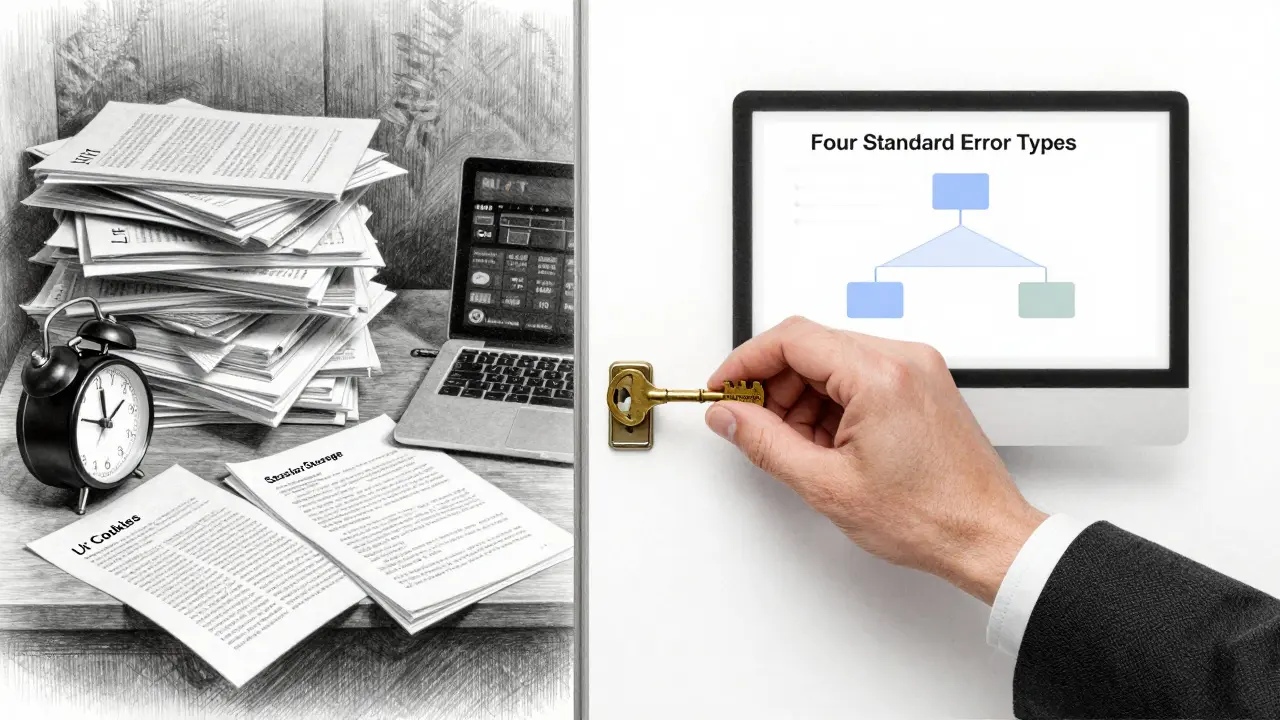

POST /items,GET /items/{id},PUT /items/{id},DELETE /items/{id},GET /items. Use four standardized error types:BadRequest,NotFound,Unauthorized,ServerError. Keep dependency graphs flat. When teams did this, AI extension success rates jumped from 58% to 92%.
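The first four rules are easier to picture in code. The sketch below is one possible reading, not a reference implementation: every class name (`ListLogger`, `AllowListAuthenticator`, `Record`, `RecordService`) is invented for illustration, and `ThemeService.get_current` is a Python-flavored stand-in for the `themeService.getCurrent()` call mentioned above.

```python
from dataclasses import dataclass, field
from typing import Protocol


class Logger(Protocol):
    """Capability interface: 'can log'."""
    def log(self, message: str) -> None: ...


class Authenticator(Protocol):
    """Capability interface: 'can authenticate'."""
    def is_allowed(self, user_id: str) -> bool: ...


class ListLogger:
    """Hypothetical 'can log' behavior that keeps messages in memory."""
    def __init__(self) -> None:
        self.messages: list[str] = []

    def log(self, message: str) -> None:
        self.messages.append(message)


class AllowListAuthenticator:
    """Hypothetical 'can authenticate' behavior backed by a fixed set of ids."""
    def __init__(self, allowed: set[str]) -> None:
        self._allowed = allowed

    def is_allowed(self, user_id: str) -> bool:
        return user_id in self._allowed


@dataclass
class Record:
    payload: str
    _dirty: bool = True
    saved_versions: list[str] = field(default_factory=list)

    def save(self) -> None:
        """Tell, don't ask: the record decides whether a write is needed."""
        if self._dirty:
            self.saved_versions.append(self.payload)
            self._dirty = False


class ThemeService:
    """Law of Demeter: hides the user -> profile -> settings -> theme chain."""
    def __init__(self, themes_by_user: dict[str, str], default: str = "light") -> None:
        self._themes = themes_by_user
        self._default = default

    def get_current(self, user_id: str) -> str:
        return self._themes.get(user_id, self._default)


class RecordService:
    """Composed from injected capabilities; it never instantiates them itself."""
    def __init__(self, auth: Authenticator, logger: Logger) -> None:
        self._auth = auth
        self._logger = logger

    def save(self, user_id: str, record: Record) -> bool:
        if not self._auth.is_allowed(user_id):
            self._logger.log(f"denied: {user_id}")
            return False
        record.save()  # tell the record what to do; no peeking at its state
        self._logger.log(f"saved for: {user_id}")
        return True
```

Because `RecordService` only sees the `Logger` and `Authenticator` interfaces, a test can pass in `ListLogger` while production passes in something else, and neither change touches the service itself.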

Structured vs. Unstructured: The Data Doesn’t Lie

There are two kinds of vibe-coded systems: those built with discipline, and those built with hope. Drew Maring’s team used a specification-first approach. They wrote clear, structured prompts that included architectural constraints: "Use composition. No global state. All services must expose the same error format." Their system had 94% test coverage. Technical debt was 68% lower than average.

Compare that to the unstructured approach - just typing "build me a dashboard that feels modern" and letting the AI run wild. The results? 73% of systems had strong coupling. 37 critical security issues per 1,000 lines of code. 42% of the codebase was dead weight. That’s not innovation. That’s technical rot.

The difference isn’t subtle. Systems with architectural guardrails have 3.2x higher maintainability scores. They’re easier to extend, fix, and audit. They survive beyond the first sprint.

The Reference Architecture: Four Parts That Make It Work

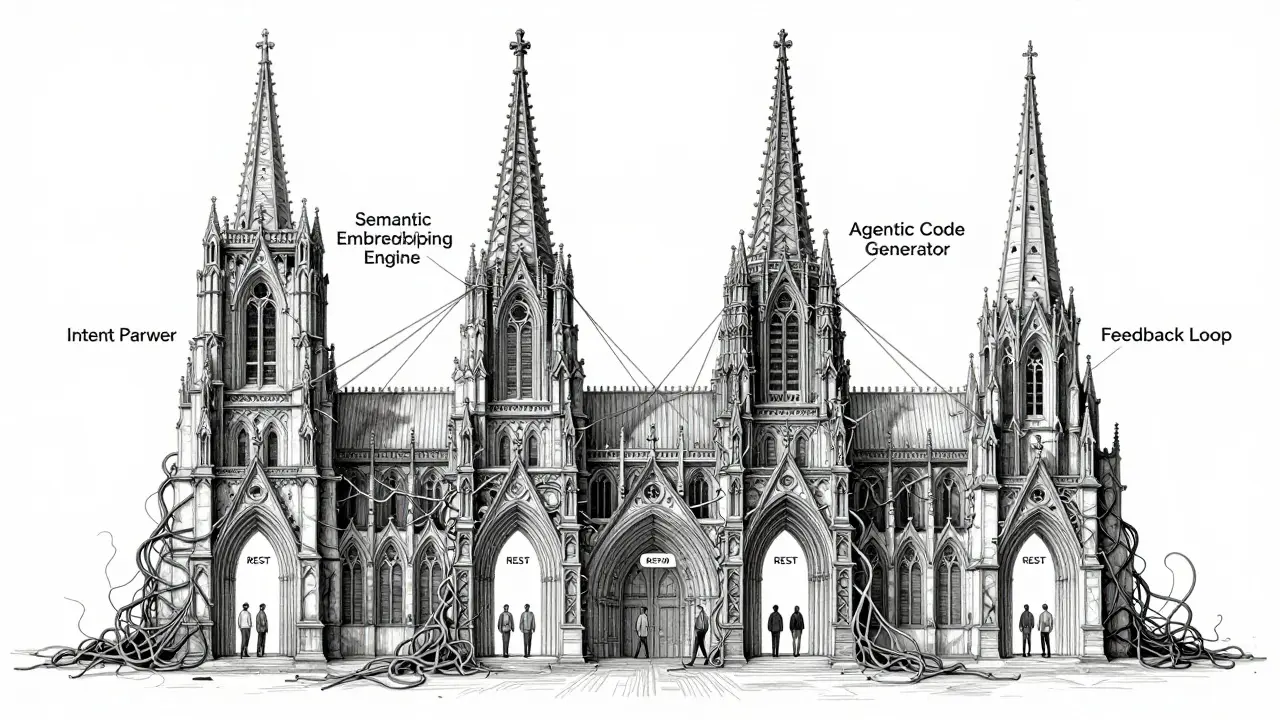

The October 2025 arXiv paper laid out the first formal reference architecture for vibe coding. It’s not theoretical. Teams are using it now.

- Intent Parser: Takes your natural language description - "I need a user registration flow that’s fast and friendly" - and turns it into structured metadata: required fields, validation rules, tone, expected response time.

- Semantic Embedding Engine: Understands the vibe. "Friendly" doesn’t mean the same thing in banking as it does in a fitness app. This layer maps emotional descriptors to technical patterns: "friendly" → low friction, micro-animations, clear feedback, no jargon.

- Agentic Code Generator: Produces the actual code, but only if it passes the architectural rules. If the prompt says "use composition," the generator won’t spit out a 12-layer inheritance tree.

- Feedback Loop: After deployment, the system monitors performance, error rates, and user feedback. If the vibe drifts - say, users complain the app feels slow - the loop triggers a re-generation with updated constraints.
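To make the four parts less abstract, here is a toy sketch of how they might hand data to each other. It is an assumption-heavy illustration, not the architecture from the paper: the `Intent` fields, the `VIBE_PATTERNS` table, the substring-based rule check, and the `model` callable (a stand-in for whatever actually generates code) are all hypothetical.

```python
from dataclasses import dataclass, replace
from typing import Callable


@dataclass(frozen=True)
class Intent:
    """Structured metadata of the kind the Intent Parser is said to produce."""
    feature: str
    required_fields: tuple[str, ...]
    tone: tuple[str, ...]
    max_response_ms: int
    constraints: tuple[str, ...] = ()


# Hypothetical vibe table; the "friendly" entry mirrors the example above.
VIBE_PATTERNS = {
    "friendly": ("low friction", "micro-animations", "clear feedback", "no jargon"),
}


def expand_vibe(intent: Intent) -> tuple[str, ...]:
    """Stand-in for the Semantic Embedding Engine: map tone words to patterns."""
    patterns: list[str] = []
    for word in intent.tone:
        patterns.extend(VIBE_PATTERNS.get(word, ()))
    return tuple(patterns)


def violates(code: str, constraints: tuple[str, ...]) -> list[str]:
    """Toy rule check; a real gate would use linters, not substring matching."""
    broken = []
    if "use composition" in constraints and "super().__init__" in code:
        broken.append("use composition")
    return broken


def generate(intent: Intent, model: Callable[[Intent, tuple[str, ...]], str]) -> str:
    """Generator gate: only return code that passes the architectural rules."""
    code = model(intent, expand_vibe(intent))
    broken = violates(code, intent.constraints)
    if broken:
        raise ValueError(f"rejected, violates: {broken}")
    return code


def feedback(intent: Intent, observed_ms: int) -> Intent:
    """Feedback loop: add a constraint when the vibe drifts (here: too slow)."""
    if observed_ms <= intent.max_response_ms:
        return intent
    return replace(intent, constraints=intent.constraints + ("reduce response time",))
```

The point of the shape is that the generator never sees a raw prompt: it sees an `Intent` plus expanded patterns, and its output is rejected before anyone reviews it if a declared constraint is broken.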

What Happens When You Skip the Rules

A Reddit user named CodeSlinger42 spent three weeks debugging an AI-generated app that kept crashing randomly. No one could figure out why. Then they adopted Synaptic Labs’ composition patterns. Debugging time dropped 76%. The problem? The AI had generated 14 different ways to handle user authentication. Some used cookies. Some used JWT. Some used session storage. No consistency. No testing.

On Hacker News, ArchitectInChief shared how their team built a financial dashboard using Drew Maring’s constitutional method. New features were added with only 8 hours of refactoring per 1,000 lines of AI-generated code. Why? Because every component followed the same rules. Every API had the same structure. Every error was the same type.

The cost of skipping structure? 4.7x more technical debt per month. That’s not a typo. It’s what happens when you trade short-term speed for long-term chaos.

How to Start Getting It Right

You don’t need a team of architects. But you do need a plan. Start with a 3-phase onboarding:

- Build your constitution: Write down 4-8 non-negotiable rules. Examples: "All services must be stateless," "No inline SQL," "All errors must use the four standard types." Spend 4-8 hours with your team. Get it on a whiteboard. Post it in Slack.

- Create reference implementations: For each core pattern - authentication, logging, error handling - write one perfect example. Not a template. A real, working, tested example. Save it in your repo. When the AI generates something that doesn’t match, flag it.

- Set up validation pipelines: Use tools like vFunction or custom linters to check every AI-generated commit. Does it follow the Law of Demeter? Is dependency injection used? Are error types consistent? Block the merge if it fails.
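A custom linter for the validation phase doesn’t have to be elaborate. The sketch below uses Python’s standard `ast` module to flag long attribute chains as a rough proxy for Law of Demeter violations. The function name and the `max_dots` threshold are assumptions, and the check is deliberately naive: it ignores a leading `self`, stops counting at method calls, and would also flag harmless module paths such as `os.path.join` if the threshold is set to 1.

```python
import ast


def find_demeter_violations(source: str, max_dots: int = 1) -> list[tuple[int, str]]:
    """Return (line, expression) for attribute chains longer than max_dots."""
    tree = ast.parse(source)
    nested: set[int] = set()  # ids of nodes already counted as part of a chain
    violations = []
    # ast.walk yields parents before children, so the outermost
    # attribute of each chain is always seen first.
    for node in ast.walk(tree):
        if not isinstance(node, ast.Attribute) or id(node) in nested:
            continue
        dots = 0
        current: ast.AST = node
        while isinstance(current, ast.Attribute):
            dots += 1
            current = current.value
            nested.add(id(current))
        # Assumption: reaching through `self` to an own field is acceptable.
        if isinstance(current, ast.Name) and current.id == "self":
            dots -= 1
        if dots > max_dots:
            violations.append((node.lineno, ast.unparse(node)))
    return violations
```

A CI job could run this over every changed file and fail the build when the returned list is non-empty.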

The Future Is Governed

Gartner predicts 55% of enterprise teams will use AI coding assistants by 2026. But here’s the catch: 90% of vibe-coded systems without architectural standards will need a full rewrite within three years. The EU’s AI Act now requires "architectural transparency documentation" for systems in healthcare and finance. Fortune 100 companies are already enforcing formal architecture reviews before deploying AI-generated code. The IEEE P2874 standard - currently in draft - will soon require minimum adherence to separation of concerns (85%), maximum coupling thresholds (0.35 fan-out), and mandatory security validation.

This isn’t coming. It’s here. The teams that win aren’t the ones using AI the most. They’re the ones governing it the best. Vibe coding isn’t about writing less code. It’s about writing better code - with discipline, consistency, and clear boundaries.

What Happens When the AI Gets It Wrong?

The biggest myth is that AI will "learn" good architecture over time. It won’t. AI doesn’t learn from mistakes unless you show it, every single time. If the AI generates a class that directly accesses the database from the UI layer - which it will, unless you block it - you need a tool that catches it. A linter. A CI check. A team member who says, "This violates Principle 3. Fix it." You can’t outsource architecture to an AI. You have to enforce it.

Who Should Use Vibe Coding?

Startups and innovation labs love vibe coding. They move fast. They don’t have legacy systems. But they also don’t have the budget to rebuild everything in six months. Regulated industries - healthcare, finance, government - are the ones adopting architectural standards fastest. They can’t afford breaches. They can’t afford technical debt. They’re using constitutional frameworks because they have to. If you’re in a high-risk domain, vibe coding is a tool - not a shortcut. Use it, but lock it down.

Where to Learn More

The Vibe Architecture Guild, launched in January 2025, holds weekly reviews of real-world patterns. Synaptic Labs maintains a public repository of 37 validated architectural patterns for AI collaboration. Google’s Constitution-Based Approach documentation is publicly available and widely referenced. Don’t rely on random blog posts. Don’t copy-paste AI-generated code without review. The stakes are too high.

Final Thought

Vibe coding is the future - but only if we build it with care. Speed without structure is just noise. The most powerful AI in the world can’t replace a good architect. But it can be a powerful assistant - if you tell it exactly what good looks like.

What exactly is vibe coding?

Vibe coding is an AI-native programming approach where developers describe not just what a system should do, but also the desired tone, style, or emotional experience - its "vibe." The AI then generates code that matches both the functional requirements and the qualitative feel. For example, a prompt might say, "Build a checkout flow that feels fast and trustworthy," and the AI will generate code with micro-interactions, clear feedback, and minimal steps - not just working logic.

Do I still need architects if I use vibe coding?

Yes - maybe even more than before. AI doesn’t understand system design, scalability, or security by default. Architects define the rules - the "constitution" - that guide the AI. Without them, AI-generated code becomes chaotic. Successful teams have architects with 10+ years of experience who also understand prompt engineering and AI behavior.
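One lightweight way to make a constitution actually guide the AI is to keep it as data and prepend it to every request. The sketch below assumes that setup; the rule ids (`P1` to `P4`) and the `build_prompt` helper are invented for illustration, and the rule texts are simply the examples quoted earlier in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    rule_id: str
    text: str


# Hypothetical constitution assembled from the article's example rules.
CONSTITUTION = (
    Rule("P1", "All services must be stateless."),
    Rule("P2", "No inline SQL."),
    Rule("P3", "All errors must use the four standard types: "
               "BadRequest, NotFound, Unauthorized, ServerError."),
    Rule("P4", "Use composition over inheritance."),
)


def build_prompt(request: str, rules: tuple[Rule, ...] = CONSTITUTION) -> str:
    """Prefix a request with the numbered, non-negotiable rules."""
    lines = ["Follow these architectural rules without exception:"]
    lines += [f"{rule.rule_id}. {rule.text}" for rule in rules]
    lines += ["", f"Task: {request}"]
    return "\n".join(lines)
```

Numbering the rules also gives reviewers a shared vocabulary: a comment can point at a specific rule id instead of re-arguing the principle.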

Can I use vibe coding for legacy systems?

It’s risky. Vibe coding works best when you start fresh with clear patterns. Trying to generate new modules for a legacy system with inconsistent architecture often makes things worse. The AI will replicate existing anti-patterns. Better to isolate new features in a separate, well-structured microservice and connect it cleanly to the old system.

What tools support architectural governance in vibe coding?

GitHub Copilot and Amazon CodeWhisperer focus on code completion but offer little architectural control. For governance, use specialized tools like vFunction (for post-generation validation), Synaptic Labs (for structured collaboration), or build custom linters that enforce your team’s constitution. The IEEE P2874 standard, expected in 2026, will define required tooling features.

How do I stop my team from drifting away from the rules?

Make violations impossible. Use automated validation in your CI/CD pipeline. If a commit breaks a rule - say, it uses inheritance instead of composition - block the merge. Hold weekly reviews of AI-generated code. Celebrate when someone catches a violation. Turn governance into a team habit, not a one-time setup.
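As a rough illustration of "block the merge," the sketch below flags any class whose base is not on a small allow-list and turns the result into an exit code a CI step could use. The allow-list contents and both function names are assumptions; a real team would tune them (for example, to permit framework base classes).

```python
import ast

# Assumption: these bases declare interfaces or errors rather than
# sharing implementation, so inheriting from them is allowed.
ALLOWED_BASES = {"Protocol", "ABC", "Exception"}


def find_inheritance_violations(source: str) -> list[str]:
    """List 'Class(Base)' pairs whose base is not on the allow-list."""
    violations = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.ClassDef):
            for base in node.bases:
                # Compare on the last path segment, so typing.Protocol matches.
                name = ast.unparse(base).split(".")[-1]
                if name not in ALLOWED_BASES:
                    violations.append(f"{node.name}({ast.unparse(base)})")
    return violations


def gate(source: str) -> int:
    """Return a process exit code: 0 lets the merge proceed, 1 blocks it."""
    violations = find_inheritance_violations(source)
    for violation in violations:
        print(f"inheritance instead of composition: {violation}")
    return 1 if violations else 0
```

Wired into a pipeline, the script would call `sys.exit(gate(...))` for each changed file so that a non-zero code fails the check.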

Is vibe coding secure?

Only if you make it secure. Unstructured vibe coding produces 3.7x more security vulnerabilities than manual coding. But with explicit security constraints - like "No direct database access," "All inputs must be sanitized," "Use only approved libraries" - the gap shrinks to 18%. Security isn’t an afterthought. It’s part of the initial prompt.
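Constraints in a prompt are only half the story; the same constraints can be checked after generation. As a minimal sketch of the "use only approved libraries" rule, the function below lists top-level imports that are not on an allow-list. The `APPROVED` set is a made-up example, and relative imports are skipped on the assumption that they point at the project’s own code.

```python
import ast

# Hypothetical allow-list; a real one would come from the team's constitution.
APPROVED = frozenset({"json", "logging", "dataclasses", "typing"})


def unapproved_imports(source: str, approved: frozenset[str] = APPROVED) -> list[str]:
    """Return the sorted top-level packages imported but not approved."""
    found: set[str] = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            found.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            found.add(node.module.split(".")[0])
    return sorted(found - approved)
```

With a database driver left off the list, the same check doubles as a crude guard for "no direct database access" in layers that should not touch storage.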

What’s the biggest mistake teams make with vibe coding?

Thinking speed equals success. Teams get excited because they can generate a working prototype in hours. But when maintenance time explodes - because of poor structure, tight coupling, or hidden bugs - the cost skyrockets. The real win isn’t faster code. It’s code that lasts, scales, and doesn’t break.