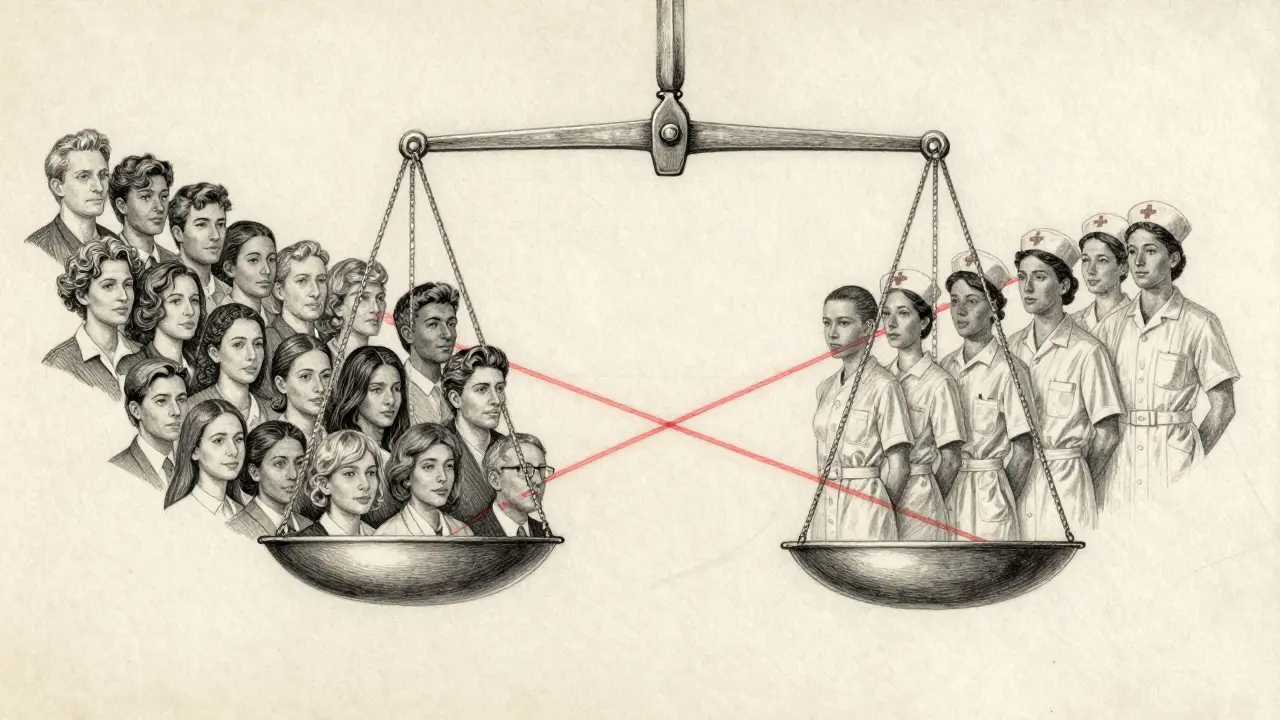

Generative AI doesn’t just answer questions. It creates images, writes essays, designs ads, and even helps decide who gets a loan or a job. But if it’s trained on biased data, it won’t just repeat mistakes. It will amplify them. That’s why fairness testing isn’t optional anymore. It’s a requirement for any organization serious about deploying these systems responsibly.

Why Fairness Testing Matters More Than Ever

In 2023, a major bank’s AI loan assistant denied applications from majority-Black neighborhoods at nearly twice the rate of other areas. The system wasn’t programmed to be racist. It learned patterns from decades of lending data where redlining and systemic discrimination had already skewed outcomes. By the time the error was caught, the bank had paid $12 million in settlements. This wasn’t an anomaly. It was a warning.

Generative AI models like ChatGPT, DALL-E, and Stable Diffusion don’t just classify. They generate. And because their outputs are stochastic, two identical prompts can produce wildly different results. One user might get a professional photo of a CEO; another, with the same prompt, might get a CEO who looks nothing like them. That inconsistency isn’t a bug. It’s a feature of how these models work. But it’s also a major risk.

The stakes are high. Generative AI is now used in healthcare diagnostics, hiring tools, education platforms, and public services. If the system consistently misrepresents women, people of color, or non-native speakers, it doesn’t just offend. It excludes. And exclusion leads to legal, financial, and reputational damage.

Key Fairness Metrics Used Today

Fairness testing uses specific metrics to measure bias. These aren’t theoretical. They’re mathematical, testable, and increasingly required by regulators.

Group fairness looks at outcomes across demographic groups. One common metric is demographic parity: does the AI produce positive outcomes at the same rate for all groups? For example, if 80% of white applicants get a positive response from a hiring tool, but only 65% of Black applicants do, that’s a red flag. Another is equalized odds, which checks whether true positive rates and false positive rates are the same across groups. A model might be accurate overall but still mislabel women as unqualified more often than men.

Individual fairness is about consistency. If two resumes differ only in gender or name, the AI should treat them the same. This can be measured using embedding similarity scores. If the cosine similarity between outputs for similar inputs drops below 0.85, the model is likely making biased distinctions.

Then there’s the disparate impact ratio. Borrowed from U.S. employment law, it divides the favorable-outcome rate of the disadvantaged group by that of the advantaged group. A ratio below 0.8 (the “four-fifths rule”) signals that the disadvantaged group is being harmed at a rate that may violate laws like NYC Local Law 144 or the EU AI Act. For example, if 90% of white users get a helpful response from a customer service bot, but only 68% of Arabic-speaking users do, the ratio is 0.76, which triggers compliance concerns.

But here’s the catch: these metrics don’t tell the whole story. A model might pass demographic parity but still reinforce harmful stereotypes. A 2024 Google study found that automated fairness scores only matched human judgments 63% of the time when evaluating text generation. That’s why you need more than numbers. You need audits.

How to Conduct an AI Fairness Audit
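The group metrics described above reduce to simple arithmetic on per-group positive-outcome rates. The sketch below is one way to compute them in plain Python. The function names are hypothetical, the sample is made up to match the 90% versus 68% customer service example, and none of it is taken from a particular library.

```python
from typing import Sequence


def selection_rate(outcomes: Sequence[int]) -> float:
    """Share of favourable outcomes (1 = favourable, 0 = unfavourable)."""
    if not outcomes:
        raise ValueError("outcomes must not be empty")
    return sum(outcomes) / len(outcomes)


def demographic_parity_difference(rate_a: float, rate_b: float) -> float:
    """Absolute gap between two groups' selection rates (0 means parity)."""
    return abs(rate_a - rate_b)


def disparate_impact_ratio(rate_a: float, rate_b: float) -> float:
    """Lower selection rate divided by the higher one (1.0 means parity)."""
    high = max(rate_a, rate_b)
    if high == 0:
        raise ValueError("both selection rates are zero; ratio is undefined")
    return min(rate_a, rate_b) / high


# Hypothetical audit sample: 100 responses per group, sized to match the
# 90% vs 68% example in the text. Real audits need real, labelled outcomes.
reference_group = [1] * 90 + [0] * 10
comparison_group = [1] * 68 + [0] * 32

ref_rate = selection_rate(reference_group)
cmp_rate = selection_rate(comparison_group)
gap = demographic_parity_difference(ref_rate, cmp_rate)
ratio = disparate_impact_ratio(ref_rate, cmp_rate)

print(f"selection rates: {ref_rate:.2f} vs {cmp_rate:.2f}")
print(f"demographic parity difference: {gap:.2f}")
print(f"disparate impact ratio: {ratio:.2f}")
print("below the 0.8 threshold" if ratio < 0.8 else "at or above 0.8")
```

Running the same functions on the hiring example (80% versus 65%) gives a ratio of about 0.81, which clears the 0.8 line even though the parity gap is 15 points. That is one reason to report more than one metric.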

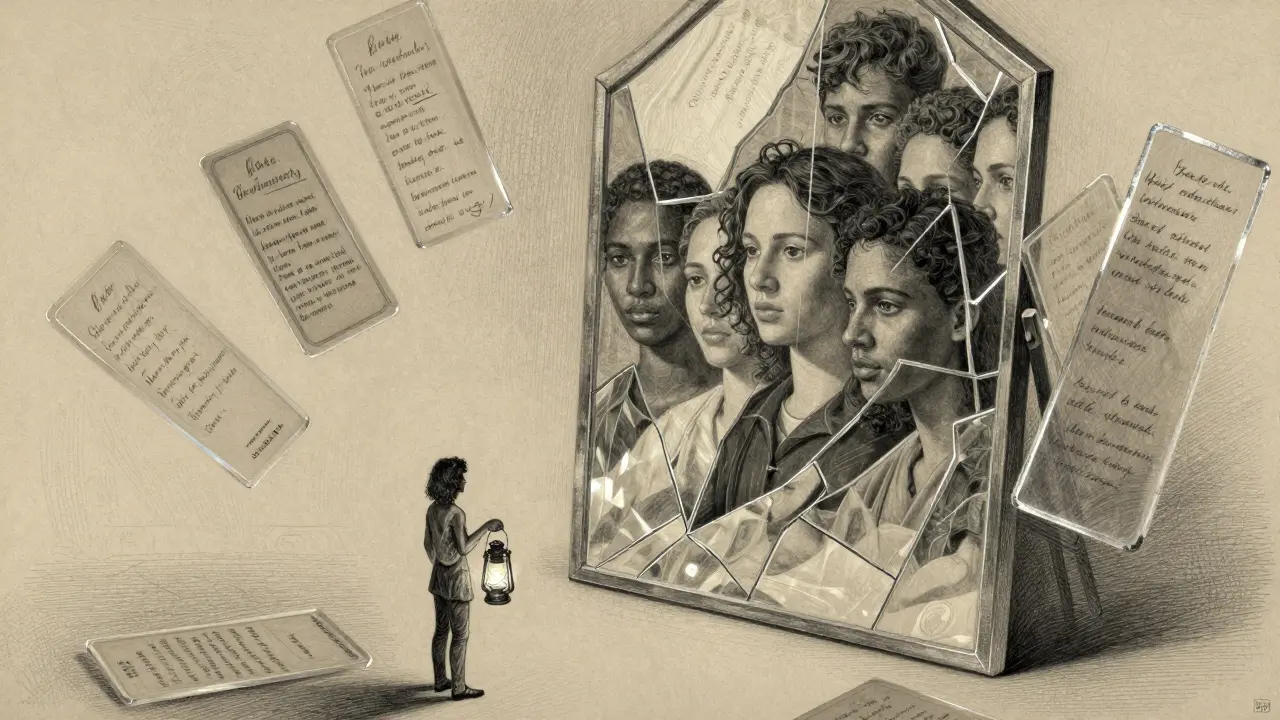

An audit isn’t a one-time check. It’s a process. Here’s how leading companies do it.

First, use specialized datasets. StereoSet tests for stereotypes across gender, race, and religion using over 1,880 prompts. HolisticBias evaluates 14 identity groups with 92% agreement between human annotators. These aren’t just tools. They’re benchmarks. If your model generates “nurse” when prompted with “woman” and “engineer” when prompted with “man,” it’s failing.

Second, run intersectional audits. Bias doesn’t live in single categories. It layers. IBM’s AI Fairness 360 tool found that a healthcare chatbot had a 17% error rate difference between white males and Black females when tested on one dimension. But when tested on both race and gender together? The gap jumped to 41%. That’s the power of intersectionality. Most companies still test one group at a time. That’s not enough.

Third, use model cards. These are public documents that list known biases, limitations, and test results. Google’s Gemini model card, updated in February 2024, openly lists 12 bias issues, including underrepresentation of Indigenous languages. Transparency isn’t just ethical. It builds trust.

Finally, bring in outside voices. Meta’s Responsible AI Community program pays diverse contributors $75/hour to test models. They found 37% more harmful outputs than internal teams. Why? Because people who live the bias see it differently. You can’t test for what you’ve never experienced.
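The intersectional step can be illustrated with a small sketch that computes error rates first by one attribute at a time and then by both together. Everything here is an assumption for illustration: the record fields (`race`, `gender`, `error`), the group labels, and the synthetic counts are invented, and this is not how AI Fairness 360 implements its checks.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Record = Dict[str, object]


def error_rates(records: Iterable[Record], keys: Tuple[str, ...]) -> Dict[tuple, float]:
    """Error rate per subgroup; a subgroup is the tuple of values for `keys`."""
    totals: Dict[tuple, int] = defaultdict(int)
    errors: Dict[tuple, int] = defaultdict(int)
    for rec in records:
        group = tuple(rec[k] for k in keys)
        totals[group] += 1
        errors[group] += int(bool(rec["error"]))
    return {group: errors[group] / totals[group] for group in totals}


def max_gap(rates: Dict[tuple, float]) -> float:
    """Difference between the worst and best subgroup error rates."""
    return max(rates.values()) - min(rates.values())


def make_records(race: str, gender: str, n_errors: int, n_total: int) -> List[Record]:
    """Build synthetic records with a fixed number of errors (illustration only)."""
    return [
        {"race": race, "gender": gender, "error": i < n_errors}
        for i in range(n_total)
    ]


# Synthetic audit log: 10 interactions per subgroup, made-up error counts.
records = (
    make_records("group_a", "men", 1, 10)
    + make_records("group_a", "women", 3, 10)
    + make_records("group_b", "men", 3, 10)
    + make_records("group_b", "women", 5, 10)
)

gap_by_race = max_gap(error_rates(records, ("race",)))
gap_by_gender = max_gap(error_rates(records, ("gender",)))
gap_intersectional = max_gap(error_rates(records, ("race", "gender")))

print(f"gap by race only:        {gap_by_race:.2f}")
print(f"gap by gender only:      {gap_by_gender:.2f}")
print(f"gap by race and gender:  {gap_intersectional:.2f}")
```

In this toy data each single-attribute view shows a 20-point gap, while the combined view shows 40 points. Small subgroups make these rates noisy, so a real audit would also want enough samples per cell to trust the comparison.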

Building a Remediation Plan

Finding bias is only step one. Fixing it is harder. The most effective remediation plans combine three approaches: data, design, and dialogue.

Start with data augmentation. If your training data lacks diversity, add it. NVIDIA’s 2024 research showed that using generative adversarial networks to create synthetic images of underrepresented groups improved minority representation by 29%. That’s not magic. It’s intentional design.

Next, adjust the model’s training process. Use fairness-aware loss functions that penalize biased outputs during training. Adobe’s Firefly image generator reduced skin tone bias by 62% this way, verified by third-party auditors in late 2023.

Then, build feedback loops. Don’t just test once. Let users report biased outputs. Open-source tools like Fairlearn on GitHub help developers assess and mitigate the disparities those reports reveal. Reddit’s r/MachineLearning community has more than 147 active threads in 2024 alone discussing real-world fairness failures and fixes.

And finally, train your team. A 2024 Kaggle survey found that 72% of data scientists needed specialized training to conduct fairness testing. This isn’t something you can wing. It requires understanding statistics, cultural context, and legal frameworks like the EU AI Act.

What’s Changing in 2026

The landscape is shifting fast.

Regulations are tightening. The EU AI Act now requires fairness testing for high-risk systems. Forty-seven U.S. states introduced AI fairness laws between 2023 and 2024. The White House updated its AI bias guidelines in November 2025, requiring quarterly audits for government-contracted systems.

Adoption is rising. In 2022, only 22% of enterprises did formal fairness testing. By 2024, that jumped to 63%. Financial services and healthcare lead the way, since they have the most to lose.

New tools are emerging. The Partnership on AI is set to release the GENAI Fairness Benchmark in Q2 2026. Think of it like MLPerf, but for fairness. It’ll give companies a standard way to compare models.

And the biggest shift? Fairness is moving earlier. In 2023, most companies tested for bias after training. Now, 81% of top AI labs integrate fairness checks during data collection. That’s smarter. It’s cheaper. And it prevents harm before it happens.

What Happens If You Don’t Act

Ignoring fairness testing isn’t a technical oversight. It’s a business risk. Forrester’s 2025 analysis shows organizations that skip fairness testing face 3.2 times higher regulatory risk. They also see 28% lower user trust. In markets with diverse populations, that means lost customers, bad press, and lawsuits.

On the flip side, companies with strong fairness practices see 19% higher customer satisfaction in diverse regions. Adobe, Microsoft, and IBM aren’t doing this because it’s trendy. They’re doing it because it works.

Fairness isn’t about perfection. It’s about progress. It’s about asking: Who is this system helping? Who is it hurting? And what are we willing to change to make it better?

Where to Start

If you’re new to this, don’t wait for a perfect plan. Start small.

- Run a basic StereoSet audit on your text generator.

- Check your disparate impact ratio across two key demographics.

- Share a draft model card with your team, even if it’s just one page.

- Invite one external reviewer from an underrepresented group to test your system.
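Another small first check, alongside the items above, is the paired-input consistency test described under individual fairness: feed the system two inputs that differ only in a name or gender marker, embed both outputs, and compare them. The sketch below assumes you already have output embeddings from some embedding model of your choice; the vectors, pair labels, and function names are hypothetical, and the 0.85 cutoff is the figure cited earlier rather than a universal constant.

```python
import math
from typing import List, Sequence, Tuple

SIMILARITY_THRESHOLD = 0.85  # cutoff cited in the text; tune for your own embedding model


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length, non-zero vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_u * norm_v)


Pair = Tuple[str, Sequence[float], Sequence[float]]


def flag_inconsistent_pairs(
    pairs: Sequence[Pair], threshold: float = SIMILARITY_THRESHOLD
) -> List[str]:
    """Return labels of pairs whose output embeddings fall below the threshold."""
    return [
        label
        for label, emb_a, emb_b in pairs
        if cosine_similarity(emb_a, emb_b) < threshold
    ]


# Toy embeddings standing in for model outputs on name-swapped resumes.
pairs = [
    ("resume_01", [0.90, 0.10, 0.30], [0.88, 0.12, 0.31]),  # near-identical outputs
    ("resume_02", [0.90, 0.10, 0.30], [0.10, 0.90, 0.20]),  # outputs diverge
]

flagged = flag_inconsistent_pairs(pairs)
print("pairs needing human review:", flagged)
```

A flagged pair is a prompt for human review, not proof of bias, because stochastic generation alone can lower similarity between two runs.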

What’s the difference between fairness testing and general AI testing?

General AI testing checks if a model works, like whether it answers questions correctly or generates clear images. Fairness testing checks if it works fairly, for everyone. It asks: Are certain groups being harmed, ignored, or stereotyped? It’s not about accuracy. It’s about equity.

Can automated tools fully detect AI bias?

No. Automated metrics can catch obvious patterns, like gender stereotypes in job descriptions. But they miss subtler harms, like tone, cultural context, or power dynamics. A model might generate a polite response to a Black user but a dismissive one to a disabled user. Humans need to interpret these results. That’s why audits require both tools and people.

Is fairness testing only for big companies?

No. Even small teams using open-source models like Llama or Stable Diffusion need to test for bias. A local clinic using an AI chatbot to triage patients can cause real harm if it misrepresents minority languages or cultural health beliefs. You don’t need a billion-dollar budget, just awareness and a willingness to check your work.

How long does fairness testing take?

For a basic audit using public tools like StereoSet and Fairlearn, expect 2-4 weeks. For a full intersectional audit with human reviewers and model cards, most mature teams take 3-6 months. The key is starting early. Testing at the end of development is expensive and risky. Integrating fairness from day one cuts time and cost.

What happens if my AI fails a fairness audit?

Failing doesn’t mean shutting down. It means learning. Many companies pause deployment, fix the issue, and retest. Adobe, for example, found skin tone bias in Firefly and fixed it before launch. The goal isn’t perfection. It’s improvement. Document what went wrong, share your fix, and move forward. Transparency turns failure into trust.

Are there free tools for fairness testing?

Yes. Fairlearn, AI Fairness 360, and StereoSet are all open-source and free to use. Fairlearn’s GitHub repository has more than 4,200 stars and hundreds of contributors. You don’t need to buy software. You need to invest time. Start with these tools, run a few tests, and see what comes up.

How do regulations like the EU AI Act affect fairness testing?

The EU AI Act classifies generative AI as high-risk if used in hiring, education, or public services. That means companies must document fairness testing, show mitigation efforts, and allow audits. Fines can reach 7% of global annual revenue for the most serious violations. It’s not optional. It’s a legal requirement for any system deployed in Europe. Even U.S.-based companies must comply if they serve EU users.

Can fairness testing improve my AI’s performance?

Yes. Biased models often perform poorly on diverse inputs because they’re trained on narrow data. When you fix bias, you often improve accuracy too. Adobe’s Firefly didn’t just become fairer-it became more reliable across languages and cultures. Fairness isn’t a trade-off. It’s a pathway to better, more robust AI.