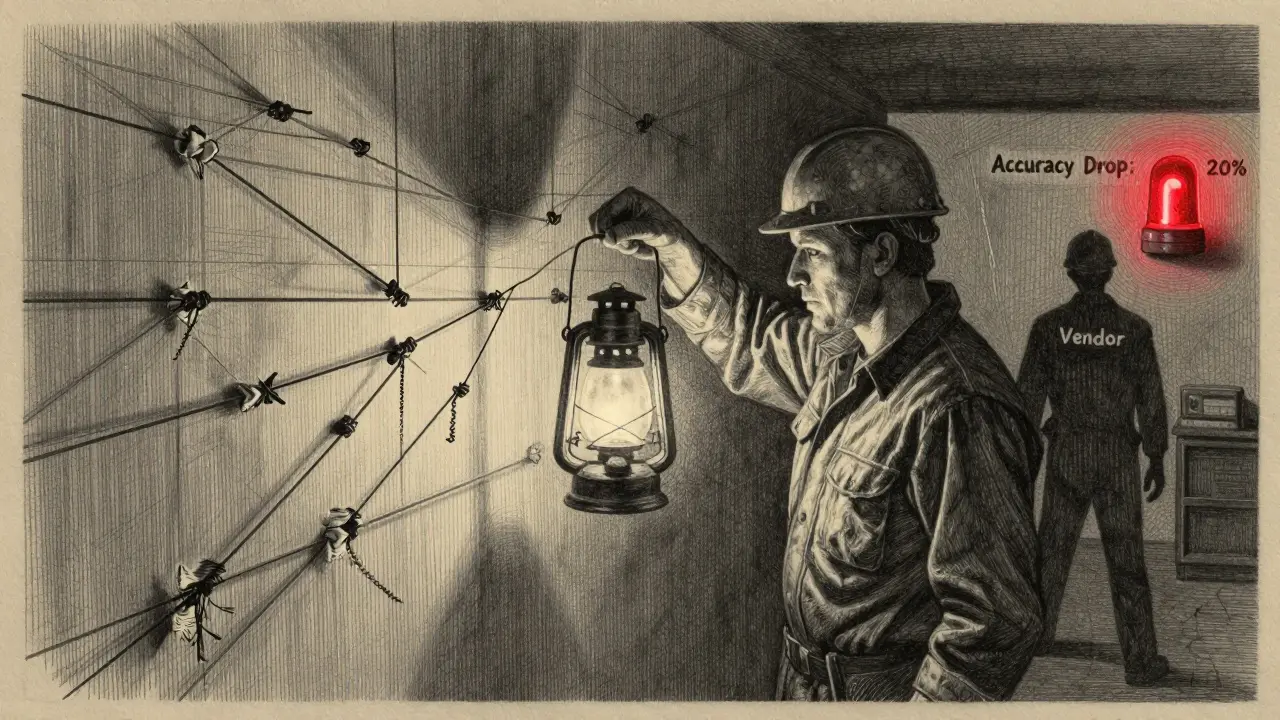

When you plug an LLM into your app, you’re not just buying a tool; you’re signing up for a relationship. And like any relationship, it evolves. Sometimes it improves. Sometimes it breaks. And if you don’t plan for that, your system could go offline with no warning. That’s not hypothetical. In early 2025, a fintech startup lost $1.2 million in trading revenue because a model they relied on quietly lost accuracy on financial jargon. No version change. No email. No deprecation notice. Just silence.

What Is LLM Lifecycle Management, Really?

LLM lifecycle management, often called LLMOps, isn’t just about updating models. It’s about knowing when they’ll change, how they’ll change, and what happens if they break. It includes tracking versions, monitoring performance drift, automating rollbacks, and planning for deprecation. According to Gartner, 78% of Fortune 500 companies now have formal LLMOps frameworks in place. Why? Because without them, LLMs become ticking time bombs.

Think of it like maintaining a car. You don’t just wait for the engine to die. You check the oil, replace the tires, and schedule service before something fails. With LLMs, the stakes are higher. A 5% drop in accuracy in a customer support bot might mean frustrated users. A 20% drop in a financial model could mean lost money or regulatory fines.

API Models: Convenience Comes With Hidden Risks

If you’re using GPT-4, Claude, or Gemini through an API, you’re getting ease of use. But you’re also giving up control. And control matters when models change.

OpenAI used to be the worst offender. Before June 2025, they’d update models silently. GPT-3.5-turbo changed behavior in January 2025 without a version bump. Users didn’t know until their chatbots started giving wrong answers. Now, OpenAI’s API v2025-06-12 finally introduced 180-day deprecation notices and immutable version endpoints. That’s a big step, but it came after years of complaints.
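One practical consequence of immutable version endpoints is that you can check your configuration for model IDs that are not pinned. The sketch below is a heuristic, not a provider API: it assumes a pinned snapshot ID ends in a date or revision suffix (as in `gpt-4-0613`), and the helper names `is_pinned` and `unpinned` are hypothetical. The Gemini ID format is likewise an assumption based on the "1.5 Pro-002" naming.

```python
import re

# Assumption: a pinned snapshot ID ends in a date or revision suffix such as
# "-0613", "-20250612", "-2025-06-12", or "-002". Real providers differ, so
# treat this pattern as a starting point and adjust it to your vendors.
_SNAPSHOT_SUFFIX = re.compile(r"-(\d{4}-\d{2}-\d{2}|\d{8}|\d{4}|\d{3})$")


def is_pinned(model_id: str) -> bool:
    """Return True if the ID looks like a fixed snapshot, not a floating alias."""
    return bool(_SNAPSHOT_SUFFIX.search(model_id))


def unpinned(model_ids):
    """Return the IDs that may change behavior without a version bump."""
    return [m for m in model_ids if not is_pinned(m)]


if __name__ == "__main__":
    configured = ["gpt-4-0613", "gpt-3.5-turbo", "gemini-1.5-pro-002"]
    for model_id in unpinned(configured):
        print(f"WARNING: {model_id} is a floating alias; pin a snapshot")
```

Running a check like this in CI makes an unpinned alias a build failure instead of a production surprise.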

Google’s Gemini follows a clearer schedule: major versions (like 1.0 to 1.5) get 24 months of full support, then 12 months of security-only updates. Minor tweaks (like 1.5 Pro to 1.5 Pro-002) get 9 months. That’s predictable. But if you’re relying on a minor version and Google drops it early? You’re out of luck.
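To turn a schedule like this into dates you can put on a calendar, a small helper is enough. This is a minimal sketch that encodes only the windows quoted above (24 months plus 12 for major versions, 9 months for minor ones). The release date in the example is hypothetical, and `support_windows` is an illustrative name, not part of any Google tooling.

```python
import calendar
from datetime import date


def add_months(d: date, months: int) -> date:
    """Add calendar months, clamping the day to the end of shorter months."""
    index = d.year * 12 + (d.month - 1) + months
    year, month0 = divmod(index, 12)
    last_day = calendar.monthrange(year, month0 + 1)[1]
    return date(year, month0 + 1, min(d.day, last_day))


def support_windows(released: date, kind: str) -> dict:
    """Map a release date to end-of-support dates under the quoted policy."""
    if kind == "major":
        full = add_months(released, 24)           # 24 months of full support
        return {
            "full_support_ends": full,
            "security_only_ends": add_months(full, 12),  # then 12 more months
        }
    if kind == "minor":
        return {"full_support_ends": add_months(released, 9)}
    raise ValueError(f"unknown release kind: {kind!r}")


if __name__ == "__main__":
    # Hypothetical release date, for illustration only.
    print(support_windows(date(2024, 5, 14), "major"))
    print(support_windows(date(2024, 5, 14), "minor"))
```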

Anthropic’s Claude is the opposite. They don’t version at all. Instead, they push continuous updates. The model you used last month? It’s not the same today. That’s great for performance, but terrible for compliance. Financial firms and healthcare apps need to document exactly which model they used when. With no versioning, that’s impossible.

And here’s the kicker: only 33% of enterprise users feel they get enough notice about updates from proprietary providers. Anthropic scores best at 87% notification compliance. OpenAI? 62%. Google? 58%. If you’re using one of these APIs, you’re gambling on their communication habits.

Open-Source LLMs: More Control, More Work

Meta’s Llama 3 is the most popular open-source LLM. You can run it on your own servers. You control the updates. You decide when to deprecate. Sounds perfect, right?

Except you now have to build LLMOps from scratch.

Organizations using Llama 3 spend 37% more engineering hours on lifecycle management than those using APIs. Why? Because you need to handle everything: training data tracking, versioning, monitoring, rollback, security patches, and compliance audits. There’s no vendor to call when your model starts hallucinating medical advice.

And documentation? Meta’s Llama docs score just 67% completeness in audits. Google’s Vertex AI? 98%. If you’re a small team, that gap can be deadly. You’ll need MLflow or LangChain to manage versions. You’ll need to set up monitoring for latency, bias, and accuracy drift. You’ll need to test every update against your use case before deploying.
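Testing every update against your use case can start as a small golden-set gate. The sketch below assumes a model is any callable from prompt to answer, scores by case-insensitive exact match, and allows a 2-point tolerance. All three are illustrative choices, and the function names are hypothetical.

```python
from typing import Callable, Sequence, Tuple

Model = Callable[[str], str]          # assumption: prompt in, answer out
Case = Tuple[str, str]                # (prompt, expected answer)


def accuracy(model: Model, cases: Sequence[Case]) -> float:
    """Fraction of golden cases the model answers exactly (case-insensitive)."""
    if not cases:
        raise ValueError("golden set is empty")
    hits = sum(
        1 for prompt, expected in cases
        if model(prompt).strip().lower() == expected.strip().lower()
    )
    return hits / len(cases)


def safe_to_deploy(current: Model, candidate: Model,
                   cases: Sequence[Case], tolerance: float = 0.02) -> bool:
    """Allow the update only if it does not regress beyond the tolerance."""
    return accuracy(candidate, cases) >= accuracy(current, cases) - tolerance


if __name__ == "__main__":
    golden = [("What does APR stand for?", "annual percentage rate")]
    current = lambda prompt: "Annual Percentage Rate"
    candidate = lambda prompt: "I am not sure"
    print(safe_to_deploy(current, candidate, golden))
```

Exact match is too strict for free-form answers. In practice you would swap in a scorer suited to your task, but the gate itself stays the same.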

But here’s the upside: you own the timeline. If your legal team says you must keep using Llama 3 8B until Q4 2026, you can. No vendor can force you to upgrade. That’s why companies like Shopify built their own “Model Guardian” system, which automates tests across 42 LLM versions daily. They reduced lifecycle overhead by 64%. But it took them months to build it.

Deprecation Isn’t Just a Feature: It’s a Legal Requirement

In 2025, it’s not optional to manage deprecation. The EU AI Act requires documented lifecycle management for high-risk AI systems. The U.S. NIST AI Risk Management Framework is voluntary, but it sets the expectation that model behavior is documented and traceable. If you can’t prove which version you used when, you could face fines.

MIT’s AI lab found 147 cases where deprecated models were still running in production 9+ months after being pulled. That’s not negligence; it’s systemic. Companies assume “it’ll keep working.” It doesn’t. Models degrade. Data shifts. Security holes appear.
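Proving which version you used when comes down to writing one record per model call. The sketch below is one possible shape for such a record, not a format required by any regulation. The field names are assumptions, and prompts and responses are stored as SHA-256 hashes so the log itself holds no sensitive text.

```python
import hashlib
import io
import json
from datetime import datetime, timezone


def _sha256(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def audit_record(model_id: str, prompt: str, response: str, when=None) -> dict:
    """Build one audit entry tying a call to an exact model version and time."""
    when = when or datetime.now(timezone.utc)
    return {
        "timestamp": when.isoformat(),
        "model_id": model_id,                 # the pinned version, not an alias
        "prompt_sha256": _sha256(prompt),
        "response_sha256": _sha256(response),
    }


def append_record(stream, record: dict) -> None:
    """Append the record as one JSON line (JSONL) to any writable text stream."""
    stream.write(json.dumps(record, sort_keys=True) + "\n")


if __name__ == "__main__":
    log = io.StringIO()  # stand-in for an append-only file or log sink
    append_record(log, audit_record("gpt-4-0613", "Summarize clause 4.", "Clause 4 says..."))
    print(log.getvalue(), end="")
```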

Gartner estimates this “deprecation debt” costs enterprises $2.1 billion annually. Financial services are hit hardest. Banks can’t afford to use a model that suddenly misreads loan terms. Retailers? They might get away with it. But if your model handles insurance claims or medical triage? You’re on the hook.

What You Should Do Right Now

If you’re using LLMs in production, here’s your checklist:

- Map your models. List every LLM you’re using, API or open-source. Note the version. Where is it deployed? Who owns it?

- Check deprecation policies. OpenAI: 180-day notice. Google: 24 months of full support plus 12 months of security-only updates for major versions, 9 months for minor ones. Anthropic: none. Llama: you decide. Know your exposure.

- Set up monitoring. Track at least 10 metrics, including latency, accuracy, bias, cost, and drift. Use MLflow or Vertex AI’s Model Health Scores. If accuracy drops below 92%, trigger an alert.

- Build rollback plans. Can you revert to a previous version in 15 minutes? If not, you’re at risk. Test this monthly.

- Document everything. Training data sources. Hyperparameters. Version history. Audit trails. If you’re audited tomorrow, can you prove compliance?
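The first three checklist items can be sketched as a small inventory with two checks. This is a minimal illustration under stated assumptions: the `ModelEntry` fields mirror the questions in the checklist, the 180-day horizon and 92% threshold are taken from the text above, and every entry and date in the example is hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass(frozen=True)
class ModelEntry:
    name: str                 # which LLM
    version: str              # which exact version
    deployment: str           # where it runs
    owner: str                # who is responsible
    sunset: Optional[date]    # announced end of support; None if self-hosted


def expiring(inventory: List[ModelEntry], today: date,
             horizon_days: int = 180) -> List[ModelEntry]:
    """Entries whose sunset falls within the horizon, soonest first."""
    flagged = [
        e for e in inventory
        if e.sunset is not None and (e.sunset - today).days <= horizon_days
    ]
    return sorted(flagged, key=lambda e: e.sunset)


def accuracy_alert(accuracy: float, threshold: float = 0.92) -> bool:
    """True when measured accuracy has fallen below the alert threshold."""
    return accuracy < threshold


if __name__ == "__main__":
    inventory = [  # hypothetical entries and dates
        ModelEntry("gpt-4", "gpt-4-0613", "support-bot", "team-cx", date(2025, 12, 1)),
        ModelEntry("llama-3", "8b", "on-prem", "team-ml", None),
    ]
    for entry in expiring(inventory, today=date(2025, 9, 1)):
        print(f"{entry.version} ({entry.owner}) sunsets on {entry.sunset}")
```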

And here’s the hard truth: if you’re using an API without versioning, you’re not managing risk; you’re outsourcing it. And vendors don’t care about your downtime. They care about their next release.

The Future: Standardization Is Coming

The LLM Lifecycle Management Consortium, launched in May 2025, includes 47 companies working on common deprecation standards. The Linux Foundation’s Model Lifecycle Specification v1.0 is already shaping how tools like MLflow handle versioning.

By 2026, Gartner predicts 90% of enterprises will require standardized deprecation timelines in vendor contracts. That means if you’re buying an API and they won’t give you a 90-day notice? You walk away.

Open-source will keep growing, not because it’s easier, but because it’s the only way to truly own your model’s future. The trade-off? More work now, less surprise later.

Final Thought: Control vs Convenience

APIs are fast. Open-source is safe. Neither is perfect. But if you’re building something that matters, something that affects people’s money, health, or safety, you can’t afford to wait for a vendor to notify you that your model is dead.

Plan for deprecation like it’s a scheduled maintenance window. Because one day, it will be.

What happens if I don’t manage LLM deprecation?

Your system could fail without warning. Models degrade over time due to data drift, security vulnerabilities, or sudden API changes. In 2025, 63% of production LLMs experienced performance drops within six months without monitoring. Some companies lost millions when deprecated models stopped working. Regulatory fines and compliance failures are also common if you can’t prove which model version was used when.

Which is better: API or open-source LLMs for lifecycle management?

It depends on your needs. APIs (like GPT-4 or Gemini) offer ease of use and built-in monitoring but come with unpredictable deprecation timelines and limited control over which version you run. Open-source (like Llama 3) gives you full control over updates and deprecation but requires you to build your own LLMOps pipeline. If you need compliance, audit trails, and long-term stability, open-source is better. If you need speed and don’t have engineering bandwidth, APIs are faster, but riskier.

How long do LLMs usually last before being deprecated?

It varies by provider. OpenAI now offers 180-day notice before deprecation. Google gives major versions 24 months of full support, followed by 12 months of security-only updates. Anthropic doesn’t deprecate versions; they update continuously. Open-source models like Llama 3 last as long as you maintain them. Most enterprise contracts now require at least 12 months of support before deprecation.

Can I trust LLM providers to notify me about updates?

No, not reliably. A 2024 Stanford study found only 33% of enterprise users felt they got adequate notice. Anthropic scores highest at 87%, but OpenAI and Google lag at 62% and 58%. Many companies have been burned by silent model changes. Always assume you’ll get no warning. Build monitoring and versioning into your system, not your expectations.

What tools help manage LLM lifecycles?

For open-source: MLflow (12,400+ GitHub stars) and LangChain handle versioning and tracking. For APIs: Google’s Vertex AI, AWS Bedrock, and Azure AI offer built-in monitoring and rollback. WhyLabs and Arize provide third-party monitoring for any model. If you’re using Llama 3, combine MLflow with Prometheus for metrics and Grafana for dashboards. The key is automation: test every update before deployment.
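Whichever tool stores your versions, rollback reduces to keeping the deployment history and stepping back one entry. The class below is a plain in-memory sketch of that idea. `DeploymentHistory` is a hypothetical name, it does not use MLflow or any cloud API, and a real system would persist the history and then redirect traffic.

```python
from typing import List, Optional


class DeploymentHistory:
    """Ordered record of deployed model versions with one-step rollback."""

    def __init__(self) -> None:
        self._versions: List[str] = []

    def deploy(self, version: str) -> None:
        """Record a new version as the active one."""
        self._versions.append(version)

    @property
    def active(self) -> Optional[str]:
        """The currently active version, or None if nothing is deployed."""
        return self._versions[-1] if self._versions else None

    def rollback(self) -> str:
        """Drop the active version and return the one that is active again."""
        if len(self._versions) < 2:
            raise RuntimeError("no previous version to roll back to")
        self._versions.pop()
        return self._versions[-1]


if __name__ == "__main__":
    history = DeploymentHistory()
    history.deploy("v1")
    history.deploy("v2")
    print(history.rollback())  # back on the previous version
```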

Is LLMOps only for big companies?

No. Even small teams need basic lifecycle management. You don’t need 16 A100 GPUs. Start with version tracking in MLflow, monitor accuracy and latency with free tools like Prometheus, and set up alerts if performance drops 10%. If you’re using an API, always pin to a version (e.g., gpt-4-0613). The goal isn’t perfection; it’s avoiding surprise failures.
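An alert on a 10% performance drop needs nothing more than a rolling window. The sketch below reads "drops 10%" as a relative drop from a baseline accuracy you measured at deployment, which is one possible interpretation. The class name, window size, and the choice to wait for a full window before alerting are all assumptions.

```python
from collections import deque
from typing import Optional


class DriftMonitor:
    """Rolling accuracy over the last `window` graded responses."""

    def __init__(self, baseline: float, window: int = 100,
                 max_relative_drop: float = 0.10) -> None:
        self.baseline = baseline
        self.max_relative_drop = max_relative_drop
        self._results = deque(maxlen=window)  # old results fall off the front

    def record(self, correct: bool) -> None:
        """Store whether one sampled response was graded correct."""
        self._results.append(1 if correct else 0)

    @property
    def rolling_accuracy(self) -> Optional[float]:
        if not self._results:
            return None
        return sum(self._results) / len(self._results)

    def alert(self) -> bool:
        """True once a full window sits more than the allowed drop below baseline."""
        if len(self._results) < self._results.maxlen:
            return False  # not enough data yet; avoids noisy early alerts
        return self.rolling_accuracy < self.baseline * (1 - self.max_relative_drop)


if __name__ == "__main__":
    monitor = DriftMonitor(baseline=0.95, window=10)
    for ok in [True] * 6 + [False] * 4:
        monitor.record(ok)
    print(monitor.rolling_accuracy, monitor.alert())
```

Grading a small random sample of production responses each day is usually enough to feed a monitor like this.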