Why Your Multimodal AI Needs Human Review

Imagine an AI generates a medical report that says a tumor is benign, backed by a scan image and a voice summary, all perfectly formatted, with no typos and no glitches. But the tumor is actually malignant. Nothing in the pipeline crashed or garbled its output. The AI simply got the context wrong. This isn’t science fiction: multimodal AI is already being used in clinical settings, where this kind of error is a real risk.

Multimodal generative AI combines text, images, audio, and video to create outputs that feel real, even when they’re wrong. These systems don’t think like humans. They stitch together patterns from massive datasets. And because they operate in hidden, shared representation spaces where text and image features blend, you can’t simply ask them, "Why did you say that?"

Automated checks won’t catch this. A rule-based system might flag a misspelled word, but it won’t notice that the image of a lung scan doesn’t match the text description. That’s where human review comes in: not as a backup, but as a necessary layer of defense.

The Hidden Flaws in Flawless Outputs

The biggest danger isn’t obvious errors. It’s outputs that are highly fluent, visually coherent, and contextually plausible, yet still wrong. A pharmaceutical company using AI to analyze lab results might get a perfectly written summary that mislabels a chemical compound. A manufacturing line using AI to inspect products might approve a cracked circuit board because the image looks "normal" to the model.

These aren’t bugs. They’re blind spots in the AI’s training. Models like CLIP, FLAVA, and Gemini Pro are built to find patterns, not truth. They learn from what’s common, not what’s correct. And when multiple modalities are involved (say, a video of a machine humming, a sensor reading, and a text log), the AI can generate a convincing story that ties them all together, even if the story is false.

According to TetraScience’s 2024 pilot in biopharma, traditional automated QC systems catch only 70-75% of errors. Human-reviewed multimodal systems hit 90%. That 15-20% gap? That’s where the real risks live.

What Makes a Good AI Quality Checklist?

A good checklist doesn’t just say "Check the image." It asks: "Does the image match the text? Is the source data traceable? Are the labels consistent across modalities?"

TetraScience’s approach, built around the 5M QC framework (man, machine, method, material, measurement), shows how to structure this:

- Man: Who reviews? Are they trained on the ontology? Do they know the domain?

- Machine: What AI tools generated the output? Were they grounded in verified sources?

- Method: What steps were followed to generate and verify? Was there a reasoning chain?

- Material: What data was used? Is it from a trusted, labeled source?

- Measurement: How do you measure success? F1 score? Error rate? Regulatory compliance?

Each item on the checklist should map to one of these. For example:

- Verify that every text claim in the output has a corresponding image, audio, or sensor reading that supports it.

- Confirm that all visual elements use the same scale, orientation, and labeling convention.

- Check that timestamps across video, audio, and sensor logs align within ±0.5 seconds.

- Ensure that any entity (e.g., drug name, part number) appears identically across all modalities.

- Trace each output back to its original input data: no ungrounded claims allowed.
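
Two of these checklist items are mechanical enough to run as an automated pre-screen before a reviewer looks. The sketch below is a minimal illustration, not TetraScience’s implementation: the `ModalityRecord` type and its fields are hypothetical, and it interprets "align within ±0.5 seconds" as every pair of modality timestamps differing by at most 0.5 s.

```python
from dataclasses import dataclass

# Hypothetical per-modality record; field names are illustrative assumptions.
@dataclass
class ModalityRecord:
    modality: str        # e.g. "video", "audio", "sensor"
    event_time_s: float  # when this modality says the event happened, in seconds
    entities: set[str]   # entity strings found in this modality (drug names, part numbers)

def timestamps_aligned(records: list[ModalityRecord], tolerance_s: float = 0.5) -> bool:
    """True if every pair of event timestamps differs by at most tolerance_s."""
    if not records:
        return True  # nothing to compare
    times = [r.event_time_s for r in records]
    return max(times) - min(times) <= tolerance_s

def inconsistent_entities(records: list[ModalityRecord]) -> list[str]:
    """Entities that do not appear, spelled identically, in every modality."""
    if not records:
        return []
    entity_sets = [r.entities for r in records]
    seen_anywhere = set().union(*entity_sets)
    seen_everywhere = set.intersection(*entity_sets)
    return sorted(seen_anywhere - seen_everywhere)

records = [
    ModalityRecord("video", 12.0, {"PN-4471"}),
    ModalityRecord("audio", 12.3, {"PN-4471"}),
    ModalityRecord("sensor", 12.9, {"PN-4471", "PN-4417"}),
]
print(timestamps_aligned(records))     # False: 12.9 - 12.0 exceeds 0.5 s
print(inconsistent_entities(records))  # ['PN-4417']
```

Exact string matching is deliberately strict: "PN-4471" and "pn 4471" count as different entities, which is the point of the "appears identically" rule. Anything these checks flag still goes to a human.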

These aren’t suggestions. They’re requirements in regulated industries. The FDA now requires human-in-the-loop verification for all AI-generated content in biopharma submissions.

How to Build a Review Workflow That Actually Works

Setting up human review sounds simple. You just hire people to look at outputs, right? Wrong.

One quality engineer at Siemens tried reviewing 150 AI-generated reports a day. After two weeks, their error detection rate dropped from 92% to 67%. Why? Alert fatigue. When you’re primed to spot problems but see 100 perfect-looking outputs in a row, your attention drifts and you stop really looking.

The solution? Prioritize.

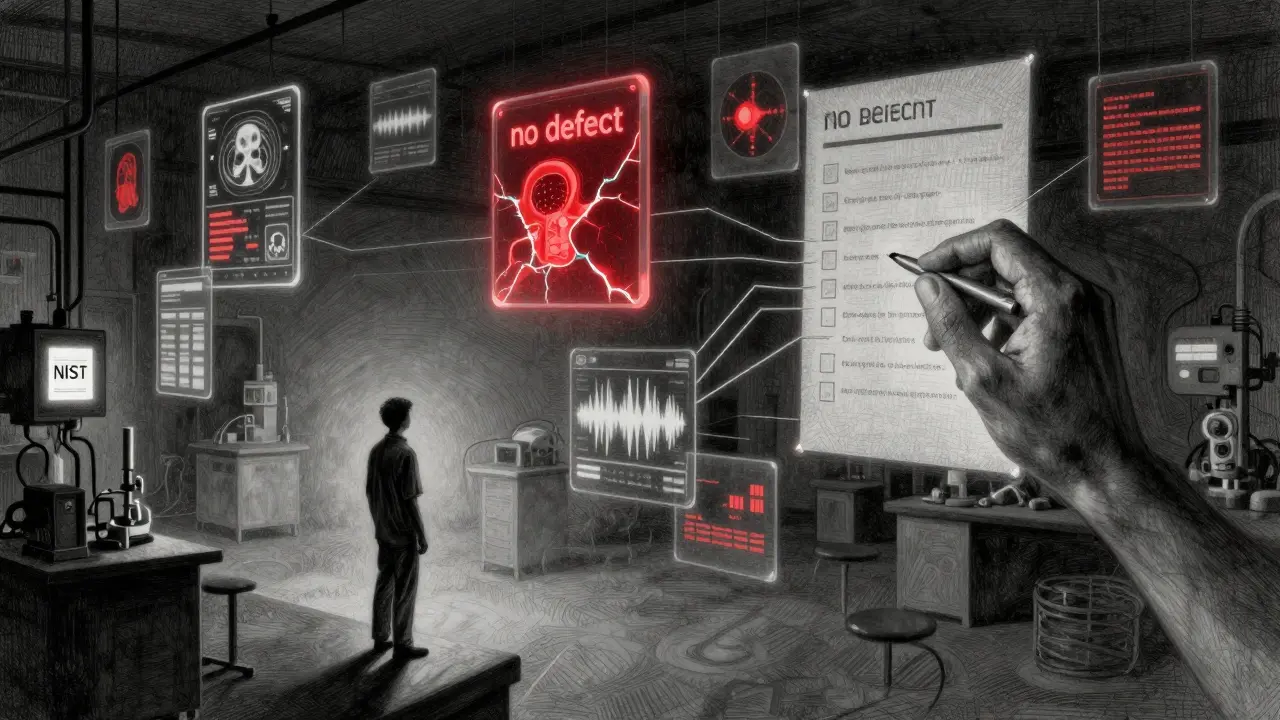

Use AI to score outputs by risk. Flag anything that:

- Has conflicting signals between modalities (e.g., text says "no defects," image shows cracks)

- Uses low-confidence sources (e.g., user-uploaded images without metadata)

- Generates new entities not in your approved ontology (e.g., a made-up drug name)

- Has no traceable input data

Only send high-risk items to humans. AuxilioBits found this cut review volume by 45% while keeping defect detection at 99.2%.
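
The routing rule above can be sketched as a handful of explicit checks, one per flag in the list. This is a hypothetical illustration, not AuxilioBits’ system: the `Output` fields, the `APPROVED_ONTOLOGY` set, and the choice to send any flagged item to a human are assumptions, and a production system would more likely combine a model-produced risk score with rules like these.

```python
from dataclasses import dataclass, field

# Hypothetical approved ontology: the only entity names outputs may use.
APPROVED_ONTOLOGY = {"PN-4471", "PN-4472", "micro-crack"}

@dataclass
class Output:
    output_id: str
    text_reports_defect: bool   # what the text modality claims
    image_shows_defect: bool    # what the vision check detected
    source_has_metadata: bool   # False for e.g. user uploads without metadata
    entities: set[str] = field(default_factory=set)
    input_ids: list[str] = field(default_factory=list)  # links back to source data

def risk_flags(o: Output) -> list[str]:
    """Return the reasons (if any) this output should go to a human reviewer."""
    flags = []
    if o.text_reports_defect != o.image_shows_defect:
        flags.append("cross-modal conflict")
    if not o.source_has_metadata:
        flags.append("low-confidence source")
    unknown = o.entities - APPROVED_ONTOLOGY
    if unknown:
        flags.append("entities outside ontology: " + ", ".join(sorted(unknown)))
    if not o.input_ids:
        flags.append("no traceable input")
    return flags

def triage(outputs: list[Output]) -> tuple[list[str], list[str]]:
    """Split output IDs into (needs_human_review, auto_pass)."""
    needs_review, auto_pass = [], []
    for o in outputs:
        (needs_review if risk_flags(o) else auto_pass).append(o.output_id)
    return needs_review, auto_pass

batch = [
    Output("r1", False, False, True, {"PN-4471"}, ["img-001"]),
    Output("r2", False, True, True, {"PN-4471"}, ["img-002"]),  # text: no defect; image: crack
    Output("r3", False, False, False, {"PN-9999"}, []),
]
print(triage(batch))  # (['r2', 'r3'], ['r1'])
```

Returning the reasons, not just a yes/no, lets the reviewer start from what looked wrong instead of re-checking everything.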

Also, give reviewers tools. Real-time reasoning chain visualization (like the one TetraScience launched in October 2024) lets reviewers see: "This output was generated from Image A, Audio B, and Text C. Here’s how the model interpreted each. Here’s the final decision path." That cuts review time by 43%, according to Aalto University.

When Human Review Doesn’t Work

Human review isn’t magic. It has limits.

First, you need clear, stable sources of truth. If your training data is messy, outdated, or incomplete, no human can fix that. You can’t verify what doesn’t exist.

Second, it doesn’t scale for high-volume, low-margin tasks. If you’re generating 10,000 product images an hour for an e-commerce site, human review isn’t cost-effective. Automated filters, outlier detection, and confidence thresholds are better here.

Third, bias creeps in. MIT’s 2025 AI Ethics Report warns that without standardized checklists, human reviewers can unconsciously favor outputs that match their expectations. One reviewer might trust a certain brand’s scan more than another’s. That’s not quality control. That’s amplifying bias.

And fourth, training takes time. TetraScience spent 3-6 months building ontologies before even touching an AI model. That’s not something you rush.
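
For the high-volume case in the second point, the automated alternative can start as simply as a confidence threshold plus an outlier test. The sketch assumes each item carries a model confidence and a hypothetical per-item quality score (for example, a text-image similarity). It uses the median-absolute-deviation "modified z-score" as one common robust outlier test; the 0.8 and 3.5 cutoffs are illustrative defaults, not values from the source.

```python
import statistics

def auto_filter(items, min_confidence=0.8, z_cutoff=3.5):
    """items: list of (item_id, confidence, score) tuples.

    Rejects items whose confidence is below min_confidence or whose score is an
    outlier by the modified z-score 0.6745 * (x - median) / MAD.
    Returns (kept_ids, rejected_ids).
    """
    scores = [score for _, _, score in items]
    med = statistics.median(scores)
    mad = statistics.median([abs(s - med) for s in scores])
    kept, rejected = [], []
    for item_id, confidence, score in items:
        # If MAD is 0 (most scores identical), skip the outlier test rather than divide by zero.
        z = 0.6745 * (score - med) / mad if mad > 0 else 0.0
        if confidence < min_confidence or abs(z) > z_cutoff:
            rejected.append(item_id)
        else:
            kept.append(item_id)
    return kept, rejected

items = [
    ("a", 0.95, 0.81),
    ("b", 0.91, 0.79),
    ("c", 0.97, 0.80),
    ("d", 0.60, 0.82),  # low confidence
    ("e", 0.93, 0.20),  # score far from the rest
]
print(auto_filter(items))  # (['a', 'b', 'c'], ['d', 'e'])
```

Median and MAD are used instead of mean and standard deviation because a single extreme item would otherwise inflate the spread and partly hide itself.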

What Industries Are Doing This Right

Biopharmaceuticals lead the pack. Why? Because the cost of error is life or death. The FDA’s 2024 guidance made human review mandatory. Companies using TetraScience’s system saw a 63% drop in regulatory non-conformances.

Manufacturing is close behind. AuxilioBits’ case studies show multimodal AI with human review catching defects traditional machine vision missed-like micro-cracks in turbine blades, or misaligned wiring in circuit boards. False negatives dropped by 37%.

Consumer tech? Not so much. Apps that generate memes or edit photos don’t need this level of rigor. But if you’re building AI for legal documents, medical imaging, or industrial automation, this isn’t optional. It’s compliance.

Gartner projects that 65% of enterprises will use hybrid verification (AI + human) by 2025, up from 22% in 2024. That’s more than a trend. It’s becoming a requirement.

What You Need to Get Started

You don’t need a team of 20 data scientists. But you do need three things:

- A defined domain ontology: a shared dictionary of terms, relationships, and rules. What’s a "defect" in your context? What’s a "valid" sensor reading? Write it down.

- A verification pipeline: a system that logs every input, how it was processed, and how the output was generated. No black boxes.

- A human review checklist: simple, clear, and tied to your ontology. Test it with real users. Fix it when it’s confusing.
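
For the verification pipeline, even an append-only log goes a long way toward "no black boxes." The sketch below is one hypothetical layout: JSON Lines records holding SHA-256 hashes of each input, the processing steps, and a hash of the output, so any output can be traced back to the exact inputs that produced it. The field names, the `model_id` value, and the step labels are all illustrative assumptions.

```python
import hashlib
import json
import os
import tempfile
import time
from typing import Optional

def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()

def log_generation(log_path: str, inputs: dict[str, bytes], model_id: str,
                   steps: list[str], output_text: str) -> dict:
    """Append one provenance record to a JSON Lines log and return it."""
    record = {
        "logged_at": time.time(),
        "model_id": model_id,
        "input_sha256": {name: sha256_hex(blob) for name, blob in inputs.items()},
        "steps": steps,
        "output_sha256": sha256_hex(output_text.encode("utf-8")),
    }
    with open(log_path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record, sort_keys=True) + "\n")
    return record

def trace(log_path: str, output_text: str) -> Optional[dict]:
    """Find the record that produced output_text; None means the output is untraceable."""
    target = sha256_hex(output_text.encode("utf-8"))
    with open(log_path, encoding="utf-8") as f:
        for line in f:
            record = json.loads(line)
            if record["output_sha256"] == target:
                return record
    return None

# Usage with a throwaway log file.
log_path = os.path.join(tempfile.mkdtemp(), "provenance.jsonl")
log_generation(log_path, {"scan.png": b"<image bytes>", "notes.txt": b"no defects"},
               model_id="vision-model-v1", steps=["caption image", "compare with notes"],
               output_text="Report: no defects found.")
print(sorted(trace(log_path, "Report: no defects found.")["input_sha256"]))  # ['notes.txt', 'scan.png']
print(trace(log_path, "A report nobody logged."))  # None
```

Hashing the inputs, rather than copying them into the log, keeps the log small while still proving which exact files an output came from.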

Start small. Pick one high-risk output type. Maybe it’s the AI-generated safety report for your factory floor. Build the checklist. Train three reviewers. Run it for a month. Measure the errors you catch. Then expand.
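
Measuring "the errors you catch" requires a set of known errors to compare against, for example from a later audit or from errors deliberately seeded into the pilot batch (both are assumptions about your setup). Given that, the detection rate in the Siemens example corresponds to what is usually called recall, and precision and F1 follow from the same counts. A minimal sketch with hypothetical report IDs:

```python
def review_metrics(flagged: set[str], actual_errors: set[str]) -> dict[str, float]:
    """Compare reviewer-flagged output IDs against known erroneous output IDs."""
    tp = len(flagged & actual_errors)  # real errors the reviewers caught
    fp = len(flagged - actual_errors)  # false alarms
    fn = len(actual_errors - flagged)  # real errors that slipped through
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0  # a.k.a. detection rate
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}

flagged = {"r01", "r02", "r03", "r04"}
actual_errors = {"r01", "r02", "r03", "r05", "r06"}
m = review_metrics(flagged, actual_errors)
print({k: round(v, 3) for k, v in m.items()})  # {'precision': 0.75, 'recall': 0.6, 'f1': 0.667}
```

Tracking recall week by week is also how you would notice a fatigue-driven drop like the one in the Siemens example before it becomes a missed defect.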

And don’t use open-source templates unless they’re from a trusted source. GitHub checklists average a 3.1/5 rating for clarity. TetraScience’s framework? 4.5/5. You’re not saving time by copying something vague.

What’s Coming Next

NIST is rolling out its AI Verification Framework (Version 2.0) in Q2 2025. It will standardize multimodal output checks across seven critical dimensions-like consistency, traceability, and bias detection. If you’re building this now, you’re ahead of the curve.

Meta AI’s November 2024 update now flags 89% of risky outputs before they even reach a human. That’s huge. It means reviewers aren’t drowning in noise anymore.

The bigger picture? By 2027, Gartner predicts 85% of enterprise multimodal AI deployments will require human review for high-risk outputs. The question isn’t whether you’ll need it. It’s whether you’ve built it right.

Final Thought: Trust, But Verify

Multimodal AI is powerful. But power without oversight is dangerous. The goal isn’t to replace humans. It’s to make them better. A checklist isn’t bureaucracy. It’s a safety net. A review process isn’t slow. It’s smart.

AI can generate a thousand reports in a minute. But only a human can ask: "Does this make sense? Is this true? And what happens if we’re wrong?"

That’s the edge you can’t automate.