When you use AI to write code really fast, with simple prompts like "make a login form that saves emails," you’re not just saving time. You’re also inviting legal risk. Vibe coding, where developers talk to AI tools like GitHub Copilot or ChatGPT to generate code on the fly, is now used by 78% of developers. But only 22% of teams have a legal review process for when that code touches customer data. That gap is costing companies millions.

Why Vibe Coding Creates Legal Blind Spots

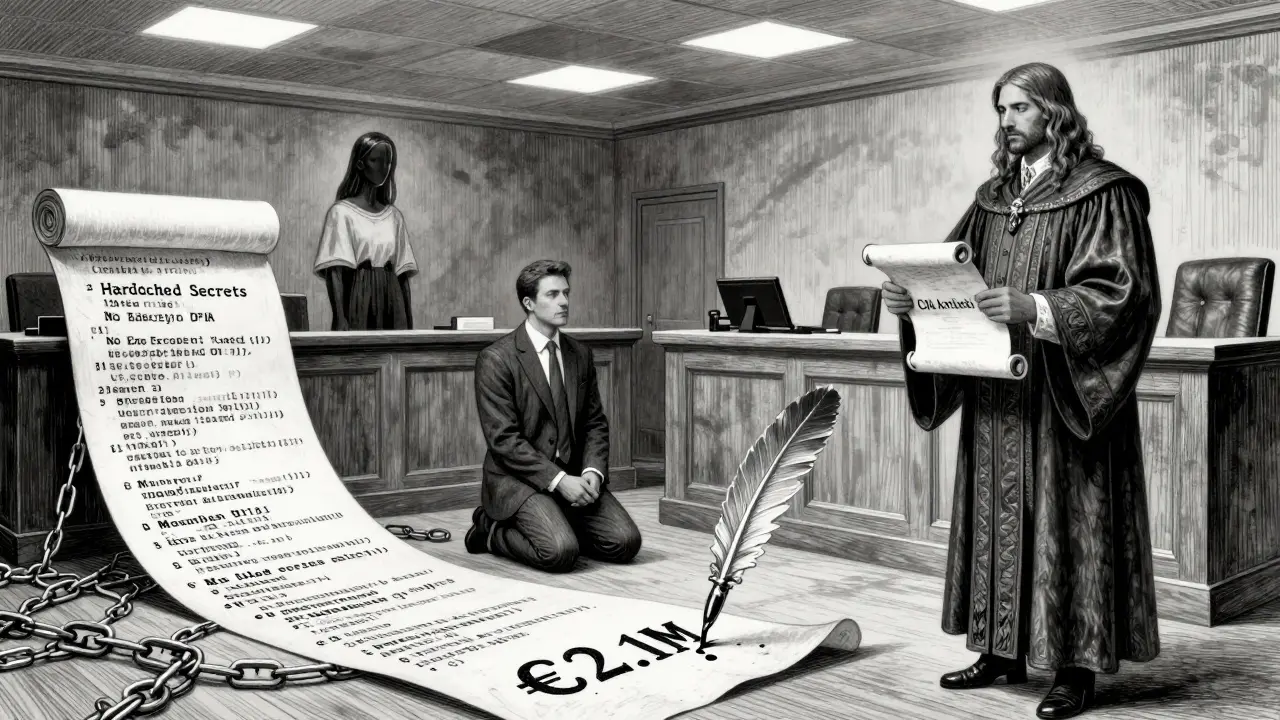

Traditional code is written by humans. You know what it does. You can trace every line back to a decision. Vibe-coded code? It’s generated in seconds by an AI trained on billions of lines of public code. You don’t know if it copied a snippet from a GPL-licensed project. You don’t know if it’s silently collecting user emails. You don’t know if it’s storing data in an unencrypted buffer.

The EU’s Cyber Resilience Act (CRA) raises the stakes. It entered into force in December 2024, and its obligations phase in through December 2027. Under it, if your AI-generated code ships with a vulnerability that leaks customer data, you are legally responsible, even if you didn’t write it. The same applies under GDPR. If your app collects personal data without clear consent, and that code came from an AI prompt, you’re still liable. The AI didn’t break the law. You did, by deploying it without checking.

A German e-commerce company learned this the hard way in late 2025. They used GitHub Copilot to build a checkout flow. The AI generated code that collected email addresses and IP addresses but didn’t ask for consent. When a user filed a complaint, regulators fined the company €2.1 million. The company didn’t even realize the code was collecting that data until the audit.

What You Must Review Before Launch

You can’t skip legal review just because the code was fast to write. Here’s what you need to check for every vibe-coded feature that touches customer data:

- Data collection points: Where does the code collect, store, or transmit personal data? AI tools often add tracking pixels, cookies, or API calls you didn’t ask for. A study found 4.7 hidden data collection points per 1,000 lines of AI-generated code.

- Data storage: Is data encrypted at rest and in transit? AES-256 is a widely used baseline for data at rest. Many AI-generated snippets use weak encryption or store data in plaintext logs.

- Access controls: How many people can access the data? AI code often creates overly permissive database roles. Limit access to no more than three privilege levels.

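- Data retention: How long is data kept? GDPR’s storage-limitation principle requires that personal data be kept no longer than necessary for its purpose. Neither GDPR nor CCPA sets a fixed number of days, so define a retention period yourself (this checklist uses 180 days as a ceiling for non-essential data) and enforce it. AI tools rarely include auto-deletion logic.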

- Third-party integrations: Does the code call external APIs? Many AI-generated snippets hardcode API keys, secrets, or tokens. A GuidePoint Security audit found 63% of vibe-coded apps had hardcoded credentials.

- Consent mechanisms: Does the app ask for permission before collecting data? Apple and Google now require users to see a privacy notice before any data is processed. AI tools often generate placeholder text like "User consents" without actual UI.

- Export controls: Does the code use encryption algorithms that are regulated? Some AI tools generate code with strong encryption (like AES-256 or RSA-4096) that falls under U.S. export controls. Deploying it without a license can trigger a Commerce Department investigation.
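
The consent item in the checklist above can be made concrete in code. What follows is a minimal Python sketch, not a compliance implementation: `SignupStore`, `ConsentRecord`, and `ConsentRequiredError` are hypothetical names, and the in-memory dictionaries stand in for a real database. It shows one way to refuse to store an email address unless a timestamped consent record already exists for that user and purpose.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


class ConsentRequiredError(Exception):
    """Raised when personal data is submitted without recorded consent."""


@dataclass
class ConsentRecord:
    user_id: str
    purpose: str
    granted_at: datetime  # stored so the consent can be evidenced later


@dataclass
class SignupStore:
    """Hypothetical in-memory store; a real app would use a database."""
    consents: dict = field(default_factory=dict)
    emails: dict = field(default_factory=dict)

    def record_consent(self, user_id: str, purpose: str) -> ConsentRecord:
        # Call this only from a real UI action (for example, a ticked checkbox).
        record = ConsentRecord(user_id, purpose, datetime.now(timezone.utc))
        self.consents[(user_id, purpose)] = record
        return record

    def save_email(self, user_id: str, email: str, purpose: str = "newsletter") -> None:
        # Refuse to persist personal data when no consent record exists.
        if (user_id, purpose) not in self.consents:
            raise ConsentRequiredError(f"no consent from {user_id} for {purpose}")
        self.emails[user_id] = email
```

A reviewer can then test the behavior directly: calling `save_email` before `record_consent` should raise, and calling it afterwards should succeed. Whether a given consent flow is legally sufficient is still a question for counsel.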

The 9-Step Legal Review Process

The Cloud Security Alliance (CSA) and legal experts recommend this workflow:

- Map all data touchpoints: Use automated tools like Snyk AI to scan the code for data flows. It catches 82% of hidden collection points.

- Identify the legal jurisdiction: Are users in the EU, California, Brazil? Each region has different rules. GDPR, CCPA, and LGPD all apply differently.

- Perform a DPIA (Data Protection Impact Assessment): Required under GDPR Article 35 when processing is likely to result in a high risk to individuals. If your feature falls into that category, this isn’t optional.

- Verify consent mechanisms: Manually test the UI. Can users opt out? Is the notice clear? Is consent recorded?

- Check for hardcoded secrets: Run a secret scanner (like TruffleHog or GitLeaks) on the codebase. Remove any keys, passwords, or tokens.

- Review data retention logic: Does the code delete data after 180 days? If not, add it.

- Validate third-party dependencies: Check if any libraries in the AI-generated code have known CVEs or restrictive licenses.

- Document everything: AI code rarely includes comments. Write your own data flow diagrams, privacy notices, and compliance logs.

- Get legal sign-off: Have a privacy lawyer or compliance officer approve the feature before deployment. Allocate at least 22 hours per feature for this step.

Where Vibe Coding Fails Most

Some industries are far riskier than others. In healthcare, AI-generated code for patient portals had a 92% non-compliance rate with HIPAA in a December 2025 FDA audit. Why? The AI didn’t understand PHI (protected health information) rules. It didn’t know encryption wasn’t enough: access logs, audit trails, and patient rights workflows are required.

In finance, 76% of AI-coded payment systems failed PCI DSS compliance. The AI kept generating code that stored full credit card numbers in logs or used outdated TLS versions. Even worse, it generated fake privacy policies. A J.P. Morgan study found 89% of AI-generated privacy policies contained false data flow descriptions. Users were misled. Companies were fined.

And don’t assume enterprise tools are safer. Only 17% of companies use vibe coding for customer-facing apps. Most stick to internal tools. Why? Legal teams won’t sign off on public-facing AI code without a strict review process.

What’s Changing in 2026

Regulators are catching up. On January 15, 2026, the EU AI Office announced it will begin targeted audits of AI-generated code in customer apps starting March 1. They’ll check for:

- Missing DPIAs

- Unencrypted data

- Hardcoded secrets

- Non-compliant consent flows

How to Get It Right

LuminPDF, a PDF tool company, turned this around. They built a 14-step legal review checklist. They trained developers on IAPP’s AI Privacy Professional certification. They hired a compliance engineer to audit every AI-generated feature. Within six months, their compliance issues dropped by 89%.

You don’t need a huge team. Start with this:

- Use Snyk AI or CodeQL to scan for data leaks and secrets.

- Require every vibe-coded feature to have a DPIA before code review.

- Block hardcoded secrets with pre-commit hooks.

- Train your team on GDPR, CCPA, and CRA basics.

- Make legal sign-off mandatory, not optional.
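
The pre-commit item in the list above can be sketched in a few lines. This is an illustration only: the three patterns and the names `RULES`, `find_secrets`, and `main` are assumptions made for this example, and they are far less thorough than a dedicated scanner such as TruffleHog or GitLeaks. The idea is simply to scan the files about to be committed and return a non-zero status if anything looks like a credential.

```python
import re
from pathlib import Path

# Illustrative patterns only; real scanners ship hundreds of tuned rules.
RULES = [
    ("aws-access-key-id", re.compile(r"\bAKIA[0-9A-Z]{16}\b")),
    ("private-key-header", re.compile(r"-----BEGIN (?:[A-Z]+ )?PRIVATE KEY-----")),
    ("generic-assignment", re.compile(
        r"(api[_-]?key|secret|token|password)\w*\s*[:=]\s*['\"][^'\"\s]{8,}['\"]",
        re.IGNORECASE,
    )),
]


def find_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line_number, rule_name) for every line that matches a rule."""
    hits = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for name, pattern in RULES:
            if pattern.search(line):
                hits.append((line_no, name))
    return hits


def main(paths: list[str]) -> int:
    """Scan the given files; return 1 if any possible secret is found, else 0."""
    failed = False
    for path in paths:
        text = Path(path).read_text(encoding="utf-8", errors="ignore")
        for line_no, rule in find_secrets(text):
            print(f"{path}:{line_no}: possible secret ({rule})")
            failed = True
    return 1 if failed else 0
```

To use it as a hook, call `sys.exit(main(sys.argv[1:]))` from the script’s entry point and pass the staged file names as arguments; a non-zero exit status aborts the commit. Treat it as a first line of defense and keep a full scanner in CI.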

What Happens If You Ignore This?

Under GDPR Article 83(5), fines for the most serious data protection violations can reach €20 million or 4% of global annual turnover, whichever is higher. The rule is not specific to AI, and it applies just as much to code an AI wrote for you. That’s not a warning. That’s a bankruptcy risk.

A U.S. fintech startup was fined $18 million in 2025 after an AI-generated feature leaked customer Social Security numbers. The CEO claimed, "We didn’t write that code." The court didn’t care. The company shut down six months later.

You can’t outsource responsibility to an AI. The law holds the developer, the product owner, and the company accountable. Not the machine.

Do I need a lawyer to review AI-generated code?

Yes, if the code touches customer data. Legal teams aren’t just for contracts anymore. They need to review AI-generated code for compliance with GDPR, CCPA, CRA, and other regulations. A single missed consent flow or hardcoded secret can trigger a €20 million fine. Don’t rely on developers alone. Involve a privacy lawyer before launch.

Can I use AI to write my privacy policy?

No. AI-generated privacy policies are dangerously inaccurate. A J.P. Morgan study found 89% of them misrepresent how data is collected or used. Regulators consider this deceptive. Always have a human privacy lawyer draft or review your policy. AI can help draft a first version, but never deploy it without legal validation.

Is vibe coding banned in regulated industries?

No, but it’s heavily restricted. Healthcare and finance sectors have near-zero tolerance for unreviewed AI code. In 2025, 92% of AI-generated healthcare apps failed HIPAA audits. You can use vibe coding, but only if you follow a strict 9-step legal review process and document every step. Most companies in these industries avoid it entirely unless they have dedicated compliance teams.

What tools can help me audit vibe-coded code?

Use Snyk AI to detect hidden data flows and secrets (it finds 82% of issues). Combine it with TruffleHog for hardcoded credentials, OWASP ZAP for API security testing, and GitLeaks for repository scans. For compliance documentation, use tools like OneTrust or TrustArc to auto-generate DPIAs, but always have a human verify them.

How long should a legal review take for vibe-coded code?

At least 22 hours per feature, according to Debevoise & Plimpton’s AI Practice Report. That’s three times longer than reviewing traditional code. Why? You’re not just checking for bugs. You’re mapping data flows, verifying consent, checking encryption, and ensuring documentation matches reality. Rushing this step is the most common cause of fines.

Will AI tools get better at compliance soon?

Not without pressure. GitHub’s security lead says AI can improve compliance, but only if developers use it correctly. Right now, most AI tools generate code that ignores legal requirements. They’re optimized for speed, not safety. Until regulators mandate compliance as a core feature (like the EU’s Digital Product Passport), you can’t rely on AI to be compliant. You must audit it yourself.