Most large language models (LLMs) are trained on general text: books, websites, articles. But if you ask them to read a medical chart, parse a legal contract, or analyze financial reports, they often stumble. Why? Because they don’t know where to look. Attention patterns are the secret sauce that tells a model which parts of the input matter most. When you optimize these patterns for a specific domain, you’re not just teaching it new words; you’re rewiring how it thinks.

What Attention Patterns Really Do

Transformers rely on attention mechanisms to weigh the importance of each word in a sentence. In a general-purpose model, attention might spread evenly across terms like "patient," "symptom," "medication," and "dosage." But in a medical context, "hypertension" should trigger a cascade of related concepts: blood pressure, ACE inhibitors, renal function. A generic model doesn’t naturally learn those links. It sees them as separate words. Optimizing attention means forcing the model to focus its computational power on the right relationships. Think of it like training a detective to ignore street noise and zero in on fingerprints at a crime scene. The model still sees everything, but it learns to ignore 80% of it and lock onto the 20% that matters.

How It’s Done: Four Main Approaches

There’s no single way to tweak attention. Four methods dominate real-world use:

- Dynamic knowledge injection: The model pulls in domain-specific context during inference. For example, when it sees "aspirin," it automatically recalls drug interaction tables from a medical database.

- Static knowledge embedding: Domain knowledge is baked into attention weights during training. This is common in models fine-tuned on curated legal documents, where terms like "consideration" or "force majeure" get higher attention scores by default.

- Modular adapters: Small, trainable layers are added between transformer blocks to redirect attention flow. These act like traffic cops, guiding focus toward domain-relevant patterns without changing the core model.

- Prompt optimization: The input itself is structured to nudge attention. Instead of "Explain this contract," you write: "Identify clauses related to liability and termination in this legal document." The model learns to associate those phrases with high-priority attention.
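
To make the modular adapter idea from the list above more concrete, here is a minimal NumPy sketch of a residual bottleneck adapter. The sizes (`d_model`, `bottleneck`) and the names `W_down`, `W_up`, and `adapter` are illustrative assumptions, not taken from any particular library, and real adapters are trained inside a deep learning framework rather than built by hand like this.

```python
import numpy as np

rng = np.random.default_rng(0)

d_model = 16     # hidden size of the frozen transformer block (illustrative)
bottleneck = 4   # adapter width (hypothetical choice)

# Adapter weights: the only trainable parameters in this sketch.
W_down = rng.normal(scale=0.02, size=(d_model, bottleneck))
W_up = np.zeros((bottleneck, d_model))  # zero init: the adapter starts as identity


def adapter(h):
    """Residual bottleneck adapter: h + relu(h @ W_down) @ W_up."""
    return h + np.maximum(h @ W_down, 0.0) @ W_up


# Three token representations coming out of a frozen block.
h = rng.normal(size=(3, d_model))
out = adapter(h)

adapter_params = W_down.size + W_up.size
print("adapter parameters:", adapter_params)
print("identity at initialization:", np.allclose(out, h))
```

Because the up-projection starts at zero, the adapter initially passes representations through unchanged, so training begins from the base model's behavior and only gradually redirects it.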

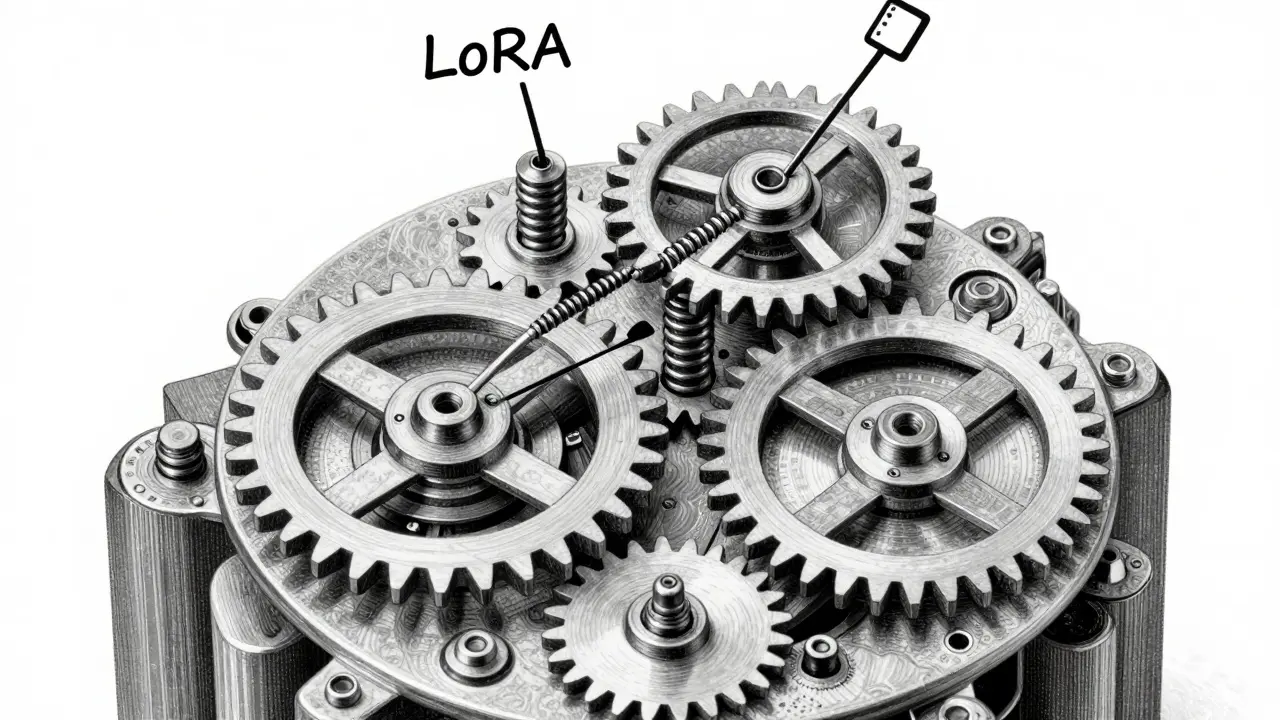

The most popular method? LoRA (Low-Rank Adaptation). It doesn’t retrain the whole model. Instead, it adds tiny, trainable low-rank matrices alongside the query, key, and value projections inside attention layers, while the original weights stay frozen. These matrices adjust how attention scores are calculated, like fine-tuning a camera lens instead of replacing the whole camera.
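
As a rough illustration of that mechanism, the sketch below applies a low-rank update to a single query projection in NumPy. It assumes the standard LoRA formulation, W + (alpha / r) * B @ A, with B initialized to zero. The shapes, the `alpha` value, and the function name `lora_project` are assumptions made for this example, not part of any library API.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, r, alpha = 64, 8, 16  # illustrative sizes; r is the LoRA rank

W_q = rng.normal(size=(d_model, d_model))      # frozen pretrained query projection
A = rng.normal(scale=0.01, size=(r, d_model))  # trainable, small random init
B = np.zeros((d_model, r))                     # trainable, zero init


def lora_project(x, W, A, B, alpha, r):
    """Compute x @ (W + (alpha / r) * B @ A).T without forming the full update."""
    return x @ W.T + (alpha / r) * (x @ A.T) @ B.T


x = rng.normal(size=(5, d_model))  # five token embeddings

q_before = x @ W_q.T
q_start = lora_project(x, W_q, A, B, alpha, r)
print("unchanged before training:", np.allclose(q_before, q_start))

# Stand-in for a trained B: random values in place of learned ones.
B_trained = rng.normal(scale=0.01, size=(d_model, r))
q_after = lora_project(x, W_q, A, B_trained, alpha, r)

# The same update written as an explicit weight delta of rank at most r.
delta_W = (alpha / r) * B_trained @ A
print("rank of the update:", np.linalg.matrix_rank(delta_W))
```

Only `A` and `B` would receive gradients during training. Since queries feed directly into the attention scores, even this small update shifts which tokens attend to which.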

Why LoRA Dominates Real-World Use

Full fine-tuning means retraining billions of parameters. That costs tens of thousands of dollars and weeks of GPU time. LoRA updates only 0.1% to 3% of parameters, and most of those are in attention layers. A healthcare startup in Boston cut their fine-tuning costs from $28,000 to $1,200 per model iteration using LoRA on medical notes. They kept the base model’s original weights frozen and just tweaked the attention paths that handle symptoms, diagnoses, and drug names.

Performance gains are real. Benchmarks show domain-specific attention optimization improves accuracy by 15% to 35% on tasks like medical question answering or contract clause extraction. Inference speed is also reported to improve by 60% to 80%, attributed to the model not wasting cycles on irrelevant context; note that LoRA by itself leaves per-token compute unchanged once its matrices are merged into the base weights.
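
The small trainable fraction is easy to estimate with back-of-the-envelope arithmetic. The sketch below assumes a hypothetical 7B-parameter model with 32 layers and a hidden size of 4096, with LoRA applied to the square query, key, and value projections only. These shapes and the helper name `lora_trainable_params` are assumptions chosen for illustration; real architectures differ.

```python
def lora_trainable_params(d_model, n_layers, rank, n_projections=3):
    """Count LoRA parameters when each adapted projection is d_model x d_model.

    Every adapted projection gets two matrices: A (rank x d_model)
    and B (d_model x rank), so 2 * rank * d_model parameters each.
    """
    return n_layers * n_projections * 2 * rank * d_model


# Hypothetical 7B-class shapes; assumed for this example only.
D_MODEL, N_LAYERS, TOTAL_PARAMS = 4096, 32, 7_000_000_000

for rank in (2, 8, 16, 64):
    trainable = lora_trainable_params(D_MODEL, N_LAYERS, rank)
    share = 100 * trainable / TOTAL_PARAMS
    print(f"rank {rank:>2}: {trainable:>11,} trainable parameters ({share:.3f}% of the model)")
```

Under these assumptions the trainable share stays below 1% even at rank 64. Adapting more matrices, such as the output projection or the feed-forward layers, pushes the figure up.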

The Trade-Off: Specialization Comes at a Cost

Here’s the catch: the more you optimize attention for one domain, the worse the model gets at everything else. A model fine-tuned for one domain might score 92.3 on its in-domain benchmark (MedQA, for a medical model) but drop 18.7 points on GLUE, a general language understanding test. Why? Because its attention heads are now locked into domain jargon. When a legal model sees "consideration," it doesn’t think about the everyday concept; it thinks about contract clauses. That’s useful in law, disastrous in casual conversation.

Some models become brittle. Google Health’s Med-Gemini scored 92.1 on standard medical questions but crashed on rare conditions. Why? Its attention pattern had learned to focus on common symptoms and treatments. When faced with something unusual, it didn’t know where to look. It was too specialized.

When It Works Best, and When It Doesn’t

Attention optimization shines in domains with:

- Clear, stable terminology (e.g., medical codes, legal statutes)

- Structured input formats (e.g., patient records, financial statements)

- High-stakes outcomes where accuracy matters more than flexibility

That’s why healthcare and legal sectors lead adoption. In healthcare, 38% of domain-specific LLMs use attention optimization. In legal tech, it’s 27%. A law firm in Chicago reported a 40% speed boost in contract review after tuning attention to identify indemnity clauses, arbitration terms, and governing law sections.

But it fails when:

- Domains are messy or evolving (e.g., social media trends, news headlines)

- Data is noisy or unstructured (e.g., patient notes with typos, handwritten legal drafts)

- The model needs to switch between contexts (e.g., a customer service bot handling both billing and technical support)

One AI researcher on Hugging Face spent three months trying to optimize attention for financial news before giving up. "We didn’t have clean, consistent data," they wrote. "The model kept fixating on ticker symbols and ignoring market sentiment. We switched to RAG, and it worked in two weeks."

How to Implement It: A Practical Five-Step Guide

If you’re serious about optimizing attention, here’s how to start:

- Analyze domain needs: Use tools like BertViz to visualize how your current model attends to input. Where does it get distracted? Which words trigger strong attention? In legal text, you might notice attention spikes on "shall," "therefore," or "pursuant to." That’s your signal.

- Choose your method: For most teams, start with LoRA. It’s supported by Hugging Face’s PEFT library and requires minimal code changes. Use modular adapters only if you need to swap attention behavior on the fly.

- Set rank parameters: LoRA’s rank determines how much it can change attention. Start with rank 8 or 16. Too low (like 2) and the effect is weak. Too high (like 64) and you give up much of LoRA’s parameter savings and raise the risk of overfitting, drifting toward the cost profile of full fine-tuning.

- Train with clean, focused data: Use domain-specific corpora with high signal-to-noise. Don’t throw in random web text. For medical models, use PubMed abstracts, clinical trial reports, or annotated EHRs. For legal, use court opinions or standardized contract templates.

- Validate attention behavior: Run diagnostic tests. Give the model sentences with domain terms embedded in noise. Does it still focus on the right words? Check for "attention collapse," when one head dominates and ignores context. Use Hugging Face’s diagnostic tools to spot imbalanced heads.
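
One simple way to approach the validation step above is to measure the entropy of each head's attention distribution: a head that puts nearly all of its weight on one token has entropy close to zero. The sketch below uses synthetic scores and an arbitrary threshold (10% of the maximum possible entropy), both of which are assumptions for illustration. Low entropy is only a proxy for the collapse described here, not an established definition. With Hugging Face Transformers, real attention tensors can be obtained by passing `output_attentions=True` to a model call.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def head_entropy(attn):
    """Mean attention entropy per head, in nats.

    attn has shape (heads, queries, keys) and each row sums to 1.
    """
    eps = 1e-12  # avoids log(0)
    per_query = -(attn * np.log(attn + eps)).sum(axis=-1)
    return per_query.mean(axis=-1)


rng = np.random.default_rng(0)
n_heads, seq_len = 4, 10

# Synthetic attention scores standing in for a real model's output.
scores = rng.normal(size=(n_heads, seq_len, seq_len))
scores[0, :, 3] += 50.0  # head 0 artificially fixates on token 3

attn = softmax(scores)
entropy = head_entropy(attn)
max_entropy = np.log(seq_len)  # entropy of perfectly uniform attention

# Flag heads below 10% of the maximum entropy (threshold is an assumption).
collapsed = [h for h in range(n_heads) if entropy[h] < 0.1 * max_entropy]
print("per-head entropy:", np.round(entropy, 3))
print("suspiciously focused heads:", collapsed)
```

A flagged head is not necessarily broken, since some heads legitimately attend to a single position. The value of the check is in comparing the same heads before and after fine-tuning.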

Common Pitfalls and How to Fix Them

- Attention collapse: One attention head starts doing all the work. Fix: Use diversity loss during training to encourage heads to specialize differently.

- Context bleeding: Domain attention leaks into general tasks. Fix: Mix domain data with general text during training. This keeps the model from forgetting how to chat or answer open-ended questions.

- Overfitting to training data: The model only works on the exact examples it saw. Fix: Add synthetic variations, such as rewording sentences, swapping synonyms, and introducing typos.
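
Two of the fixes above, mixing in general text and adding synthetic variations, can be sketched with the standard library alone. The helper names (`add_typo`, `swap_synonyms`, `mix`), the synonym table, and the 20% general-text ratio are all hypothetical choices for this example; a real pipeline would use a proper augmentation tool and a ratio tuned on validation data.

```python
import random


def add_typo(text, rng):
    """Swap two adjacent characters at a random position."""
    if len(text) < 2:
        return text
    i = rng.randrange(len(text) - 1)
    chars = list(text)
    chars[i], chars[i + 1] = chars[i + 1], chars[i]
    return "".join(chars)


def swap_synonyms(text, synonyms):
    """Replace whole words that appear in a small synonym table."""
    return " ".join(synonyms.get(word, word) for word in text.split())


def mix(domain, general, general_ratio, rng):
    """Add sampled general examples so they make up general_ratio of the result."""
    n_general = round(len(domain) * general_ratio / (1 - general_ratio))
    sample = [rng.choice(general) for _ in range(n_general)]
    mixed = domain + sample
    rng.shuffle(mixed)
    return mixed


rng = random.Random(0)
SYNONYMS = {"terminate": "end"}  # hypothetical table

domain = [
    "either party may terminate this agreement",
    "the supplier shall indemnify the buyer",
    "this agreement is governed by the laws of the state",
    "disputes shall be resolved by arbitration",
]
general = ["how was your weekend", "the weather is nice today", "thanks for the help"]

augmented = [swap_synonyms(s, SYNONYMS) for s in domain]
noisy = [add_typo(s, rng) for s in domain]
training_set = mix(domain + augmented + noisy, general, general_ratio=0.2, rng=rng)
print(len(training_set), "examples in the mixed training set")
```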

The PEFT repository on GitHub had 147 open issues related to attention tuning as of late 2024. Most are about these exact problems. The community’s advice? Start small. Test one domain. Don’t try to optimize for both medical and legal at once.

The Future: Hybrid Systems Are Winning

The most successful models aren’t just optimized for attention; they combine it with other techniques. OpenAI’s December 2024 update introduced "attention-guided retrieval," where attention patterns direct the model to pull in external data only when needed. In finance, this means the model uses its tuned attention to detect "earnings call" or "SEC filing," then retrieves the latest report to answer questions.

Google’s new Domain-Adaptive Attention Modules (DAAM) do something similar: they dynamically reconfigure attention heads based on input signals. If the model sees a medical term, it switches to a medical attention profile. If it sees a stock ticker, it flips to a financial one.

Microsoft Research’s attention pruning technique cuts 40% of parameters without losing accuracy. That’s huge for edge devices and low-power systems.

Who’s Leading the Way?

NVIDIA dominates the hardware side: 78% of attention optimization projects run on their GPUs. Hugging Face leads the software side, used in 65% of implementations. Their PEFT library, updated monthly, is the go-to toolkit for engineers.

Regulations are catching up. The EU AI Act now requires transparency in attention mechanisms for medical AI. Companies can’t just say "it’s a black box." They have to show how attention works. That’s pushing more teams toward interpretable methods like LoRA over opaque approaches.

Final Thought: It’s Not About Bigger Models. It’s About Smarter Focus.

The race isn’t to build bigger LLMs. It’s to make smaller ones smarter in specific areas. Optimizing attention patterns lets you take a general-purpose model and turn it into a domain expert without the cost of retraining everything. But it’s not magic. It requires clean data, careful validation, and an understanding of how attention really works. If you do it right, your model won’t just answer questions. It’ll know where to look.

What’s the difference between attention optimization and full fine-tuning?

Full fine-tuning updates every parameter in the model, which can cost tens of thousands of dollars and require weeks of training. Attention optimization, like LoRA, only tweaks a tiny fraction (usually under 3%) and focuses only on the attention layers. It’s faster, cheaper, and often just as accurate for domain-specific tasks.

Can I use attention optimization on any LLM?

Only transformer-based models, like GPT, BERT, Llama, or Mistral. Models that don’t use attention mechanisms, like older RNNs or CNNs, can’t benefit from this approach. Most modern LLMs are transformers, so this applies to nearly all current systems.

Does attention optimization work with small models?

Yes. In fact, it’s often more effective on smaller models. A 7B-parameter model with optimized attention can outperform a 70B model with generic attention on domain-specific tasks. The key isn’t size; it’s focus.

Why do some teams prefer RAG over attention optimization?

RAG (Retrieval-Augmented Generation) pulls in external data on the fly, so it doesn’t need to retrain the model. It’s more flexible for changing domains and doesn’t risk over-specialization. But it’s slower and less precise. Attention optimization is faster and more accurate when the domain is stable and data is clean.
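
For contrast, here is a toy sketch of the retrieval half of RAG: score documents against the question, then prepend the best match to the prompt. It uses bag-of-words cosine similarity purely to stay self-contained. The document store, the function names, and the prompt layout are assumptions for this example; production systems typically use learned embeddings and a vector index.

```python
import math
from collections import Counter


def bow(text):
    """Bag-of-words term counts."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, docs, k=1):
    """Return the k documents most similar to the query."""
    q = bow(query)
    return sorted(docs, key=lambda d: cosine(q, bow(d)), reverse=True)[:k]


def build_prompt(question, docs):
    """Prepend retrieved context to the question; no model weights change."""
    context = "\n".join(retrieve(question, docs))
    return f"Context:\n{context}\n\nQuestion: {question}"


# Hypothetical document store.
DOCS = [
    "aspirin may increase bleeding risk when combined with warfarin",
    "force majeure clauses excuse performance after unforeseeable events",
    "quarterly earnings call transcripts summarize revenue guidance",
]

print(build_prompt("what does a force majeure clause cover", DOCS))
```

Updating the system for a new domain means changing `DOCS`, not retraining, which is the flexibility the answer above refers to. The extra retrieval step on every query is where the added latency comes from.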

How long does it take to implement attention optimization?

For engineers familiar with transformers, expect a 6- to 12-week learning curve. Setting up LoRA with Hugging Face might take a day, but tuning attention behavior, diagnosing imbalances, and validating results takes weeks. Most teams underestimate the time needed for data cleaning and attention diagnostics.

Is attention optimization used in production today?

Yes. In healthcare and legal tech, it’s standard. Companies like Philips, Cerner, and legal AI startups use it to power contract analysis, clinical decision support, and regulatory compliance tools. Adoption grew from 12% in 2022 to 47% in 2024, though it still lags behind RAG, which is used in 63% of cases due to its flexibility.